Centimeter-Wave Free-Space Neural Time-of-Flight Imaging

- Seung-Hwan Baek*

- Noah Walsh*

- Ilya Chugunov

- Zheng Shi

- Felix Heide

SIGGRAPH 2022

Depth sensors have emerged as a cornerstone sensor modality with diverse applications in personal hand-held devices, robotics, scientific imaging, autonomous vehicles, and more. In particular, correlation Time-of-Flight (ToF) sensors have found widespread adoption for meter-scale indoor applications such as object tracking and pose estimation. While they offer high depth resolution at competitive costs, the precision of these indirect ToF sensors is fundamentally limited by their modulation contrast. That contrast depends on the modulation frequency, which is in turn limited by the effects of photo-conversion noise. In contrast, optical interferometric methods can leverage short illumination modulation wavelengths to achieve depth precision three orders of magnitude greater than ToF, but their range is typically restricted to the sub-centimeter scale.

In this work, we merge concepts from both correlation ToF design and interferometric imaging, a step towards bridging the gap between these methods. We propose a computational ToF imaging method which optically computes the GHz ToF correlation signals in free space, before photo-conversion, and demonstrates robust depth reconstruction at meter-scale range. To this end, we repurpose electro-optic modulators from optical communication to generate and measure centimeter-wave illumination, and design a segmentation-inspired phase unwrapping network to turn high-frequency wrapped phase measurements into depth. We validate our approach both in simulation and experimentally, and demonstrate high-precision depth estimation robust to surface texture and ambient light.

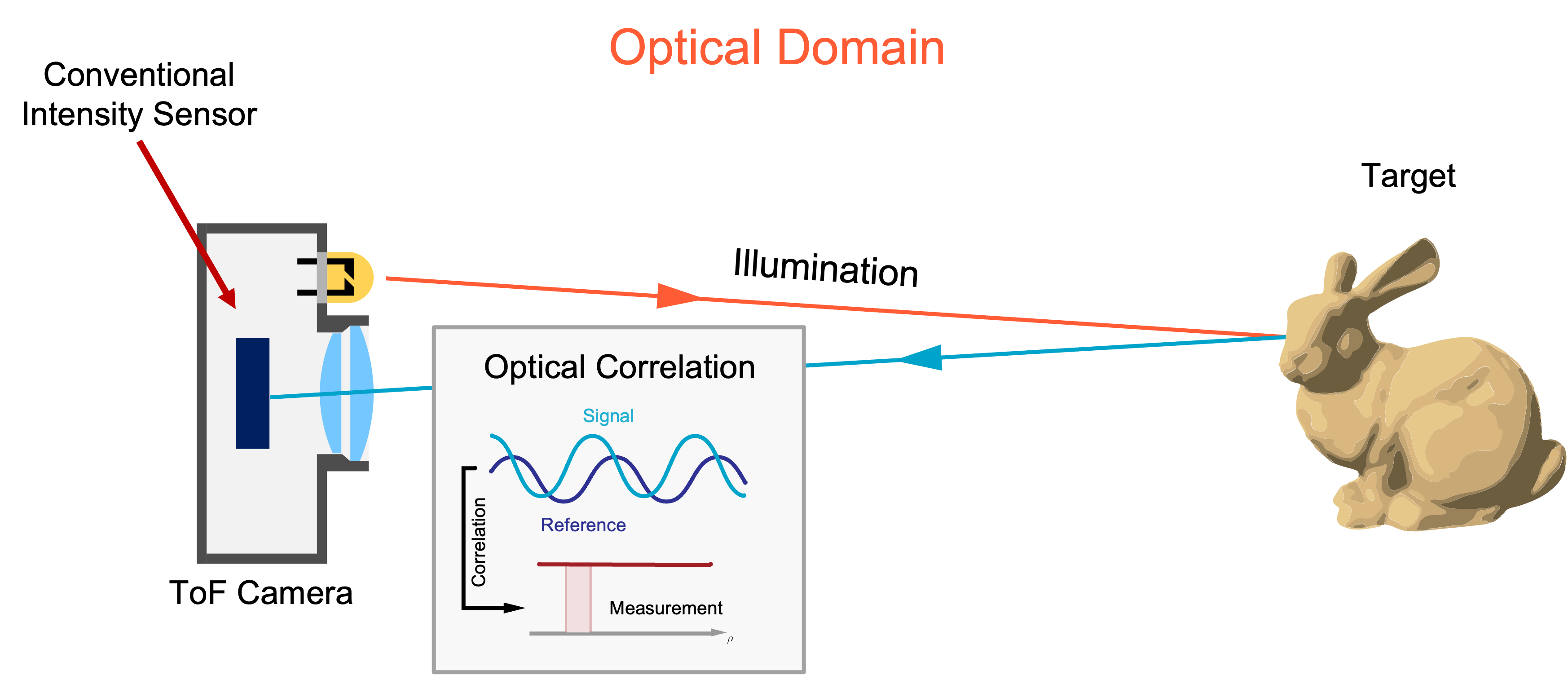

Conventional Correlation Imaging

Classical correlation time-of-flight imagers flood a scene with amplitude-modulated light, and use an array of photonic mixer devices to convert the returned phase-shifted light into an electric signal. The sensor then correlates this signal with on-board references to produce correlation measurements from which it estimates depth. Unfortunately, noise in the photon-electron conversion process limits the accuracy of these measurements, and these imagers are restricted to modulation frequencies on the order of 100 megahertz.
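As a rough illustration of how correlation measurements turn into depth, here is a minimal sketch assuming the common four-phase sampling scheme with an idealized sinusoidal correlation model. The function names, the `amplitude` and `offset` parameters, and the four-sample scheme itself are assumptions for illustration and are not taken from this page.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def correlation_samples(depth_m, freq_hz, amplitude=1.0, offset=2.0):
    """Simulate four noise-free correlation samples (assumed sinusoidal model).

    The round trip to the scene and back delays the modulation envelope by a
    phase of 4*pi*f*d/c. Each sample correlates against a reference shifted
    by k*pi/2.
    """
    phase = 4.0 * math.pi * freq_hz * depth_m / C
    return [offset + amplitude * math.cos(phase - k * math.pi / 2.0)
            for k in range(4)]

def phase_from_samples(samples):
    """Recover the wrapped phase in [0, 2*pi) from the four samples."""
    c0, c1, c2, c3 = samples
    # Differences cancel the constant offset: c1 - c3 ~ sin, c0 - c2 ~ cos.
    return math.atan2(c1 - c3, c0 - c2) % (2.0 * math.pi)

def depth_from_phase(phase, freq_hz):
    """Convert a wrapped phase to depth within one unambiguous range."""
    return C * phase / (4.0 * math.pi * freq_hz)

if __name__ == "__main__":
    freq = 100e6  # a 100 MHz imager, as mentioned in the text
    samples = correlation_samples(1.0, freq)
    print(depth_from_phase(phase_from_samples(samples), freq))
```

In a real sensor each sample carries photo-conversion noise, which is what perturbs the recovered phase and therefore the depth estimate.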

Free-Space Correlation Imaging

We shift correlation from the analog domain to the optical domain, avoiding this conversion noise in our correlation measurements. This also allows us to use a conventional intensity sensor in place of a specialized photonic mixer device array.

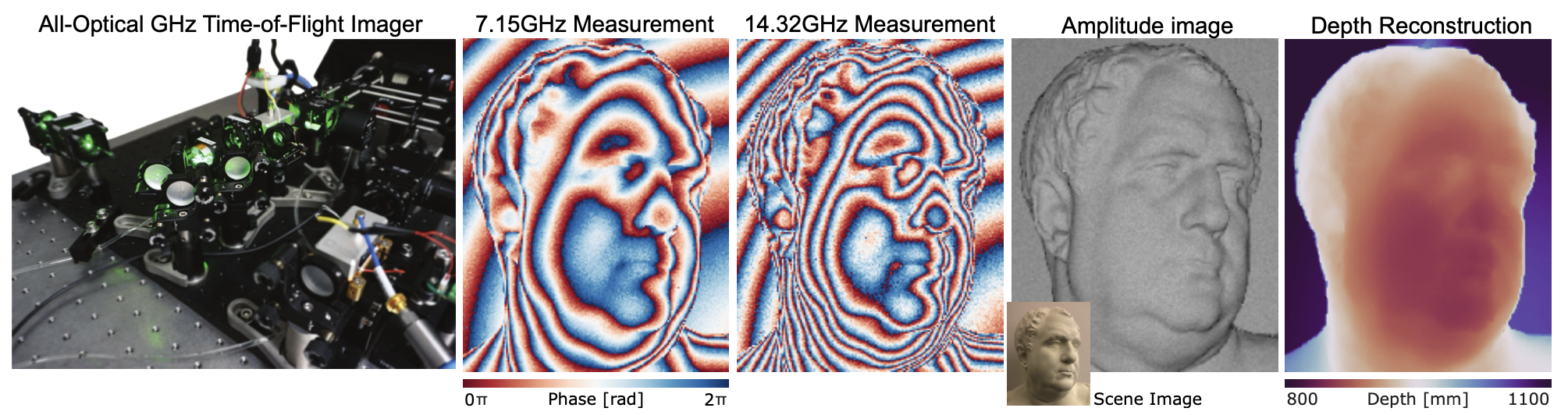

Experimental Prototype

We design an experimental prototype which relies on custom electro-optic modulators to perform free-space signal correlation. This system is not limited by silicon absorption physics, and can operate with modulation frequencies of over 10 gigahertz.

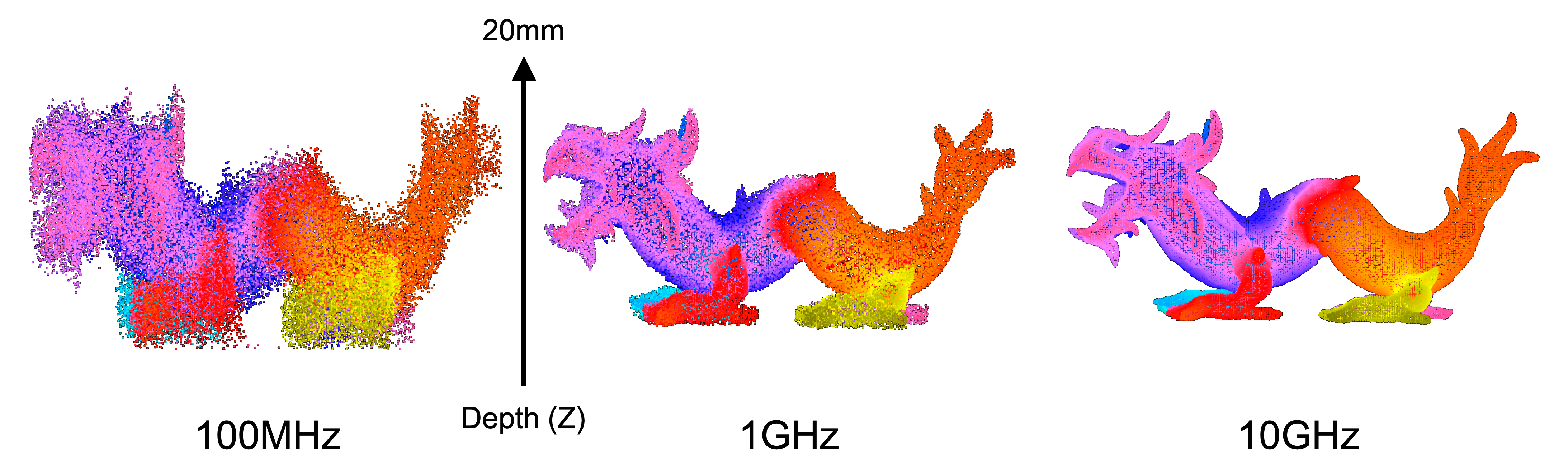

The Impact of Modulation Frequency

In correlation imaging, modulation frequency means contrast. For the same scale of noise, where a 100MHz imager gets millimeter-scale precision, a gigahertz imager would see sub-millimeter features, and a 10GHz imager could resolve micron-scale surface textures.
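The scaling can be checked with the standard phase-to-depth relation d = c·φ / (4π·f): for a fixed phase error, the depth error shrinks in proportion to the modulation frequency. The sketch below assumes a phase noise of 0.01 rad, a value chosen purely for illustration and not a measurement from the paper.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def depth_std(phase_std_rad, freq_hz):
    """Depth uncertainty implied by a given phase uncertainty.

    Follows from d = c * phi / (4 * pi * f), so the error scales as 1 / f.
    """
    return C * phase_std_rad / (4.0 * math.pi * freq_hz)

if __name__ == "__main__":
    assumed_phase_std = 0.01  # radians; illustrative assumption
    for freq in (100e6, 1e9, 10e9):
        sigma_mm = depth_std(assumed_phase_std, freq) * 1e3
        print(f"{freq / 1e9:5.1f} GHz -> {sigma_mm:.4f} mm")
```

Under this assumed noise level the depth error is roughly 2.4 mm at 100 MHz, 0.24 mm at 1 GHz, and 24 microns at 10 GHz.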

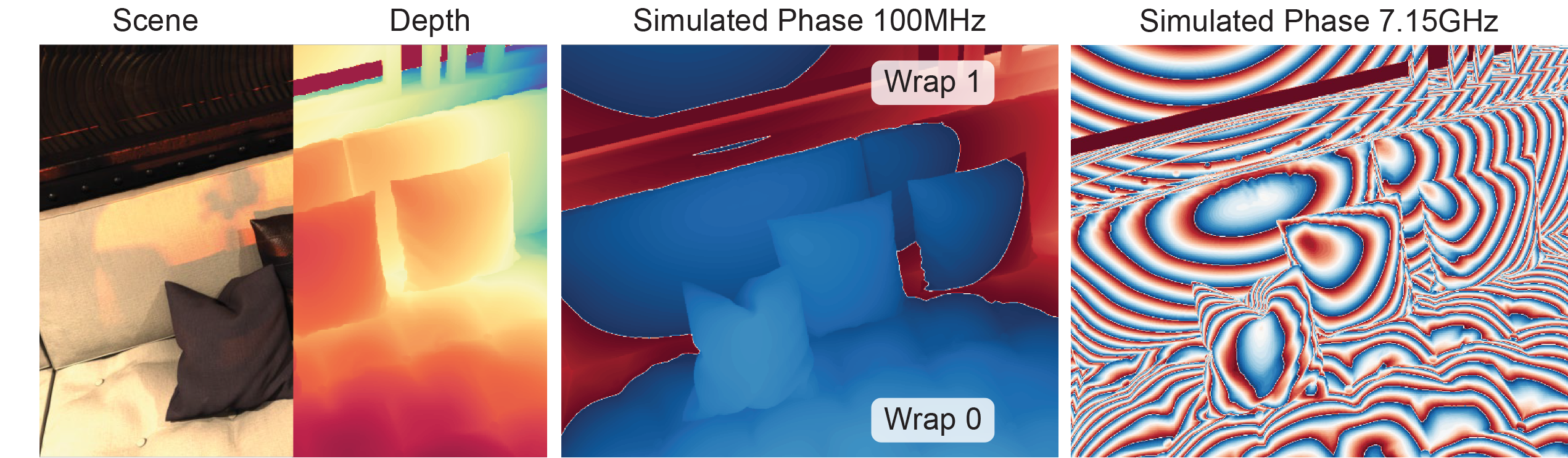

The Phase Wrapping Problem

Light often travels more than one modulation wavelength between the camera and the scene, so the measured phase wraps around. With a 100MHz imager, it's common to have to unwrap one or two of these phase boundaries to recover the real phase. When we move to a 7.15GHz setup, like our prototype imager, this wrap count jumps to the dozens.
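To make the wrap counts concrete, the following sketch computes the unambiguous range c / (2f) and the number of full wraps at a given depth. The function names and the example depths are assumptions for illustration.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def unambiguous_range(freq_hz):
    """Depth interval covered by one full 2*pi phase cycle (round trip)."""
    return C / (2.0 * freq_hz)

def wrap_count(depth_m, freq_hz):
    """Number of complete phase wraps accumulated at this depth."""
    return math.floor(depth_m / unambiguous_range(freq_hz))

if __name__ == "__main__":
    for freq, depth in ((100e6, 2.0), (7.15e9, 1.0)):
        print(f"f = {freq / 1e9:.2f} GHz: range = "
              f"{unambiguous_range(freq) * 100:.2f} cm, "
              f"wraps at {depth} m = {wrap_count(depth, freq)}")
```

At 7.15 GHz the unambiguous range is about 2.1 cm, so a surface one meter away sits 47 wraps deep, while the same calculation at 100 MHz gives a single wrap at two meters.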

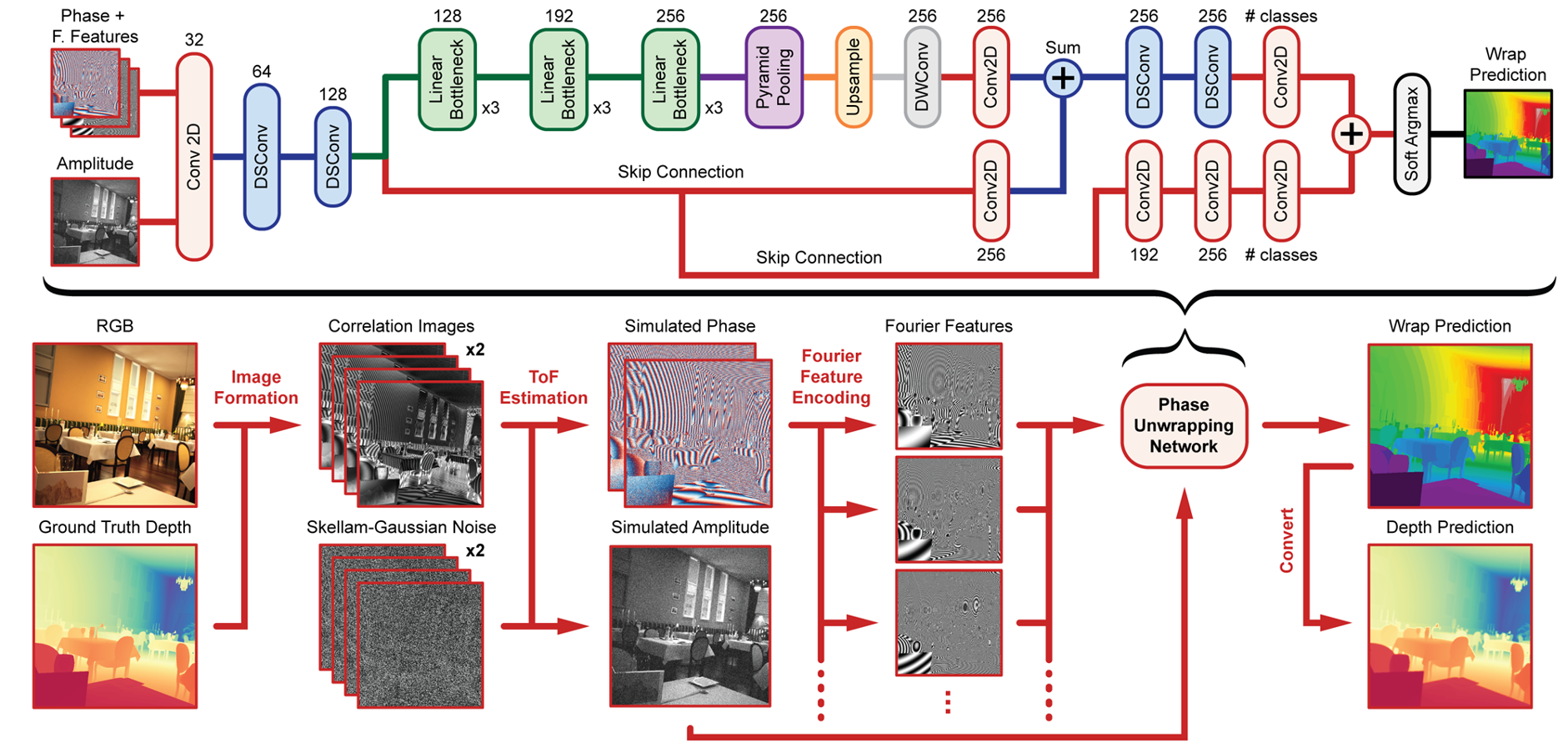

Neural Phase Unwrapping

Even with a second frequency phase measurement, we need to find the mapping from every noisy measurement pair to its real phase. We design and train a segmentation-inspired neural unwrapping network to tackle this hard problem. We use synthetic ground truth RGB and depth data to simulate gigahertz time-of-flight measurements, with realistic levels of noise tuned to real measurements. Our network outputs a probability vector for each pixel’s possible phase wrap counts (within a max range), which we supervise with cross entropy loss, just like a regular classification task. We then pass this vector through a soft argmax to produce numerical wrap count estimates, which are additionally supervised by an L1 loss to enforce the ordinal aspect of the phase wrap classification problem: it’s better to classify a fifteen-wrap region as fourteen wraps than as thirty.
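The combined supervision can be sketched for a single pixel as follows. This is a minimal illustration and not the network's actual training code: the function names, the `l1_weight` parameter, and the equal weighting of the two terms are assumptions.

```python
import math

def softmax(logits):
    """Numerically stable softmax over per-class logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_argmax(probs):
    """Expected wrap count under the predicted distribution."""
    return sum(k * p for k, p in enumerate(probs))

def unwrap_loss(logits, true_wraps, l1_weight=1.0):
    """Cross entropy on the class plus L1 on the soft-argmax wrap count."""
    probs = softmax(logits)
    cross_entropy = -math.log(probs[true_wraps])
    l1 = abs(soft_argmax(probs) - true_wraps)
    return cross_entropy + l1_weight * l1

def two_peak_logits(num_classes, true_idx, wrong_idx, p_true=0.4):
    """Hypothetical prediction with mass split between two wrap counts."""
    logits = [-50.0] * num_classes
    logits[true_idx] = math.log(p_true)
    logits[wrong_idx] = math.log(1.0 - p_true)
    return logits

if __name__ == "__main__":
    near = unwrap_loss(two_peak_logits(31, 15, 14), true_wraps=15)
    far = unwrap_loss(two_peak_logits(31, 15, 30), true_wraps=15)
    print(near, far)
```

Both example predictions assign the same probability to the true wrap count, so their cross entropy terms match. Only the L1 term separates the near miss (fourteen) from the far miss (thirty), which is the ordinal behavior described above.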

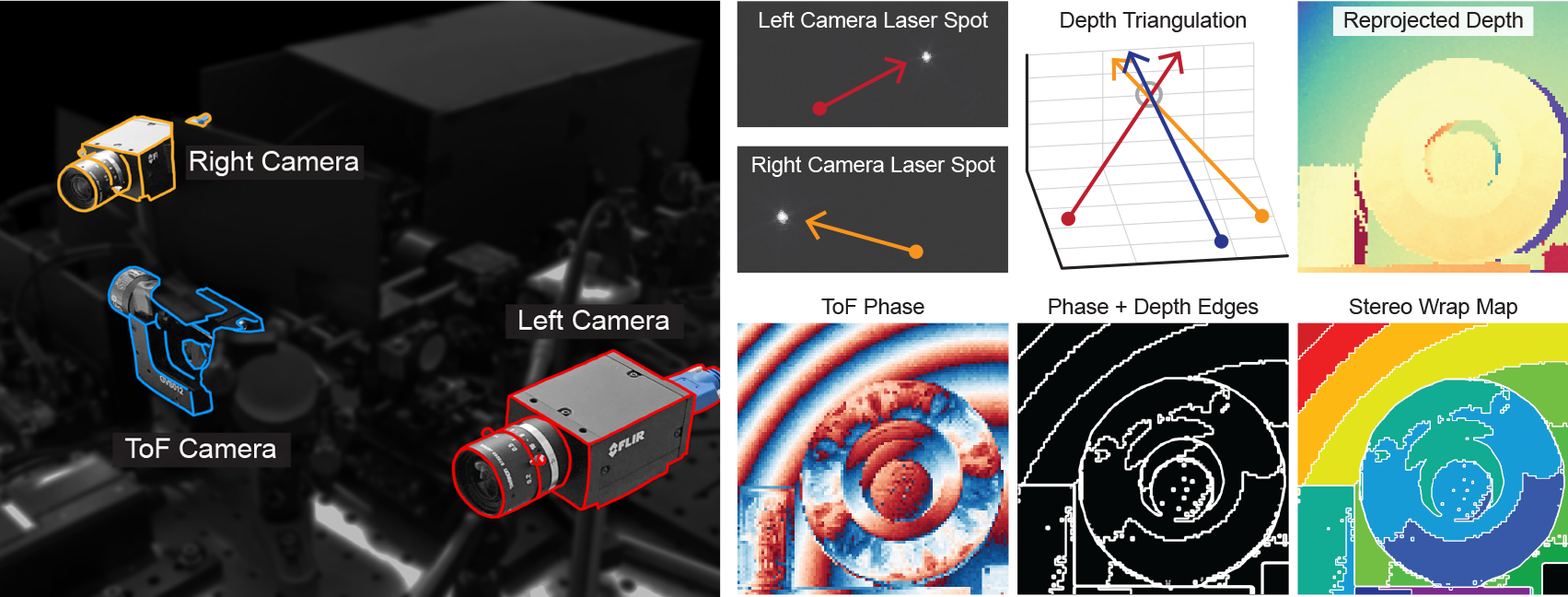

Stereo Finetuning

To specialize our network to the proposed experimental system, we finetune it on a small set of ground truth captures. To generate this data, we attach an auxiliary stereo camera system to our setup, triangulating the laser point in 3D space while it's scanning. We use this rough depth to calculate wrap counts between phase discontinuities in the time-of-flight data.
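One simple way to derive a wrap count from a coarse depth is to pick the integer that best reconciles the coarse depth with the wrapped phase. The sketch below does this per pixel, which is a simplifying assumption: the text describes assigning counts to regions between phase discontinuities, and the function names here are hypothetical. The rounding only succeeds when the coarse depth error is below half the unambiguous range (about 1 cm at 7.15 GHz).

```python
import math

C = 299_792_458.0  # speed of light in m/s

def wraps_from_rough_depth(rough_depth_m, wrapped_phase, freq_hz):
    """Integer wrap count that best matches a coarse depth estimate."""
    period = C / (2.0 * freq_hz)  # depth covered by one phase cycle
    fraction = wrapped_phase / (2.0 * math.pi)
    return round(rough_depth_m / period - fraction)

def refined_depth(wrap_count, wrapped_phase, freq_hz):
    """Depth from the wrap count plus the fractional wrapped phase."""
    period = C / (2.0 * freq_hz)
    return (wrap_count + wrapped_phase / (2.0 * math.pi)) * period

if __name__ == "__main__":
    freq = 7.15e9
    true_depth = 0.8
    period = C / (2.0 * freq)
    phase = 2.0 * math.pi * ((true_depth / period) % 1.0)
    # Pretend the stereo estimate is off by 5 mm.
    n = wraps_from_rough_depth(true_depth + 0.005, phase, freq)
    print(n, refined_depth(n, phase, freq))
```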

Reconstruction Results

We demonstrate accurate shape reconstruction for a range of object geometries, with complex phase boundaries and small inset features such as the grooves of the ceramic vase. We also demonstrate robust reconstruction of conventionally difficult surface materials such as a polished metal helmet. This demonstrates the photon efficiency of our system, as we only need a small fraction of the illumination to return to the sensor in order to reliably estimate a point’s depth.

Quantitative Evaluation

In addition to reconstructing geometrically complex scenes, we also perform an array of quantitative tests to gauge our system’s performance. We use a high-precision linear translation stage to validate that we can detect and measure 50-micron shifts of a planar object at a distance of more than half a meter from the device, outperforming both existing correlation time-of-flight and interferometric methods. Similarly, we can precisely measure the half-millimeter difference between gauge block thicknesses at a meter’s distance. Details and additional tests can be found in the paper.