Split-Aperture 2-in-1 Computational Cameras

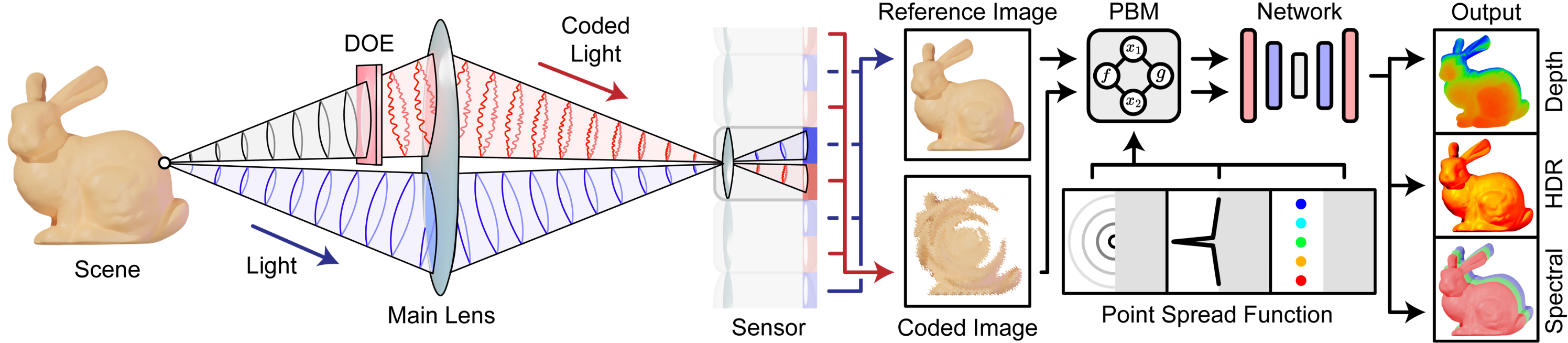

Split-aperture 2-in-1 computational cameras simultaneously capture optically coded and conventional images without increasing the camera size. By splitting the aperture, we modulate one half with a diffractive optical element (DOE) for encoding, while the other half remains unmodulated. Using a dual-pixel sensor, our camera separates the wavefronts, retaining high-frequency content and enabling single-shot high-dynamic-range, hyperspectral, and depth imaging, outperforming existing coded or uncoded methods.

Conventional cameras, while ubiquitous, capture only intensity, whereas computational cameras with co-designed optics and algorithms enable advanced snapshot imaging capabilities such as high-dynamic-range recovery, depth estimation, and hyperspectral imaging. However, optical encoding also introduces challenging inverse reconstruction problems, which often degrade overall photographic quality and confine such cameras to specialized applications.

In this work, we combine these two optical systems in a single camera by splitting the aperture: one half applies application-specific modulation using a diffractive optical element, and the other captures a conventional image. Co-designed with a dual-pixel sensor, this setup captures coded and uncoded images simultaneously, without increasing the physical or computational footprint.

Split-Aperture 2-in-1 Computational Cameras

Zheng Shi, Ilya Chugunov, Mario Bijelic, Geoffroi Côté, Jiwoon Yeom, Qiang Fu, Hadi Amata, Wolfgang Heidrich, Felix Heide

SIGGRAPH 2024

2-in-1 Cameras: Versatility Meets Novel Imaging Modalities

Conventional cameras are highly versatile but are designed primarily to capture a perfect image regardless of the domain, be it imaging for display or imaging as input to a downstream object detector. As a result, their captures are often suboptimal for specific tasks. Over the past twenty years, researchers have explored computational cameras that integrate optics and computational models for specific tasks. These cameras, optimized for particular applications, promise to significantly extend the functionality of traditional general-purpose camera systems. Despite their demonstrated potential, computational cameras remain limited to niche uses such as microscopy. The underlying reconstruction problem is ill-posed, which complicates signal extraction in the presence of sensor and photon noise and often produces artifacts absent in traditional systems.

In this work, we tackle these challenges by leveraging mature dual-pixel sensor technology and splitting the aperture in half, capturing both conventional and coded images at the same time.

Dual-Pixel Sensing

Dual-pixel sensors, increasingly prevalent in DSLRs and smartphones (e.g., the Samsung 50MP ISOCELL GN2) for enhanced autofocus, feature two photodiodes per pixel, each independently capturing light from one half of the aperture. A microlens on each pixel enforces this division, creating a system akin to a miniaturized two-sample light field camera or a stereo system with an extremely small baseline. The dual-pixel split applies to every color channel, so both captures retain full-color information: the color filter array (CFA) is effectively split into two separate CFAs.
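To make the split concrete, here is a minimal 1D sketch of how a split-aperture dual-pixel sensor might form its two captures. It assumes a single scanline, shift-invariant PSFs that each sum to 1, ideal photodiodes without crosstalk, and a hypothetical two-lobe coded PSF; the names `convolve_same` and `dual_pixel_capture` are illustrative and not taken from the paper.

```python
# Minimal 1D sketch of split-aperture dual-pixel image formation.
# Assumptions (illustrative only): one scanline, shift-invariant PSFs that
# each sum to 1, and ideal photodiodes with no crosstalk.

def convolve_same(signal, kernel):
    """Zero-padded 1D convolution, cropped to the length of `signal`."""
    n, k = len(signal), len(kernel)
    half = k // 2
    out = []
    for i in range(n):
        acc = 0.0
        for j in range(k):
            idx = i + half - j
            if 0 <= idx < n:
                acc += signal[idx] * kernel[j]
        out.append(acc)
    return out


def dual_pixel_capture(scene, psf_coded, psf_uncoded):
    """Return (coded, uncoded, combined) captures for one scanline."""
    # Each photodiode only receives light from its half of the aperture,
    # so each half-aperture PSF carries half of the total energy.
    coded = convolve_same(scene, [0.5 * v for v in psf_coded])
    uncoded = convolve_same(scene, [0.5 * v for v in psf_uncoded])
    # Summing both photodiodes gives what a conventional pixel would record.
    combined = [c + u for c, u in zip(coded, uncoded)]
    return coded, uncoded, combined


scene = [0.0, 0.0, 1.0, 0.0, 0.0]   # a single point source
psf_uncoded = [0.0, 1.0, 0.0]       # in-focus, unmodulated half
psf_coded = [0.5, 0.0, 0.5]         # hypothetical DOE-coded half
coded, uncoded, combined = dual_pixel_capture(scene, psf_coded, psf_uncoded)
print(coded)     # [0.0, 0.25, 0.0, 0.25, 0.0]
print(uncoded)   # [0.0, 0.0, 0.5, 0.0, 0.0]
```

Because both captures share one optical axis, the coded and uncoded scanlines are pixel-aligned by construction in this model; only the PSFs differ.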

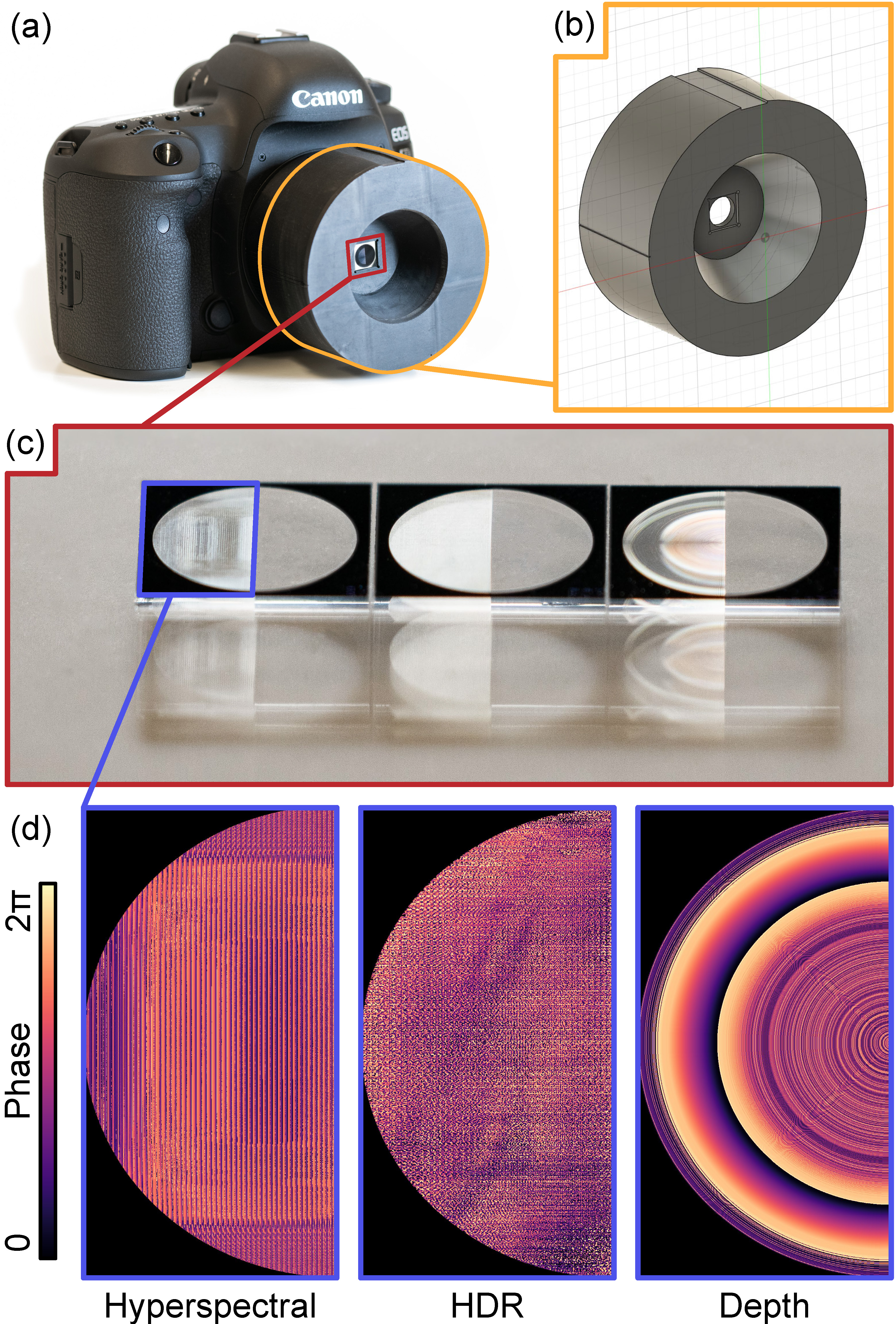

Experimental Prototype

We built an experimental prototype, shown in (a), to evaluate the proposed method. The DOEs, shown in (c), are fabricated from the phase profiles designed for each application, shown in (d), using a 16-level photolithography process. Although the DOE is designed to sit in the aperture plane of the target camera configuration, we instead design and 3D print a DOE holder (b) that positions the DOE adjacent to the lens cover glass, avoiding the need to dissect a commercial multi-element compound lens.
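The 16 levels are the discrete relief heights the photolithography process can produce. As a minimal sketch, assuming a thin-element model, a hypothetical 550 nm design wavelength, and a hypothetical substrate refractive index of 1.46 (neither value is stated on this page), a continuous phase profile could be wrapped, quantized to 16 levels, and converted to etch depths as follows. `quantize_phase` and `etch_depth_nm` are illustrative names.

```python
import math

# Sketch of 16-level phase quantization for a DOE (illustrative only).
# The design wavelength and refractive index used below are assumptions,
# not values reported for the prototype.

def quantize_phase(phase, levels=16):
    """Wrap `phase` (radians) to [0, 2*pi) and snap it to the nearest level."""
    two_pi = 2.0 * math.pi
    wrapped = phase % two_pi                      # Python's % is non-negative here
    step = two_pi / levels
    level = int(round(wrapped / step)) % levels   # a value near 2*pi wraps to level 0
    return level, level * step


def etch_depth_nm(level, levels, wavelength_nm, refractive_index):
    """Relief height that delays the design wavelength by level * 2*pi/levels."""
    phi = level * 2.0 * math.pi / levels
    # Thin-element relation: phi = 2*pi*(n - 1)*h / lambda, solved for h.
    return phi * wavelength_nm / (2.0 * math.pi * (refractive_index - 1.0))


# Hypothetical design: 550 nm design wavelength, substrate index n = 1.46.
for target in (0.3, math.pi, 2.0 * math.pi - 0.01):
    level, q = quantize_phase(target)
    print(level, round(etch_depth_nm(level, 16, 550.0, 1.46), 1))
```

Wrapping before quantizing keeps every height within one 2π period, which is what makes a multi-level diffractive element thin compared with a refractive surface of the same phase.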

Cross-talk Dual-Pixel Calibration

In theory, each photodiode in a dual-pixel sensor independently records light from only one half of the aperture. In practice, off-axis light causes crosstalk, with light intended for one photodiode being captured by the other. Here, (a) illustrates the angle-dependent crosstalk. We calibrate a fixed crosstalk ratio for the central 3072×3072 pixels, as indicated by the colorbar at the top of (b). This enables accurate retrieval of clean coded and uncoded captures across various applications, see (b).
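The page does not spell out the crosstalk model, so the following is only a sketch under an assumed linear, symmetric model: each photodiode keeps a fraction `1 - c` of its intended light and leaks a fraction `c` to its neighbor, with `c` the single calibrated ratio used for the central region. Under that assumption, clean coded and uncoded values follow from inverting a 2×2 mixing matrix per pixel. The function names are hypothetical.

```python
# Sketch of per-pixel crosstalk removal for a dual-pixel sensor, assuming a
# linear, symmetric mixing model with one fixed crosstalk ratio c (0 <= c < 0.5).
#   measured_a = (1 - c) * a + c * b
#   measured_b = c * a + (1 - c) * b

def mix(a, b, c):
    """Forward model: clean half-aperture signals -> measured photodiode values."""
    return (1 - c) * a + c * b, c * a + (1 - c) * b


def unmix(m_a, m_b, c):
    """Invert the 2x2 mixing matrix; its determinant is 1 - 2c."""
    det = 1.0 - 2.0 * c
    if abs(det) < 1e-9:
        raise ValueError("c = 0.5 mixes both halves equally and cannot be inverted")
    a = ((1 - c) * m_a - c * m_b) / det
    b = ((1 - c) * m_b - c * m_a) / det
    return a, b


def unmix_image(coded_raw, uncoded_raw, c):
    """Apply `unmix` to two flattened captures of equal length."""
    pairs = [unmix(ma, mb, c) for ma, mb in zip(coded_raw, uncoded_raw)]
    return [p[0] for p in pairs], [p[1] for p in pairs]


m_a, m_b = mix(0.8, 0.2, 0.1)
print(unmix(m_a, m_b, 0.1))
```

An angle-dependent model would replace the scalar `c` with a per-pixel map; the inversion per pixel stays the same.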

2-in-1 Computational Imaging Applications

We demonstrate the proposed 2-in-1 computational camera with three computational optics applications below: recovery of high dynamic range (HDR) images from optically-encoded streak images, monocular depth imaging using coded depth-from-defocus, and hyperspectral image reconstruction via chromatic aberrations.

Snapshot High Dynamic Range Imaging

The proposed 2-in-1 dual-aperture camera acquires coded HDR information and uncoded LDR information simultaneously: the uncoded capture provides a conventional image whose unsaturated regions are unaffected by optical encoding, while the coded capture specializes in mapping HDR signals onto an LDR sensor. Because the coded half does not need to preserve LDR signals, its learned encoding creates multiple scaled duplicates of overexposed regions across a broader exposure range, effectively combining multiple exposures at several locations along a streak pattern.
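To illustrate why scaled duplicates help, the toy 1D sketch below uses a hand-picked streak PSF (hypothetical `(offset, gain)` taps, not the learned DOE) and a sensor that clips at 1.0. The direct copy of a bright highlight saturates, but a sufficiently attenuated duplicate stays in range, so dividing it by its gain recovers the highlight's radiance. The sketch ignores overlap between duplicates and other scene content.

```python
# Toy 1D illustration of streak-based HDR encoding (not the paper's learned DOE).
# `taps` is a hypothetical streak PSF: (pixel offset, gain) pairs.

def streak_encode(scene, taps, full_well=1.0):
    """Spread each scene value into scaled copies, then clip at the full well."""
    n = len(scene)
    out = [0.0] * n
    for i, value in enumerate(scene):
        for offset, gain in taps:
            j = i + offset
            if 0 <= j < n:
                out[j] += gain * value
    return [min(x, full_well) for x in out]


def recover_highlight(coded, taps, src, full_well=1.0):
    """Estimate radiance at `src` from its strongest unsaturated duplicate.

    Assumes the duplicates of `src` do not overlap other scene content.
    """
    for offset, gain in sorted(taps, key=lambda t: t[1], reverse=True):
        j = src + offset
        if 0 <= j < len(coded) and coded[j] < full_well:
            return coded[j] / gain
    return None  # every duplicate is saturated: radiance exceeds the encodable range


taps = [(0, 0.5), (3, 0.2), (5, 0.04)]   # hypothetical gains spanning 12.5x
scene = [0.0] * 10
scene[2] = 20.0                          # highlight 20x above the clipping level
coded = streak_encode(scene, taps)
print(coded)
print(recover_highlight(coded, taps, src=2))
```

The ratio between the largest and smallest gain sets how far above the clipping level a highlight can still be measured in this toy model.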

Snapshot HDR Example with 2-in-1 Cameras: As our 2-in-1 design captures both coded and uncoded images simultaneously on a single camera, with a single optical axis, we do not need any post-processing to accurately register pixels between these views. We are able to leverage this to directly reconstruct dynamic content with changing colors such as the art piece shown on the left, an otherwise extremely challenging task for traditional stereo and multi-frame burst imaging approaches.

In the following, the top row reports measured PSFs of the prototype with the proposed split-aperture HDR DOE. The next rows evaluate the method on outdoor scenes, comparing our results with ground-truth data obtained through bracketed exposures. The proposed method recovers fine detail in the highlights, whereas the learned LDR-to-HDR method DeepHDR [2] produces incorrect HDR estimates whose image structure and intensity levels deviate significantly from the ground-truth captures.

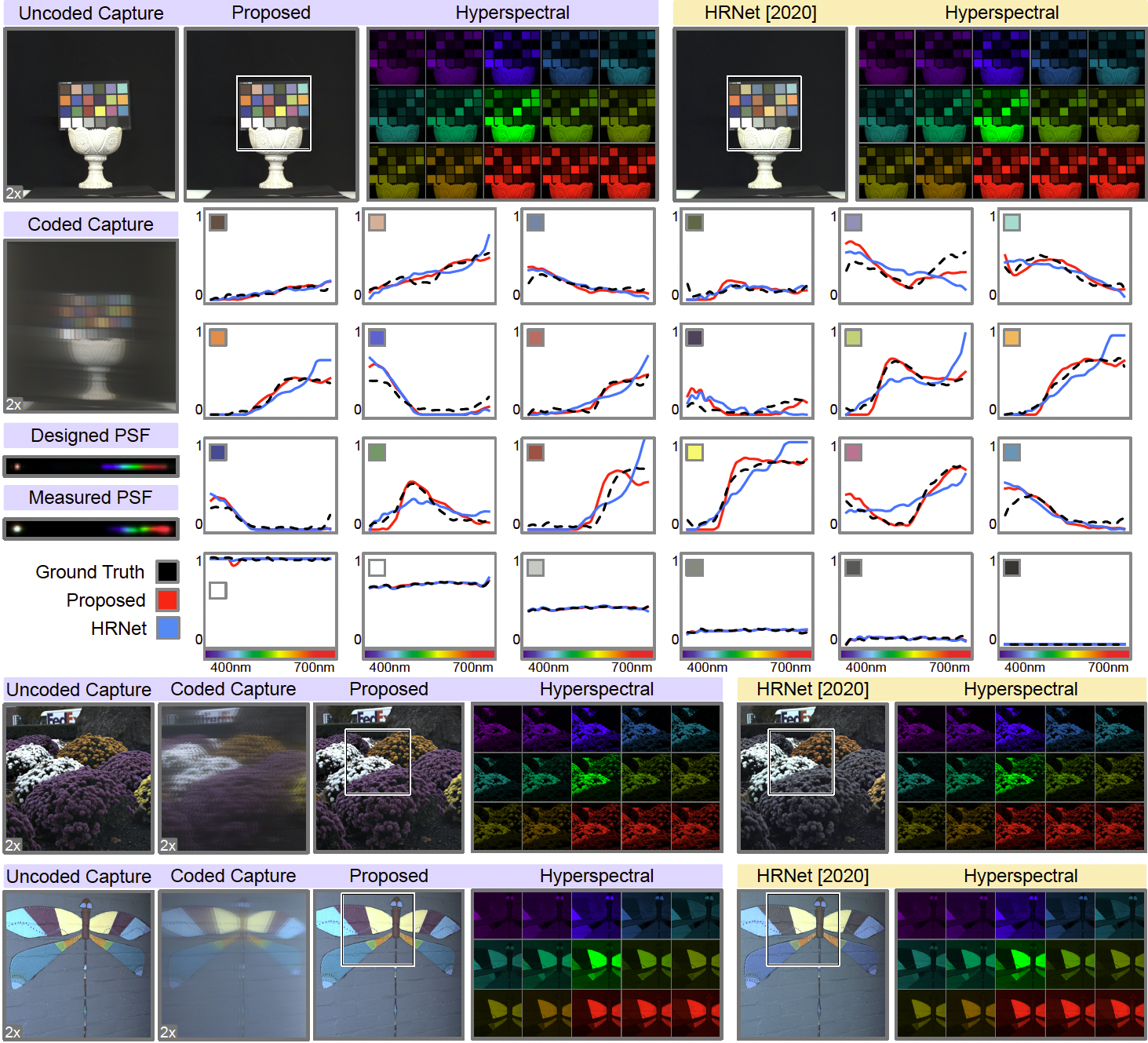

Snapshot Hyperspectral Imaging

Next, we demonstrate a split-aperture camera that encodes 31-channel hyperspectral information into a 3-channel RGB capture by intentionally introducing chromatic aberration. We employ a grating-like phase profile for the coded aperture half to modulate the incident light, inducing a wavelength-dependent lateral shift in the focus point. This allows us to reconstruct the hyperspectral information based on the shift magnitude relative to the aberration-free uncoded capture.
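The wavelength dependence of the shift follows from the grating equation. The sketch below assumes normal incidence, first-order diffraction, and an ideal lens that maps the deflection angle θ to a sensor shift f·tan θ. The 100 µm grating period, 8 mm focal length, and 31 bands at 10 nm spacing from 400 nm to 700 nm are hypothetical values chosen only for illustration. Because the shift grows monotonically with wavelength over this range, it can be inverted back to a wavelength.

```python
import math

# Sketch: wavelength-dependent lateral shift from a grating-like phase profile.
# Assumptions: normal incidence, first diffraction order, ideal lens.

def lateral_shift_um(wavelength_nm, period_um, focal_mm):
    """Sensor-plane shift of the first-order focus relative to the optical axis."""
    sin_theta = (wavelength_nm * 1e-3) / period_um   # grating equation with m = 1
    theta = math.asin(sin_theta)
    return focal_mm * 1e3 * math.tan(theta)          # micrometres


def wavelength_from_shift_nm(shift_um, period_um, focal_mm):
    """Invert `lateral_shift_um` to recover the wavelength."""
    theta = math.atan(shift_um / (focal_mm * 1e3))
    return period_um * math.sin(theta) * 1e3


PERIOD_UM, FOCAL_MM = 100.0, 8.0                # hypothetical design values
bands_nm = [400 + 10 * k for k in range(31)]    # 31 bands, 400-700 nm (assumed)
shifts = [lateral_shift_um(w, PERIOD_UM, FOCAL_MM) for w in bands_nm]
print(f"{shifts[0]:.1f} um at 400 nm, {shifts[-1]:.1f} um at 700 nm")
```

In this idealized model each band lands at a distinct offset from the uncoded, on-axis focus; a real reconstruction must additionally untangle overlapping shifted copies from all bands at once.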

On the left, we display the measured rainbow-like PSFs. Scene 1 at the top shows results in a lab environment, and we include spectral validation plots for all 24 color blocks, with ground-truth spectra obtained via a miniature spectrometer. The subsequent rows validate the method in outdoor settings. The spectral reconstructions from our method align closely with the measured spectral intensities, while HRNet [3], a state-of-the-art RGB-to-HS method, exhibits notable inaccuracies, especially at the spectrum boundaries. This discrepancy is also evident in out-of-lab experiments, where HRNet struggles with color accuracy (see the red and purple images). In the absence of ground-truth RGB captures, we present the uncoded and coded captures at double intensity, with the uncoded capture serving as a pseudo-ground truth in the RGB domain.

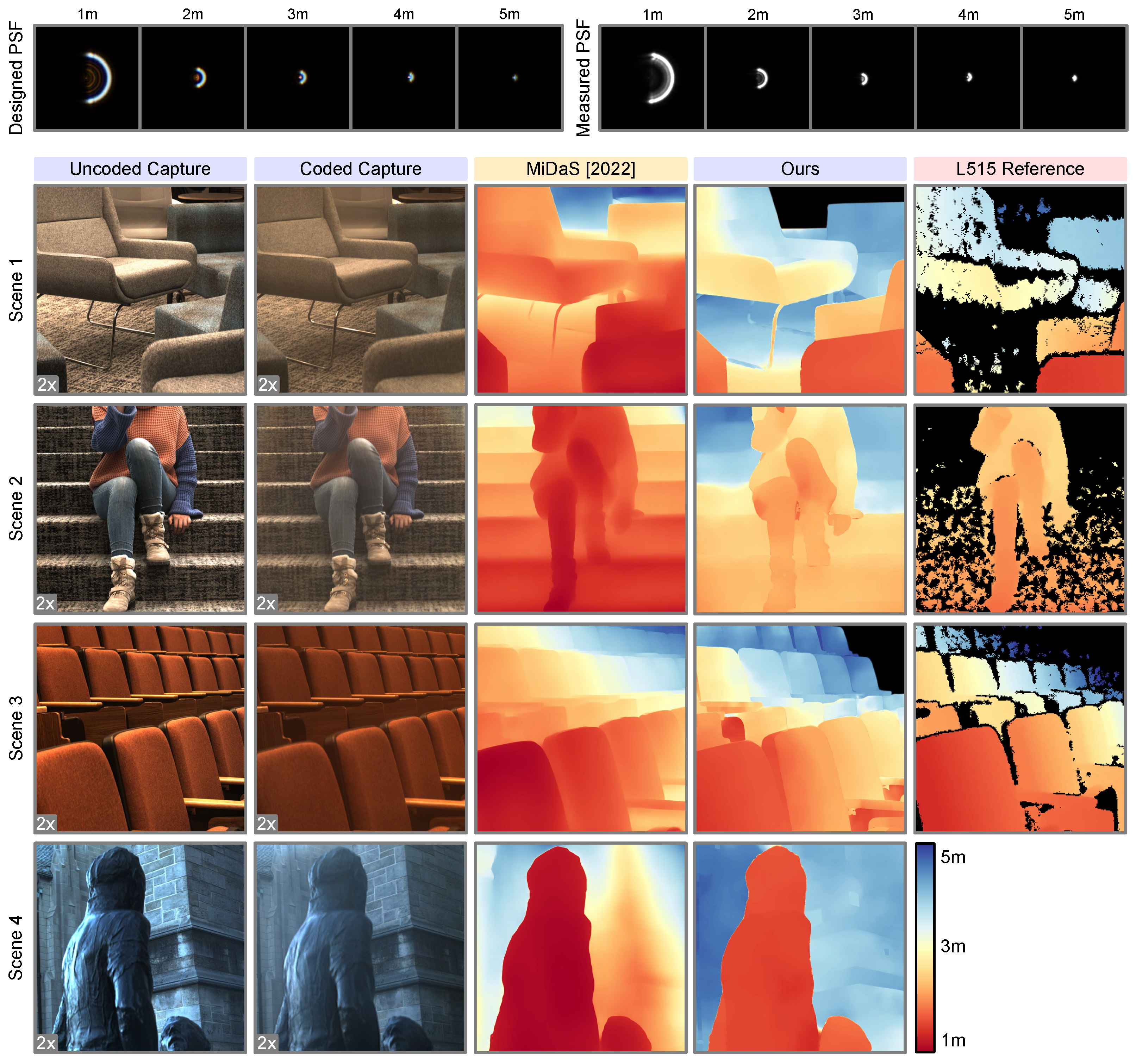

Monocular Depth from Coded Defocus

We showcase depth-from-defocus as our third application, investigating a 2-in-1 computational camera that relies on depth-dependent concentric rings as its target PSF and is additionally conditioned on a simultaneously captured monocular RGB image.
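For intuition on why defocus encodes depth, the thin-lens model gives the blur-circle diameter of a point at distance s for a lens focused at s_f. The sketch below uses that textbook model, not the designed concentric-ring PSF, with a hypothetical 8 mm f/2 lens focused at 2 m, evaluated over the 1 m to 5 m range covered by the prototype's measured PSFs.

```python
# Thin-lens defocus sketch (textbook model, not the paper's ring-shaped DOE PSF).
# Blur-circle diameter: c = A * f * |s - s_f| / (s * (s_f - f)), with A = f / N.

def blur_diameter_um(depth_m, focus_m, focal_mm, f_number):
    """Diameter of the defocus blur circle on the sensor, in micrometres."""
    aperture_mm = focal_mm / f_number
    s_mm, s_f_mm = depth_m * 1e3, focus_m * 1e3
    c_mm = aperture_mm * focal_mm * abs(s_mm - s_f_mm) / (s_mm * (s_f_mm - focal_mm))
    return c_mm * 1e3


for depth in (1.0, 1.5, 2.0, 3.0, 5.0):
    print(depth, round(blur_diameter_um(depth, focus_m=2.0, focal_mm=8.0, f_number=2.0), 2))
```

With these hypothetical values, points at 1.5 m and 3 m produce the same blur diameter: a plain disk blur is ambiguous between depths in front of and behind the focus plane.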

The top row of our results shows the measured depth-dependent encoding PSFs of our prototype for depths from 1 m to 5 m. For each scene, the two leftmost columns display our sensor captures at double intensity, followed by depth reconstructions from different methods. For indoor scenes, we use a solid-state LiDAR camera (RealSense L515) to acquire absolute depth as a ground-truth reference; areas where the L515 could not provide measurements are indicated with a black mask. The depth reconstructions produced by our method closely match the L515 reference depth. In contrast, the monocular depth estimation method MiDaS [4], run on the uncoded capture and rescaled to the target depth range, can only provide a plausible relative depth map and often incorrectly merges unconnected objects into a single, continuous depth profile.
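The page does not specify how the MiDaS output was rescaled or how depth accuracy was scored. As one plausible sketch, assuming the relative prediction has already been converted so that larger values mean farther (MiDaS itself predicts relative inverse depth), a min-max mapping onto the 1 m to 5 m range and an error that skips pixels without LiDAR returns (encoded here as 0, an assumption) could look like this. Both helpers are hypothetical.

```python
# Hypothetical evaluation helpers; the rescaling and metric are assumptions.

def rescale_to_range(relative, lo_m=1.0, hi_m=5.0):
    """Min-max map a relative depth map (larger = farther) onto [lo_m, hi_m]."""
    r_min, r_max = min(relative), max(relative)
    if r_max == r_min:
        return [lo_m] * len(relative)  # flat prediction: no depth ordering to map
    scale = (hi_m - lo_m) / (r_max - r_min)
    return [lo_m + (r - r_min) * scale for r in relative]


def masked_mae(pred_m, ref_m, invalid=0.0):
    """Mean absolute error over pixels where the reference has a measurement."""
    pairs = [(p, r) for p, r in zip(pred_m, ref_m) if r != invalid]
    if not pairs:
        raise ValueError("reference has no valid pixels")
    return sum(abs(p - r) for p, r in pairs) / len(pairs)


pred = rescale_to_range([0.0, 0.5, 1.0])
lidar = [1.5, 0.0, 4.0]   # 0.0 marks a pixel the LiDAR could not measure
print(pred, masked_mae(pred, lidar))
```

A min-max mapping fixes the range but not the shape of the depth profile, so a relative estimate that merges separate objects stays merged after rescaling.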

Related Work

[1] Zheng Shi, Yuval Bahat, Seung-Hwan Baek, Qiang Fu, Hadi Amata, Xiao Li, Praneeth Chakravarthula, Wolfgang Heidrich, and Felix Heide. Seeing Through Obstructions with Diffractive Cloaking. SIGGRAPH 2022

[2] Marcel Santana Santos, Tsang Ing Ren, and Nima Khademi Kalantari. Single image HDR reconstruction using a CNN with masked features and perceptual loss. SIGGRAPH 2020

[3] Yuzhi Zhao, Lai-Man Po, Qiong Yan, Wei Liu, and Tingyu Lin. Hierarchical regression network for spectral reconstruction from RGB images. CVPR 2020

[4] René Ranftl, Katrin Lasinger, David Hafner, Konrad Schindler, and Vladlen Koltun. Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer. TPAMI 2020