Lidar Waveforms are Worth 40x128x33 Words

Dominik Scheuble, Hanno Holzhüter, Steven Peters, Mario Bijelic, Felix Heide

ICCV 2025 (Highlight)

Lidar has become crucial for autonomous driving, providing high-resolution 3D scans that are key to accurate scene understanding. As shown on the right, lidar sensors measure time-resolved full waveforms of the returning laser light, which a subsequent digital signal processor (DSP) converts into point clouds by identifying peaks in each waveform. Conventional automotive lidar DSP pipelines process each waveform individually and ignore potentially valuable context from neighboring waveforms. As a result, lidar point clouds are prone to artifacts in low signal-to-noise ratio (SNR) regions, near highly reflective objects, and in environmental conditions such as fog. In this work, we propose a neural DSP that jointly processes full waveforms with a transformer architecture that leverages features from adjacent waveforms to generate high-fidelity multi-echo point clouds. Trained on synthetic and real data, the method improves Chamfer distance by 32 cm and 20 cm compared to conventional peak finding and existing transient imaging approaches, respectively. This translates to maximum range improvements of up to 17 m in fog and 14 m in challenging real-world conditions.
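For context, the conventional per-waveform processing described above can be sketched as a local-maximum detector followed by the round-trip time-of-flight conversion r = c·t/2. This is a minimal sketch under stated assumptions, not any manufacturer's DSP: the fixed amplitude threshold, the bin width `bin_width_s`, the `max_echoes` cap, and the function name `conventional_peak_finding` are all hypothetical. Note that each waveform is handled in isolation, which is exactly the limitation the neural DSP addresses.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def conventional_peak_finding(waveform, bin_width_s, threshold, max_echoes=3):
    """Hypothetical per-waveform peak finder: local maxima above a fixed threshold.

    waveform:    1D array of intensity samples for ONE pixel (no neighbor context).
    bin_width_s: assumed duration of one time bin in seconds.
    threshold:   assumed fixed amplitude threshold.
    max_echoes:  assumed number of returns kept per pixel.
    Returns the ranges (in meters) of the detected echoes, sorted by distance.
    """
    w = np.asarray(waveform, dtype=float)
    # A bin counts as a peak if it exceeds the threshold and its neighbors.
    interior = w[1:-1]
    is_peak = (interior > threshold) & (interior >= w[:-2]) & (interior > w[2:])
    peak_bins = np.flatnonzero(is_peak) + 1
    # Keep the strongest echoes, then order them by arrival time.
    strongest = peak_bins[np.argsort(w[peak_bins])[::-1][:max_echoes]]
    strongest = np.sort(strongest)
    # Round-trip time of flight to range: r = c * t / 2.
    return SPEED_OF_LIGHT * strongest * bin_width_s / 2.0
```

With a 1 ns bin width, a peak in bin 20 maps to roughly 3.0 m. A weak return attenuated by fog that falls below the fixed threshold is simply lost, since no neighboring waveform can vouch for it.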


Reconstruction in Challenging Conditions

Unlike conventional lidar DSPs, our neural DSP uses waveform shape features and spatial context to improve performance, especially in difficult conditions. To demonstrate this, we collected a dedicated test set in a weather chamber under heavy fog. As shown below, standard on-device processing struggles with backscatter and missing object points, whereas our neural DSP significantly reduces these effects. As a result, it extends the sensor's maximum range by nearly 30 meters compared to conventional DSPs.

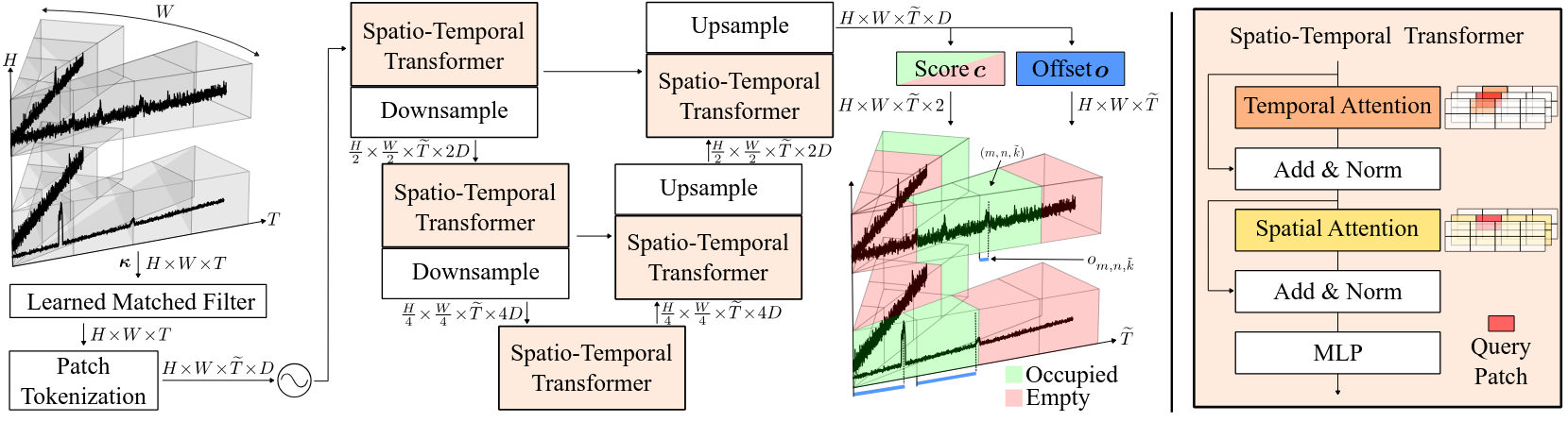

Neural DSP

We propose a neural DSP that formulates peak finding as a classification problem. As shown below, waveforms are divided along the temporal dimension into multiple patches. The waveform patches are then processed by dedicated spatio-temporal transformers arranged in a U-Net architecture. Each spatio-temporal transformer first performs temporal attention for each pixel individually and then computes spatial attention using a Swin (shifted-window) approach. For each patch, the neural DSP predicts a classification score indicating whether the patch is empty or occupied, and regresses a distance that locates the peak within the patch. By thresholding the classification score, the neural DSP can output a multi-echo point cloud at inference time.
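The patch formulation and the thresholded decoding step can be illustrated with a small NumPy sketch. It omits the transformer entirely and assumes its outputs are already available as per-patch occupancy scores and in-patch peak offsets; the names `patchify` and `decode_multi_echo`, the (H, W, T) array layout, the 0.5 threshold, and the bin-to-meter factor are illustrative assumptions, not the published implementation.

```python
import numpy as np


def patchify(waveforms, patch_len):
    """Split (H, W, T) waveforms into (H, W, T // patch_len, patch_len) temporal patches.

    Trailing bins that do not fill a whole patch are dropped (an assumption).
    """
    h, w, t = waveforms.shape
    n = t // patch_len
    return waveforms[:, :, : n * patch_len].reshape(h, w, n, patch_len)


def decode_multi_echo(scores, offsets, patch_len, bin_to_m, threshold=0.5):
    """Turn per-patch classification scores and regressed offsets into echoes.

    scores:  (H, W, N) assumed probability that patch n of pixel (h, w) holds a peak.
    offsets: (H, W, N) assumed peak position inside each patch, in bins.
    Returns (row, col, range_m) tuples; a pixel may yield several echoes.
    """
    # Every patch above the threshold becomes one echo, so multi-echo output is free.
    rows, cols, patches = np.nonzero(scores > threshold)
    # Absolute bin = patch start + in-patch offset; then convert bins to meters.
    bins = patches * patch_len + offsets[rows, cols, patches]
    ranges = bins * bin_to_m
    return list(zip(rows.tolist(), cols.tolist(), ranges.tolist()))
```

Because occupancy is decided per patch rather than by a single argmax per waveform, two occupied patches in the same pixel (for example, fog backscatter followed by a car) naturally yield two echoes.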

Reconstruction Results

We evaluate our neural DSP on waveform data extracted from a production-grade automotive lidar in various challenging conditions. In the first row, our neural DSP is the only method that delivers a satisfactory scene reconstruction, suppressing backscatter and recovering heavily attenuated returns in fog. In clear-weather scenes, our neural DSP reconstructs low-reflectivity objects such as a black car (second row) with sharp contours, while other methods produce oversmoothed results or fail to reconstruct the objects entirely. Furthermore, our method recovers attenuated returns from distant objects (third row) that are challenging for the baseline methods. Finally, it corrects distance errors and suppresses blooming artifacts from, e.g., retroreflective traffic signs, where the baseline methods produce clusters of false points.

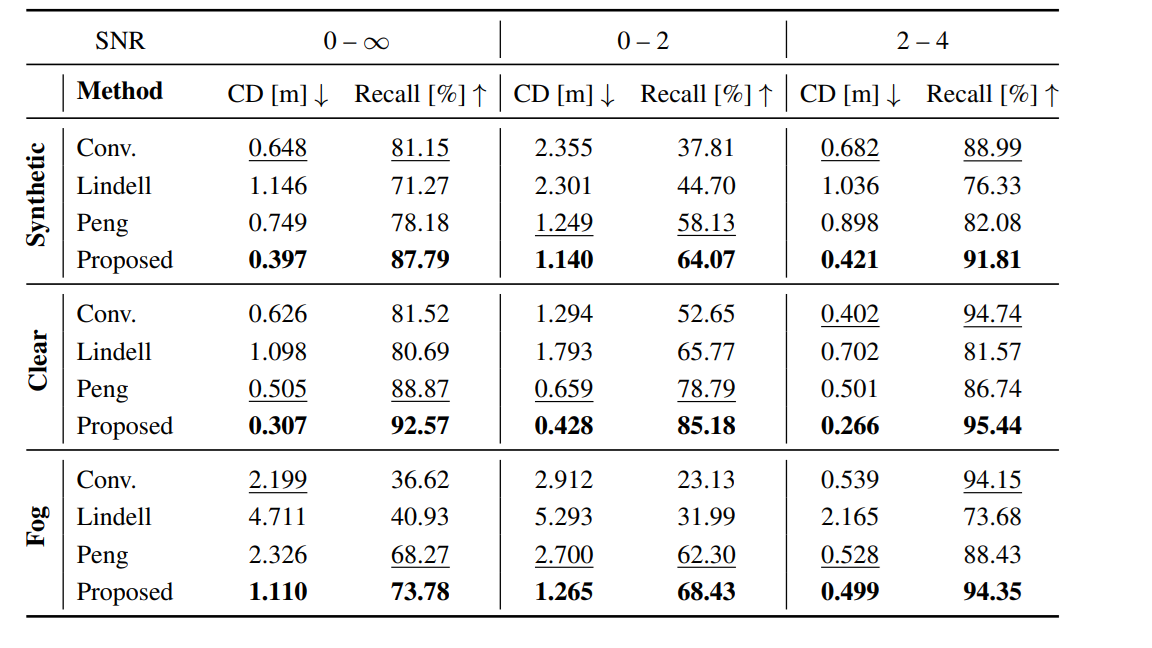

Quantitative Results

As shown in the table on the right, we use Chamfer distance (CD) and recall to assess the quality of the predicted point clouds. To assess robustness in challenging conditions, we evaluate two signal-to-noise ratio (SNR) ranges: difficult low-SNR conditions (0–2) and easier high-SNR conditions (2–4). The quantitative results confirm the qualitative findings above. The existing transient imaging methods of Lindell et al. and Peng et al. improve reconstruction in low-SNR (0–2) scenarios. At high SNR (2–4), however, their denoising strategy offers no advantage over conventional peak finding (Conv.). In contrast, the proposed neural DSP outperforms the baselines across all SNR ranges and excels in fog. Notably, the neural DSP never saw real fog captures during training and was trained only on synthetic fog data, validating the benefit and high degree of realism of our waveform simulation model.

As shown on the left, our neural DSP reconstructs fine scene details close to the ground-truth scan, as the zoom-ins indicate. In the top row, our method recovers fine details in challenging foggy conditions, such as a tire that is clearly distinguishable from the road, in contrast to conventional peak finding. Similarly, in the bottom row, our method fully recovers the front of a distant vehicle that conventional DSP processing misses entirely.
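The two metrics used in this evaluation can be sketched as follows. This page does not state the exact Chamfer convention or the recall distance threshold, so the sketch assumes one common choice: the symmetric sum of mean nearest-neighbor Euclidean distances, and recall as the fraction of ground-truth points that have a prediction within a hypothetical tolerance `tol`. Some works average the two directions or use squared distances instead, which changes the absolute numbers.

```python
import numpy as np


def nearest_distances(src, dst):
    """Euclidean distance from each point in src (N, 3) to its nearest point in dst (M, 3).

    Brute force O(N * M); a KD-tree would be used for full-size scans.
    """
    diff = src[:, None, :] - dst[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)


def chamfer_distance(pred, gt):
    """Symmetric Chamfer distance (assumed convention: sum of both directional means)."""
    return nearest_distances(pred, gt).mean() + nearest_distances(gt, pred).mean()


def recall(pred, gt, tol=0.1):
    """Fraction of ground-truth points with a predicted point within tol meters (tol is assumed)."""
    return float((nearest_distances(gt, pred) <= tol).mean())
```

The pred-to-gt term penalizes false points such as fog backscatter or blooming, while the gt-to-pred term and recall penalize missed returns, so a method must do well on both to score well.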

Super-Resolution Waveform Cues

Current lidar sensors have significantly lower resolution than, e.g., cameras. However, the full waveform contains information that can increase the resolution without requiring more pixels. For example, multiple peaks in a waveform suggest that a higher-resolution lidar with smaller beam divergence would likely resolve them as distinct points. Conversely, a single strong peak indicates that a higher-resolution system would detect multiple points at the same distance.

As shown on the right, extending our neural DSP to exploit these cues and render super-resolution point clouds helps distinguish small hazardous items from the road. The best-performing baseline, ILN, suffers from artifacts and flying pixels.
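The waveform cues described in this section can be made concrete with a toy rule for a single pixel. This is only a hand-written illustration of the reasoning, not the learned super-resolution extension: the function name `subpixel_ranges`, the number of sub-beams `n_sub`, the `strong_amplitude` threshold, and the handling of a single weak peak are all hypothetical.

```python
def subpixel_ranges(echo_ranges, echo_amplitudes, n_sub=4, strong_amplitude=0.5):
    """Toy rule: guess which ranges a finer-resolution lidar would measure in one footprint.

    echo_ranges:      ranges (m) of the peaks found in this pixel's waveform.
    echo_amplitudes:  matching peak amplitudes (assumed normalized to [0, 1]).
    n_sub:            hypothetical number of sub-beams per original beam.
    strong_amplitude: hypothetical amplitude above which one surface is assumed to fill the footprint.
    """
    if len(echo_ranges) == 0:
        return []
    if len(echo_ranges) > 1:
        # Several peaks: the footprint straddles surfaces at different depths,
        # which a narrower beam would likely resolve as separate points.
        return sorted(echo_ranges)
    r, a = echo_ranges[0], echo_amplitudes[0]
    if a >= strong_amplitude:
        # One strong peak: a single surface likely fills the footprint,
        # so every sub-beam would return the same distance.
        return [r] * n_sub
    # One weak peak: ambiguous (partial coverage or low reflectivity); keep one point.
    return [r]
```

A fixed per-pixel rule like this cannot decide which sub-beam a given echo belongs to; that requires spatial context from neighboring waveforms, which is what the learned approach can exploit.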

Driving Results

As our method outperforms conventional DSPs, especially in harsh conditions, and produces high-fidelity point clouds in driving scenarios, we hope that this work encourages lidar manufacturers to open up the sensing pipelines of next-generation sensors, making them more programmable and enabling end-to-end scene understanding in future work.
