Collaborative On-Sensor Array Cameras

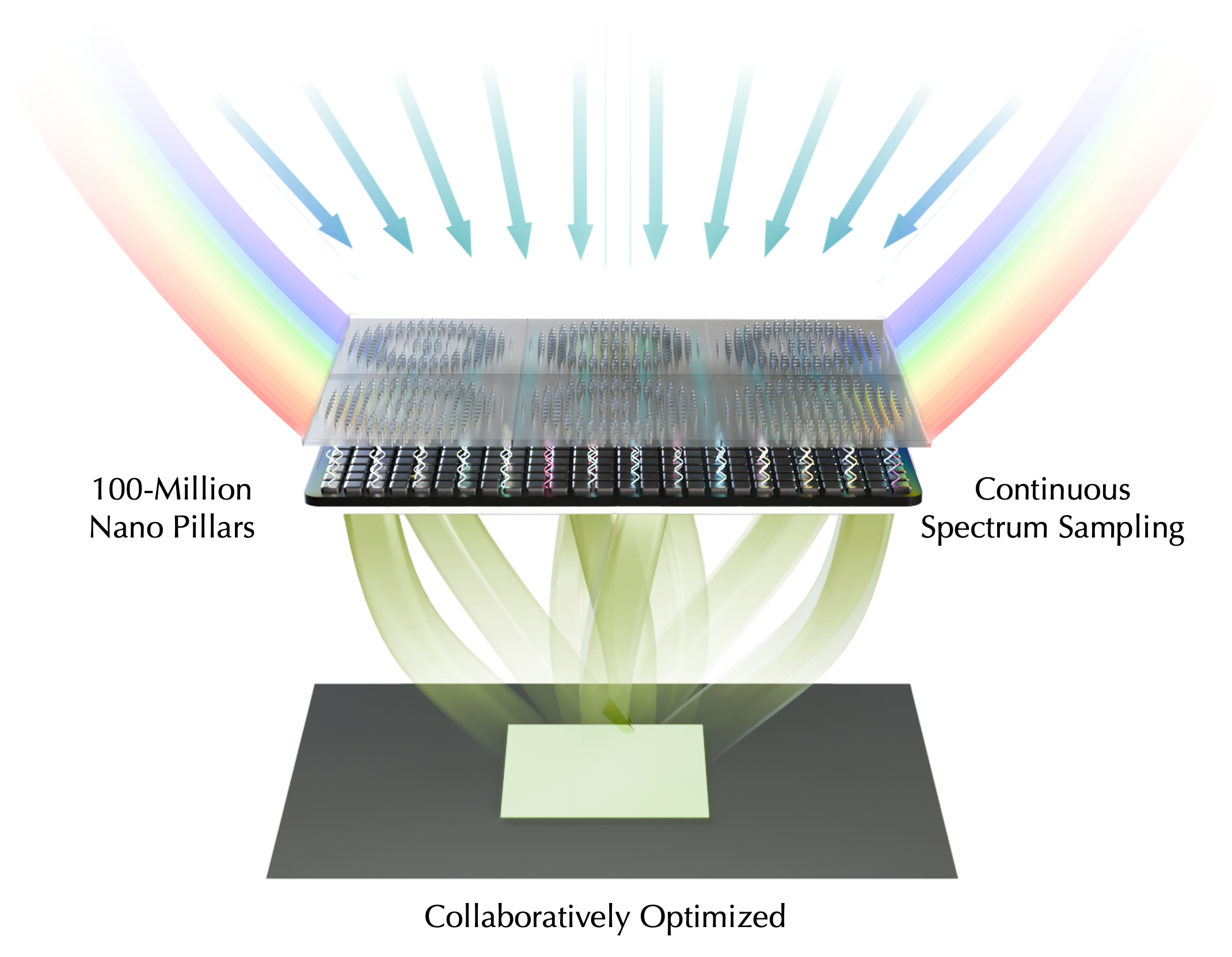

We investigate a collaborative array of metasurface elements that are jointly learned to perform broadband imaging. The array features 100 million nanoposts that are end-to-end optimized over the full visible spectrum, a design task that existing inverse design methods and learning approaches cannot support due to memory and compute limitations. We introduce a distributed learning method to tackle this challenge. It allows us to optimize a large parameter array along with a learned meta-atom proxy and a non-generative reconstruction method that is parallax-aware and noise-aware.

Overall, we find that a collaboratively optimized metalens array—just 3.6 mm above an off-the-shelf RGB sensor—enables high-quality, real-time, hallucination-free broadband imaging in an ultra-compact form factor. By delivering high-fidelity, on-sensor, video-rate operation, this work challenges the long-standing assumption that meta-optics cameras are impractical for real-world broadband imaging due to speed and quality constraints.

Jipeng Sun, Kaixuan Wei, Thomas Eboli, Congli Wang, Cheng Zheng, Zhihao Zhou, Arka Majumdar, Wolfgang Heidrich, Felix Heide

SIGGRAPH 2025

On-Sensor Video-Rate Broadband Metalens Camera

Prior work on broadband metalens imaging has predominantly focused on improving individual metalenses, yet single-element diffractive optics remain fundamentally limited by chromatic dispersion. In this work, we collaboratively optimized a metalens array of 100 million nano-pillars across the whole visible spectrum. This makes an on-sensor, real-time, high-fidelity, broadband metalens camera possible.

Collaborative Broadband Lens Array

We densely sampled the incident spectrum from 400 nm to 700 nm at 0.5 nm intervals to compute the point-spread functions (PSFs) of each sub-lens in our array. We devised an end-to-end design method to jointly optimize the meta-optic phase profiles and the downstream reconstruction algorithm. The resulting sub-lens PSFs exhibit complementary, wavelength-dependent aberrations that mutually compensate: when combined, these elements produce a composite PSF whose footprint remains small across the entire visible band.
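The idea of complementary chromatic behavior can be illustrated with a toy geometric model. The sketch below is not the design method described here: it assumes each sub-lens is a simple diffractive lens whose focal length scales as f(λ) = f0 · λ0 / λ, and the six design wavelengths are hypothetical values chosen only for illustration. The wavelength grid, focal length, and aperture are taken from the text.

```python
import numpy as np

# Wavelength grid from the text: 400 nm to 700 nm at 0.5 nm steps (601 samples).
wavelengths_nm = np.linspace(400.0, 700.0, 601)

FOCAL_MM = 3.6      # sensor distance / nominal focal length (from the text)
APERTURE_MM = 1.5   # sub-lens diameter (from the text)


def blur_diameter_mm(design_nm, wavelengths_nm):
    """Geometric blur-spot diameter on a sensor placed at FOCAL_MM.

    Assumes an ideal diffractive lens focused at `design_nm`, whose focal
    length at other wavelengths is f = FOCAL_MM * design_nm / wavelength.
    """
    focal = FOCAL_MM * design_nm / wavelengths_nm
    # Similar triangles: the converging cone has diameter D * |s - f| / f at s.
    return APERTURE_MM * np.abs(focal - FOCAL_MM) / focal


# Hypothetical design wavelengths for six sub-lenses (illustrative only).
design_nm = np.array([425.0, 475.0, 525.0, 575.0, 625.0, 675.0])
blur = np.stack([blur_diameter_mm(d, wavelengths_nm) for d in design_nm])

# Worst-case blur of a single lens designed at 525 nm.
single_worst = blur[2].max()
# Worst-case blur when the sharpest sub-lens is available at every wavelength.
array_worst = blur.min(axis=0).max()

print(f"single lens worst-case blur: {single_worst:.3f} mm")
print(f"array worst-case blur:       {array_worst:.3f} mm")
```

In this idealized picture, every wavelength is close to the design wavelength of at least one sub-lens, so the worst-case blur of the array is much smaller than that of any single element. The learned array achieves its complementarity through optimization rather than through fixed design wavelengths.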

End-to-End Collaborative Imaging

We optimized the metalens array collaboratively through a differentiable, spatially varying image formation model and a reconstruction algorithm. The forward model computes the PSFs with a neural metasurface proxy spanning the complete visible spectrum at wide incident angles, with computations distributed across multiple GPUs. After a two-step alignment that compensates for parallax, we reconstruct the full visible spectrum at the resolution of the input sub-images, using a multi-image inverse filter that combines spectral information across the six sub-lens measurements. The whole pipeline is fully differentiable, enabling end-to-end optimization of the collaborative nanophotonic imaging system.
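As a rough illustration of what a multi-image inverse filter can look like, the sketch below implements a standard multi-frame Wiener-style filter in the Fourier domain. It is a minimal example under stated assumptions, not the reconstruction used in this work: the function names, the Gaussian PSFs, the regularization constant `reg`, and the circular-convolution model are all choices made for this illustration, and the measurements are assumed to be already aligned.

```python
import numpy as np


def gaussian_psf(size, sigma):
    """Normalized Gaussian PSF centered at index (0, 0) for circular convolution."""
    coords = np.fft.fftfreq(size) * size  # 0, 1, ..., -2, -1
    yy, xx = np.meshgrid(coords, coords, indexing="ij")
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()


def multi_image_wiener(measurements, psfs, reg=1e-3):
    """Fuse K aligned, blurred images (K, H, W) given their PSFs (K, H, W).

    Estimate: X = sum_k conj(H_k) Y_k / (sum_k |H_k|^2 + reg).
    """
    Y = np.fft.fft2(measurements, axes=(-2, -1))
    H = np.fft.fft2(psfs, axes=(-2, -1))
    numerator = np.sum(np.conj(H) * Y, axis=0)
    denominator = np.sum(np.abs(H) ** 2, axis=0) + reg
    return np.real(np.fft.ifft2(numerator / denominator))


# Synthetic test: one scene seen through six hypothetical blur kernels.
rng = np.random.default_rng(0)
scene = rng.random((64, 64))
sigmas = [0.8, 1.0, 1.2, 1.5, 1.8, 2.0]  # illustrative PSF widths in pixels
psfs = np.stack([gaussian_psf(64, s) for s in sigmas])

blurred = np.real(np.fft.ifft2(np.fft.fft2(scene) * np.fft.fft2(psfs, axes=(-2, -1))))
measurements = blurred + rng.normal(0.0, 0.01, size=blurred.shape)

fused = multi_image_wiener(measurements, psfs)
err_fused = np.sqrt(np.mean((fused - scene) ** 2))
err_blurred = np.sqrt(np.mean((measurements.mean(axis=0) - scene) ** 2))
print(f"RMSE of averaged blurred inputs: {err_blurred:.4f}")
print(f"RMSE of fused estimate:          {err_fused:.4f}")
```

Because the denominator sums the squared frequency responses of all six PSFs, a frequency that one sub-image attenuates strongly can still be recovered from another that preserves it. Every step is a differentiable array operation, which is what makes a filter of this kind suitable for end-to-end training.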

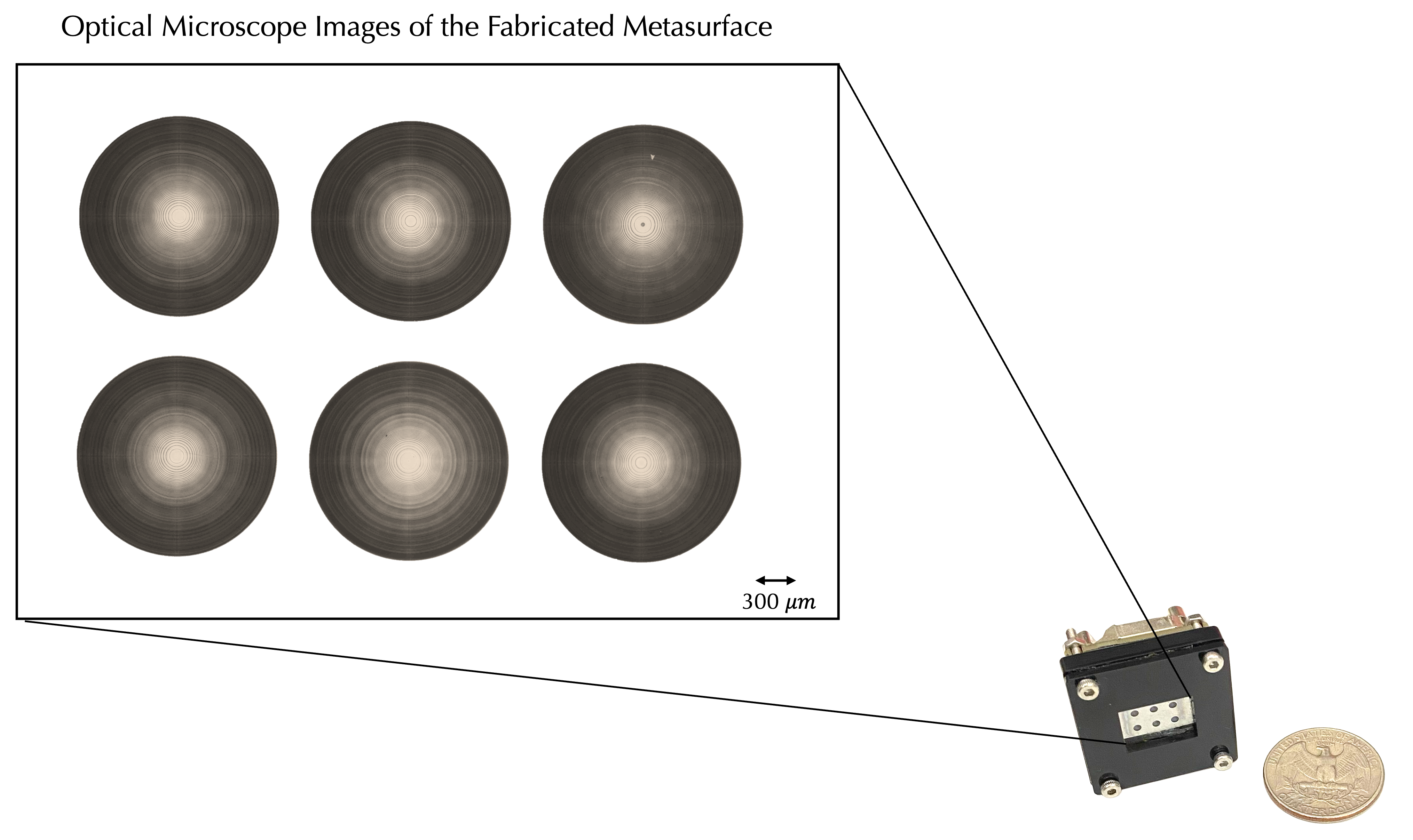

On-Sensor Experimental Prototype

We fabricated the designed metasurface through an E-beam lithography process and attached it directly above an off-the-shelf RGB sensor with a 3D-printed frame. On the left, we show the optical microscope images of the fabricated array and the experimental prototype. The fabricated metalens array comprises a 2 × 3 grid of lenses, each with a 3.6 mm focal length, 3.57 mm pitch, and 1.5 mm diameter. In total, the array measures about 7.1 mm × 10.7 mm, roughly half the diameter of a U.S. quarter (24.26 mm). Each metalens produces a subimage of approximately 3.5 mm × 3.5 mm, covering a FOV of 50° × 50°.
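The quoted dimensions can be cross-checked with a few lines of arithmetic. The sketch below assumes a simple pinhole model for the field of view, which is an approximation introduced here and not a statement about how the FOV was measured.

```python
import math

ROWS, COLS = 2, 3      # grid layout from the text
PITCH_MM = 3.57        # lens-to-lens pitch
FOCAL_MM = 3.6         # focal length of each metalens
DIAMETER_MM = 1.5      # aperture diameter of each metalens
SUBIMAGE_MM = 3.5      # side length of each subimage on the sensor

# Array footprint: number of lenses along each axis times the pitch.
array_short_mm = ROWS * PITCH_MM
array_long_mm = COLS * PITCH_MM

# Pinhole-model field of view for a subimage of width SUBIMAGE_MM.
fov_deg = 2.0 * math.degrees(math.atan((SUBIMAGE_MM / 2.0) / FOCAL_MM))

# f-number implied by the focal length and aperture diameter.
f_number = FOCAL_MM / DIAMETER_MM

print(f"array footprint: {array_short_mm:.2f} mm x {array_long_mm:.2f} mm")
print(f"pinhole FOV:     {fov_deg:.1f} degrees")
print(f"f-number:        f/{f_number:.1f}")
```

The footprint matches the stated 7.1 mm × 10.7 mm, and the pinhole estimate of about 52° is in line with the approximate 50° × 50° field of view.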

Real-Time High-Fidelity Reconstruction without Hallucination

Our camera prototype improves on existing flat cameras and on metalens cameras that use diffusion-based image reconstruction, in terms of accuracy, speed, and the absence of hallucinated content in the reconstructed results. We recover details such as the face of the person and the polka dots on the book, whereas the compared method blurs the face and mangles the dots on the book.

Robust Real-World Reconstruction in Diverse Conditions

We conducted real-world experiments across diverse and challenging conditions, including variations in illumination, depth parallax, and the presence of novel objects. We captured scenes featuring small text, human faces, significant depth variations, and low-contrast textures. These scenes were recorded under a wide range of illumination conditions such as low light, artificial light, direct sunlight, and intense localized light sources. Below are representative measurement and reconstruction results, alongside aligned comparison measurements from a reference camera with a physical length of 50 mm.

Video-Rate Capture & Reconstruction

Our reconstruction runs at real-time rates. Compared to the diffusion-based reconstruction methods that take more than a minute to process a single image, our technique is 2000 times faster and runs at 35 FPS on the same test platform. Both approaches are implemented in PyTorch and do not leverage further optimizations. We rely on fast FFT-based inverse filtering with a subsequent denoising network that is lightweight since fusing six images already reduces noise.
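The noise benefit of fusing six images can be illustrated with a small simulation. The sketch below assumes independent, zero-mean Gaussian noise in each sub-image and uses plain averaging as a stand-in for the actual fusion step. Under those assumptions the noise standard deviation drops by about √6 ≈ 2.45. The noise level and image size are arbitrary illustrative values.

```python
import numpy as np

rng = np.random.default_rng(0)

NUM_VIEWS = 6      # six sub-images, as in the 2 x 3 array
NOISE_STD = 0.05   # illustrative per-image noise level (assumption)

clean = rng.random((128, 128))
# Six noisy observations of the same (already aligned) scene.
views = clean + rng.normal(0.0, NOISE_STD, size=(NUM_VIEWS, 128, 128))

# Plain averaging as a simple stand-in for multi-image fusion.
fused = views.mean(axis=0)

single_noise = np.std(views[0] - clean)
fused_noise = np.std(fused - clean)
ratio = single_noise / fused_noise

# Time available per frame at the reported 35 FPS.
frame_budget_ms = 1000.0 / 35.0

print(f"noise reduction factor: {ratio:.2f} (sqrt(6) = {np.sqrt(6):.2f})")
print(f"per-frame budget at 35 FPS: {frame_budget_ms:.1f} ms")
```

With less residual noise after fusion, the subsequent denoiser has less work to do, which is consistent with keeping it lightweight enough to fit in a per-frame budget of under 30 ms.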

Indoor video reconstruction in which a toy snake and a toy tractor were moved in the foreground, with a whiteboard with colorful text in the background. Our video reconstruction retained sharp details for both foreground and background irrespective of depth parallax.

Although tested on a complex dynamic scene with various real-world broadband illuminants, including sunlight, shop fixtures, and car headlights, our camera prototype reconstructed the scene consistently and robustly.

In an indoor scene of a man flipping through a book of human faces and polka dots—later joined by another man holding a colorful basketball—the camera accurately captures facial details, the crisp round dots, and the ball’s vivid hues.
