Driving Datasets

Reliable perception in all weather conditions remains one of the most critical challenges in autonomous driving. Adverse weather such as snow, heavy rain, and fog can severely degrade the ability of current systems to sense and interpret their surroundings. This has long been considered one of the last remaining technical challenges preventing self-driving cars from reaching the market. To address this, we present a suite of multimodal datasets designed to promote robust environmental perception under a wide range of conditions. An overview of the datasets is presented below:

Seeing Through Fog

Novel multi-modal dataset with 12,000 samples under different weather and illumination conditions and 1,500 measurements acquired in a fog chamber. The dataset also contains accurate human annotations: objects are labeled with 2D and 3D bounding boxes, and frame tags describe the weather, daytime and street condition.

Gated2Depth

Imaging framework which converts three images from a gated camera into high-resolution depth maps with depth accuracy comparable to pulsed lidar measurements.

Gated2Gated

Self-supervised imaging framework that estimates absolute depth maps from three gated images and uses cycle and temporal consistency as training signals. This work extends the Seeing Through Fog dataset with synchronized temporal history frames for each keyframe. Each keyframe contains synchronized lidar data and fine-grained scene and object annotations.

Pixel Accurate Depth Benchmark

Evaluation benchmark for depth estimation and completion under varying weather conditions using high-resolution depth.

Long Range Stereo

Novel long-range multimodal dataset with 107,000+ samples captured over 1,000 km in Southern Germany under diverse lighting and weather conditions. Collected using synchronized LiDAR, RGB and gated NIR cameras, the dataset includes stereo gated images (three active and two ambient captures), stereo RGB images and projected ground-truth LiDAR depth.

Long Range Stereo Extension

Extended Long-Range Stereo Dataset featuring calibrated and synchronized RCCB stereo captures, along with accumulated and refined LiDAR ground truth for depth evaluation up to 220 meters.

Polarization Wavefront Lidar Dataset

Simulated and real-world dataset of polarized wavefront lidar from an automotive-grade sensor.

Object detection dataset in challenging adverse weather conditions

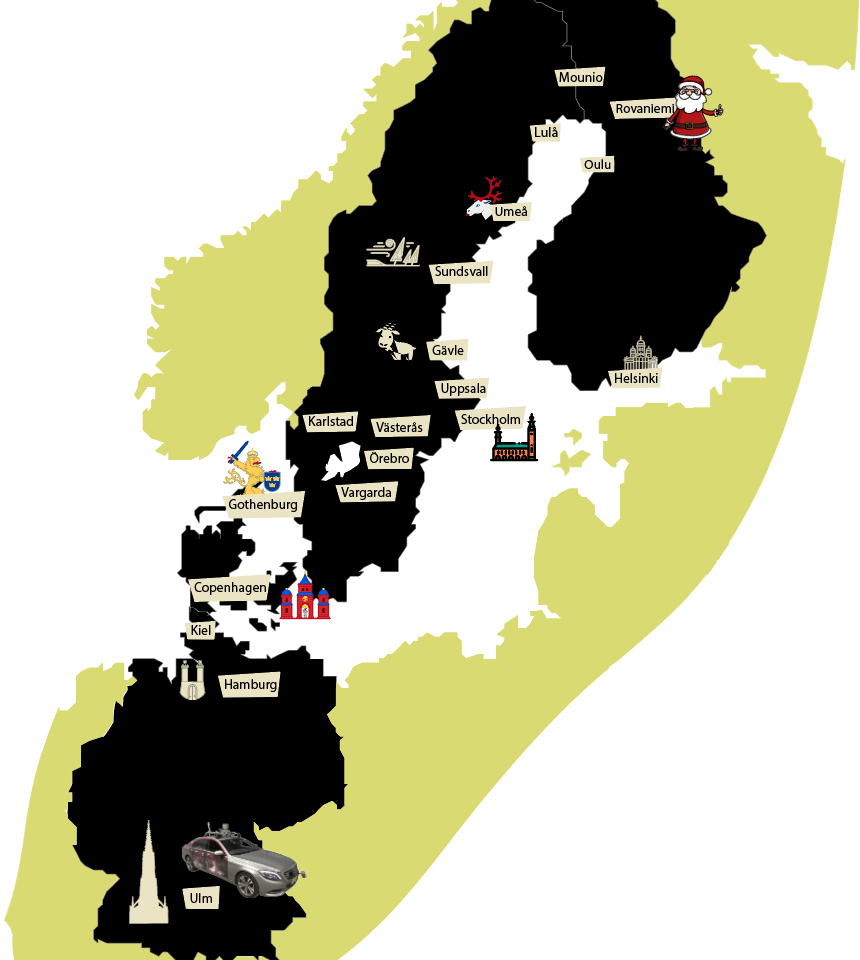

We introduce an object detection dataset in challenging adverse weather conditions covering 12,000 samples in real-world driving scenes and 1,500 samples in controlled weather conditions within a fog chamber. The dataset includes different weather conditions like fog, snow, and rain and was acquired over more than 10,000 km of driving in northern Europe. The driven route with cities along the road is shown on the right. In total, 100k objects were labeled with accurate 2D and 3D bounding boxes. The main contributions of this dataset are:

- We provide a proving ground for a broad range of algorithms covering signal enhancement, domain adaptation, object detection, or multi-modal sensor fusion, focusing on the learning of robust redundancies between sensors, especially if they fail asymmetrically in different weather conditions.

- The dataset was created with the initial intention of showcasing methods that learn robust redundancies between sensors and enable raw-data sensor fusion in the case of asymmetric sensor failure induced by adverse weather effects.

- In our case, we departed from proposal-level fusion and applied an adaptive fusion driven by measurement entropy, enabling detection even under unknown adverse weather effects. This method outperforms other reference fusion methods, which even drop below single-image methods.

- Please check out our paper, available at CVF, for more information.
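The idea of weighting sensors by measurement entropy can be illustrated with a minimal sketch. It assumes Shannon entropy over integer pixel intensities as the per-sensor measure; the function names `shannon_entropy` and `entropy_weights`, and the normalization of entropies into weights, are hypothetical illustrations and not the fusion architecture from the paper.

```python
import math
from collections import Counter


def shannon_entropy(pixels):
    """Shannon entropy in bits of a flat sequence of integer intensities."""
    counts = Counter(pixels)
    total = len(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def entropy_weights(sensor_patches):
    """Turn per-sensor entropies into weights that sum to 1 (hypothetical scheme)."""
    entropies = {name: shannon_entropy(p) for name, p in sensor_patches.items()}
    total = sum(entropies.values())
    if total == 0:
        # No sensor carries information: fall back to uniform weights.
        return {name: 1.0 / len(entropies) for name in entropies}
    return {name: e / total for name, e in entropies.items()}


# A washed-out camera patch (constant intensity) versus a textured lidar patch.
weights = entropy_weights({"camera": [0, 0, 0, 0], "lidar": [0, 1, 2, 3]})
```

In this toy case the constant camera patch has zero entropy, so all weight moves to the lidar patch.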

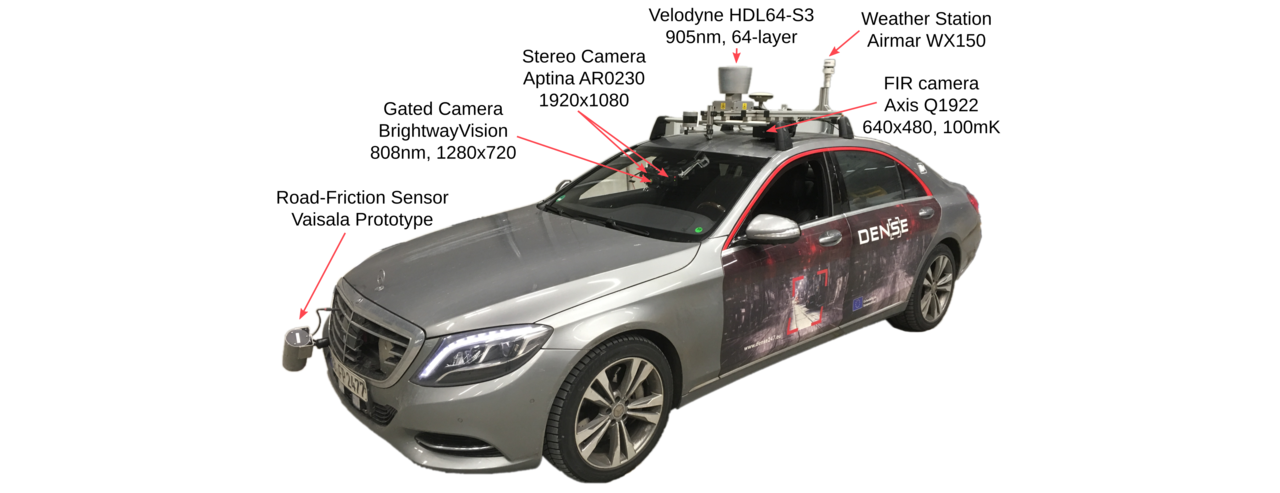

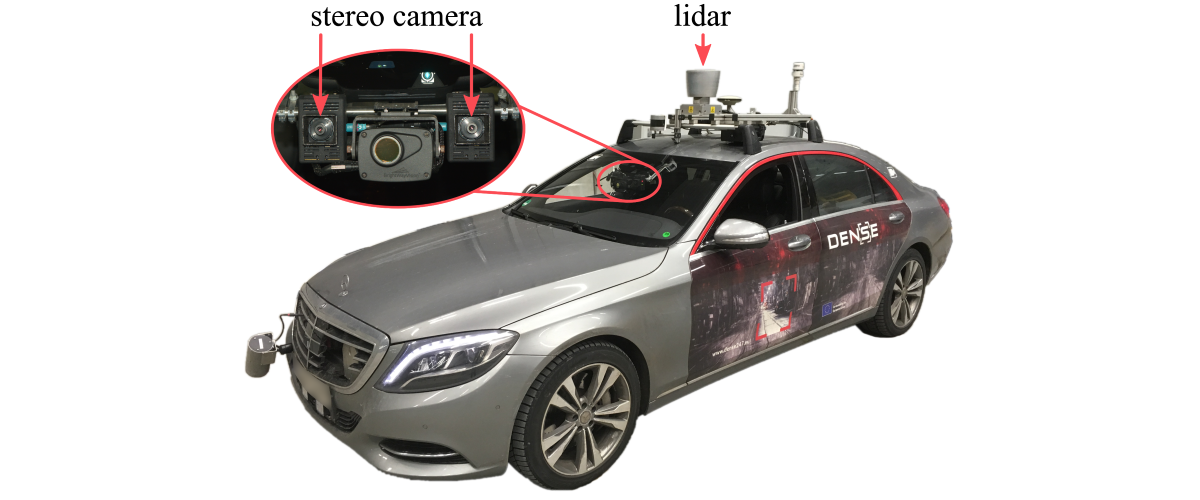

Sensor setup

To capture the dataset, we equipped a test vehicle with sensors covering the visible, mm-wave, NIR, and FIR bands; see the figure below. We measure intensity, depth, and weather conditions. For more information, please refer to our dataset paper.

Dataset Overview

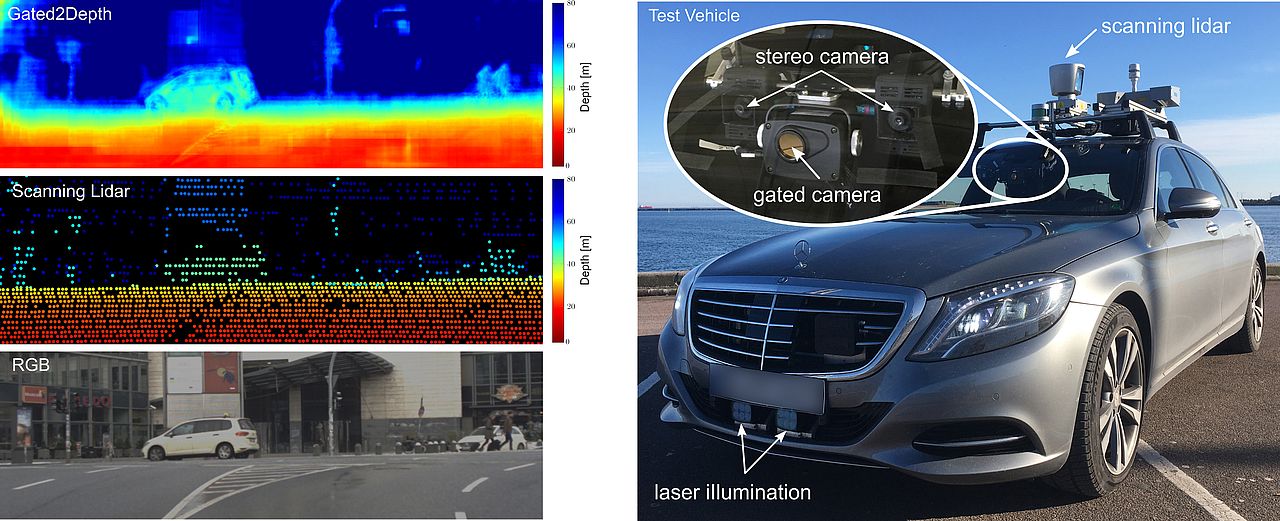

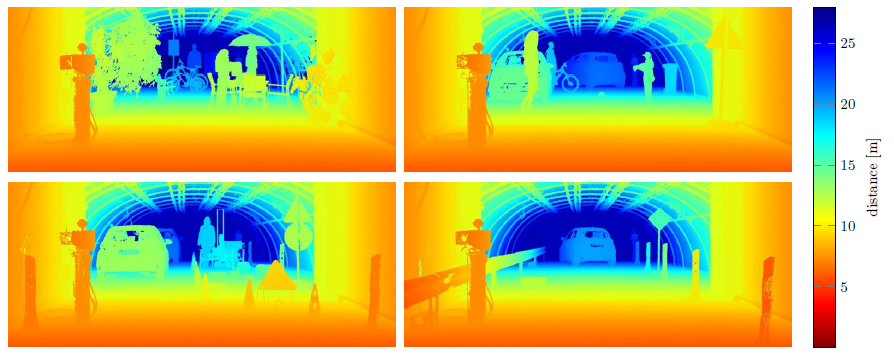

We present an imaging framework that converts three images from a low-cost CMOS gated camera into high-resolution depth maps with depth accuracy comparable to pulsed lidar measurements. The main contributions are:

- We propose a learning-based approach for estimating dense depth from gated images, without the need for dense depth labels for training.

- We validate the proposed method in simulation and on real-world measurements acquired with a prototype system in challenging automotive scenarios. We show that the method recovers dense depth up to 80 m with depth accuracy comparable to scanning lidar.

- Dataset: We provide the first long-range gated dataset, covering over 4,000 km of driving throughout Northern Europe.

Dataset Overview

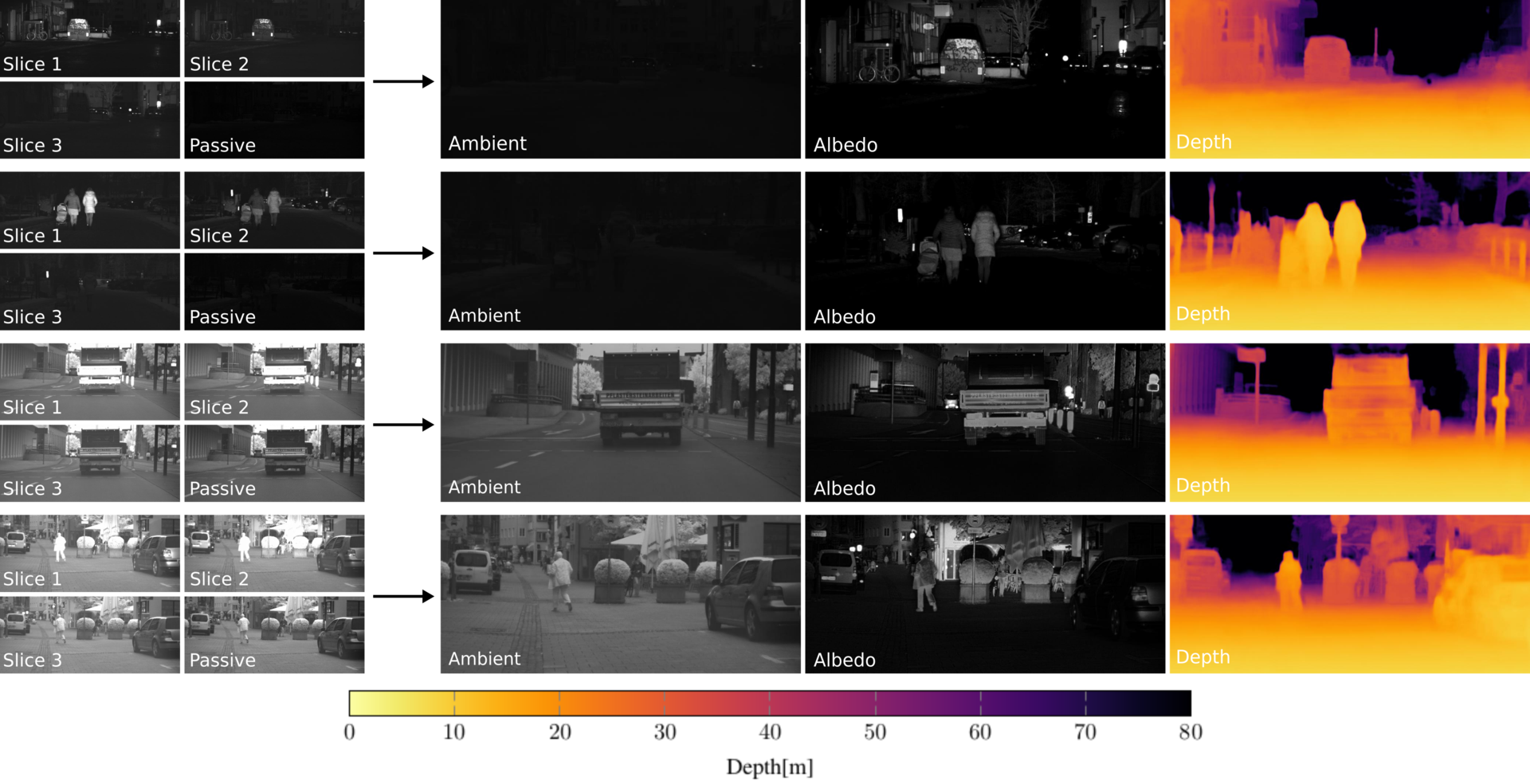

We present a self-supervised imaging framework, which estimates dense depth from a set of three gated images without any ground truth depth for supervision. By predicting scene albedo, depth and ambient illumination, we reconstruct the input gated images and enforce cycle consistency as can be seen in the title picture. In addition, we use view synthesis to introduce temporal consistency between adjacent gated images.

The main contributions are:

- We propose the first self-supervised method for depth estimation from gated images that learns depth prediction without ground truth depth.

- We validate that the proposed method outperforms the previous state of the art, including fully supervised methods, in challenging weather conditions such as dense fog or snowfall, where lidar data is too cluttered to be used for direct supervision.

- Dataset: We provide the first temporal gated imaging dataset, consisting of around 130,000 samples captured in clear and adverse weather conditions, as an extension of the labeled Seeing Through Fog dataset, which provides detailed scene annotations, object labels and corresponding multimodal data.
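The cycle consistency described above can be sketched for a single pixel. The sketch assumes that each gated slice is modeled as albedo times a range-intensity profile plus ambient light; the triangular profiles, their centers and widths, and the function names are hypothetical placeholders, since real profiles depend on the laser pulse and gate timing.

```python
def triangular_profile(depth_m, center_m, half_width_m):
    """Hypothetical range-intensity profile: 1 at the center, 0 beyond the half width."""
    return max(0.0, 1.0 - abs(depth_m - center_m) / half_width_m)


# Hypothetical (center, half width) in metres for the three gated slices.
PROFILES = [(30.0, 40.0), (60.0, 40.0), (90.0, 40.0)]


def reconstruct_gated(albedo, depth_m, ambient):
    """Re-render the three gated intensities of one pixel from the predictions."""
    return [albedo * triangular_profile(depth_m, c, w) + ambient for c, w in PROFILES]


def cycle_loss(measured, albedo, depth_m, ambient):
    """Mean absolute difference between measured and re-rendered slices."""
    rendered = reconstruct_gated(albedo, depth_m, ambient)
    return sum(abs(m - r) for m, r in zip(measured, rendered)) / len(measured)


# Simulate a measurement at 50 m, then score a correct and a wrong depth guess.
measured = reconstruct_gated(albedo=0.5, depth_m=50.0, ambient=0.1)
loss_correct = cycle_loss(measured, albedo=0.5, depth_m=50.0, ambient=0.1)
loss_wrong = cycle_loss(measured, albedo=0.5, depth_m=70.0, ambient=0.1)
```

A wrong depth estimate changes the re-rendered slices and raises the loss, which is what allows such a signal to be used without ground-truth depth.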

Dataset Overview

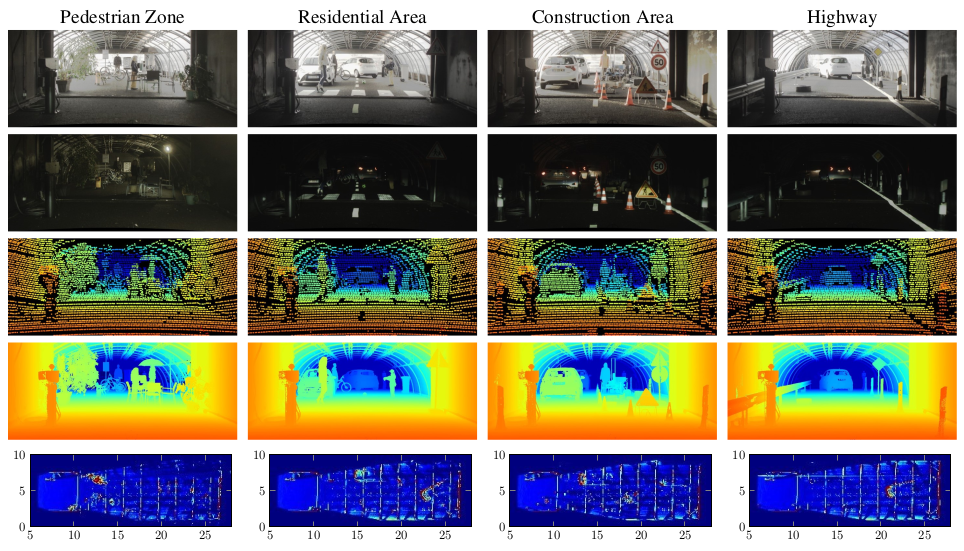

We introduce an evaluation benchmark for depth estimation and completion using high-resolution depth measurements with an angular resolution of up to 25″ (arcseconds), akin to a 50 megapixel camera with per-pixel depth available. The main contributions of this dataset are:

- High-resolution ground truth depth: annotated ground truth with an angular resolution of 25″, an order of magnitude higher than existing lidar datasets with an angular resolution of 300″.

- Fine-grained evaluation under defined conditions: 1,600 samples of four automotive scenarios recorded in defined weather (clear, rain, fog) and illumination (daytime, night) conditions.

- Multi-modal sensors: In addition to monocular camera images, we provide a calibrated sensor setup with a stereo camera and a lidar scanner.

We model four typical automotive outdoor scenarios from the KITTI dataset. Specifically, we set up the following four realistic scenarios: pedestrian zone, residential area, construction area and highway. This benchmark consists of 10 randomly selected samples of each scenario (day/night) in clear, light rain, heavy rain and 17 visibility levels in fog (20–100 m in 5 m steps), resulting in 1,600 samples in total.
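The total of 1,600 samples follows directly from the counts given above. The short calculation below spells this out; the variable names are ours and chosen only for illustration.

```python
scenarios = ["pedestrian zone", "residential area", "construction area", "highway"]
illuminations = ["day", "night"]

# Fog visibility levels from 20 m to 100 m in 5 m steps.
fog_visibilities_m = list(range(20, 101, 5))

# Clear, light rain and heavy rain, plus one setting per fog visibility level.
weather_settings = ["clear", "light rain", "heavy rain"] + [
    f"fog {v} m" for v in fog_visibilities_m
]

samples_per_setting = 10

total_samples = (
    len(scenarios) * len(illuminations) * len(weather_settings) * samples_per_setting
)
```

With 4 scenarios, 2 illumination settings, 20 weather settings and 10 samples each, `total_samples` evaluates to 1,600.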

Sensor Setup

To acquire realistic automotive sensor data, which serves as input for the depth estimation methods assessed in this work, we equipped a research vehicle with an RGB stereo camera (Aptina AR0230, 1920×1024, 12 bit) and a lidar (Velodyne HDL64-S3, 905 nm). All sensors run in a Robot Operating System (ROS) environment and are time-synchronized by a pulse per second (PPS) signal provided by a proprietary inertial measurement unit (IMU).

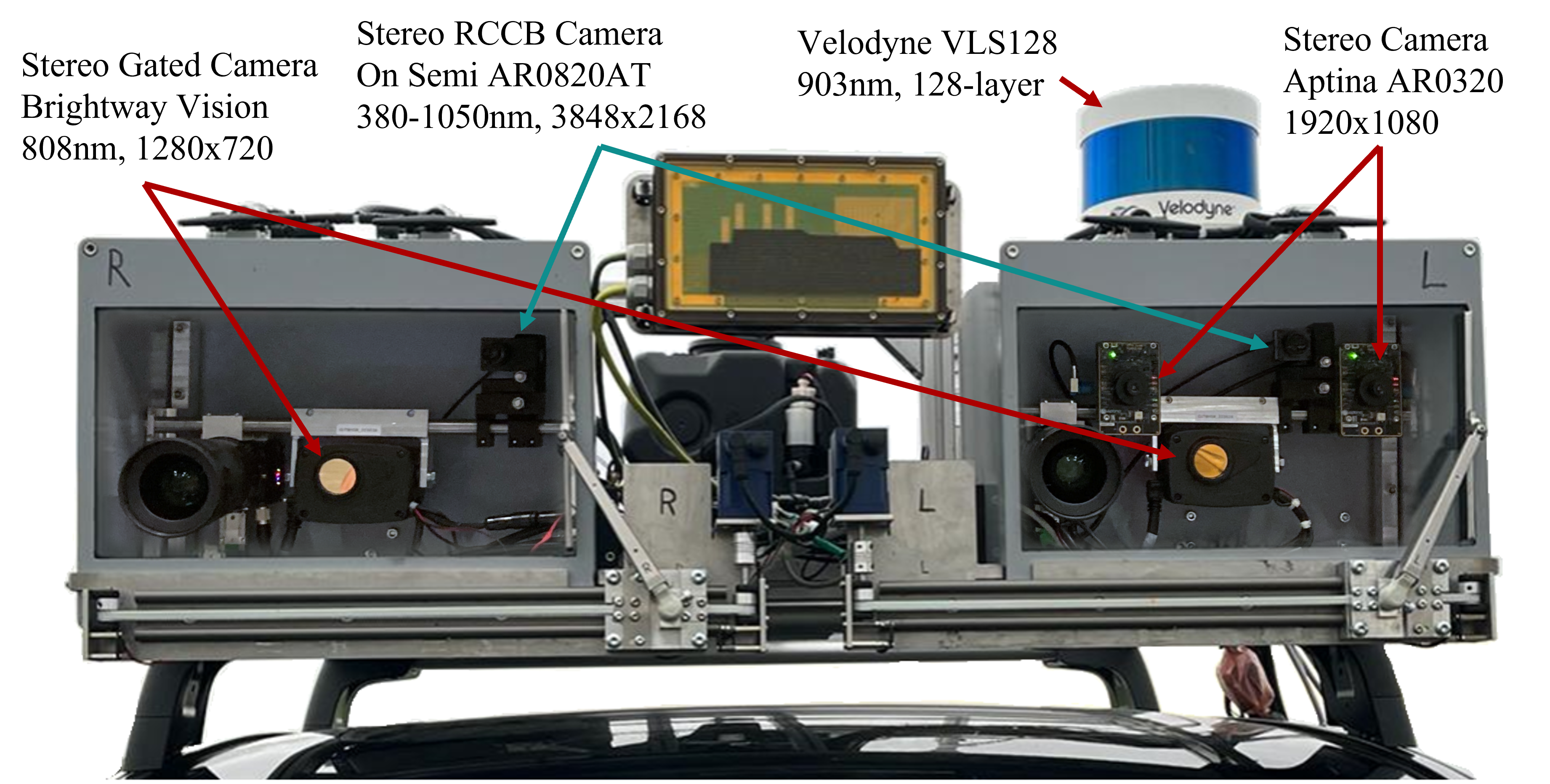

We introduce a long-range depth dataset captured using a vehicle equipped with multiple synchronized sensor systems. Our setup includes a Velodyne VLS128 LiDAR sensor with a range of up to 200 meters operating at 10 Hz with 128 lines, an automotive RGB stereo camera (OnSemi AR0230) providing 12-bit HDR images at a resolution of 1920×1080 pixels and 30 Hz, and a gated stereo NIR camera (BrightWayVision) capturing 10-bit images at 1280×720 pixels with a frame rate of 120 Hz. The gated camera produces three gated slices and two additional ambient captures. Scene illumination is provided by two vertical-cavity surface-emitting laser (VCSEL) modules mounted on the front tow hitch, operating at a wavelength of 808 nm, each delivering 500 W peak power with laser pulses of 240–370 ns duration. All sensors are calibrated and synchronized.
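To give a feeling for these numbers, the following back-of-the-envelope sketch converts the pulse durations into a depth extent and estimates how often a full set of five captures is available. Both relations are assumptions made for illustration: the depth extent uses the round-trip relation c·t/2 and ignores the gate duration, and the cycle rate assumes that every capture occupies exactly one frame of the 120 Hz stream.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def pulse_depth_extent_m(pulse_duration_s):
    """Depth interval spanned by a laser pulse, halved for the round trip."""
    return SPEED_OF_LIGHT_M_PER_S * pulse_duration_s / 2.0


def full_cycle_rate_hz(frame_rate_hz, captures_per_cycle):
    """Rate of complete capture sets, assuming one capture per camera frame."""
    return frame_rate_hz / captures_per_cycle


shortest_extent_m = pulse_depth_extent_m(240e-9)  # roughly 36 m
longest_extent_m = pulse_depth_extent_m(370e-9)   # roughly 55 m

# Three gated slices plus two ambient captures per cycle.
cycle_rate_hz = full_cycle_rate_hz(120.0, 3 + 2)
```

Under these assumptions, a single pulse spans a few tens of meters in depth.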

Our dataset was collected during an extensive driving campaign covering over one thousand kilometers across Southern Germany. It consists of 107,348 total samples captured under diverse lighting conditions including day, night, and various weather scenarios. After careful sub-selection to ensure scenario diversity, we provide a structured split of 54,320 samples for training, 728 for validation, and 2,463 for testing.

We introduce an extension of the Long-Range Stereo Dataset with stereo RCCB images, which is included in the standard download of the dataset. The RCCB stereo array has a baseline of b = 0.76 m and captures images across the spectrum from 380 to 1050 nm. Unlike conventional RGB cameras using an RGGB (Bayer) color pattern, RCCB cameras replace the green channels with clear channels, increasing sensitivity and improving nighttime performance by approximately 30%. The employed Onsemi AR0820AT image sensor is optimized for low-light conditions and challenging high dynamic range scenes, featuring a 2.1 µm DR Pix BSI pixel and an on-sensor HDR capability of up to 140 dB.

To allow for even greater evaluation distances, extending beyond 160 m, and to achieve high-resolution ground-truth depth, we provide densely accumulated LiDAR point clouds for a long-range test set. These point clouds are constructed using a customized adaptation of the LIO-SAM algorithm by Shan et al. [5], leveraging IMU, GNSS, and LiDAR measurements. The accumulated LiDAR maps inherently include distant objects and regions beyond typical sensing ranges, for which we limit the maximum depth to 220 m. Additionally, to handle occlusions and motion artifacts from dynamic objects, we filter projected LiDAR points using depth consistency checks based on stereo depth maps estimated from a foundation stereo algorithm.
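The depth consistency check can be sketched as follows. This is a minimal illustration, not the pipeline used to build the dataset: the relative tolerance `rel_tol`, the function name `filter_lidar_points`, and the input layout (points already projected to integer pixel coordinates) are assumptions; only the 220 m depth limit comes from the description above.

```python
def filter_lidar_points(lidar_points, stereo_depth, max_depth_m=220.0, rel_tol=0.1):
    """Keep projected LiDAR points that agree with a stereo depth estimate.

    lidar_points: list of (row, col, depth_m) tuples projected into the image.
    stereo_depth: 2D list of stereo depth estimates in metres (<= 0 means invalid).
    rel_tol: hypothetical relative tolerance for the consistency check.
    """
    kept = []
    for row, col, depth_m in lidar_points:
        if not (0.0 < depth_m <= max_depth_m):
            continue  # outside the evaluated depth range
        reference_m = stereo_depth[row][col]
        if reference_m <= 0.0:
            continue  # no valid stereo estimate at this pixel
        if abs(depth_m - reference_m) / reference_m <= rel_tol:
            kept.append((row, col, depth_m))
    return kept


stereo = [[50.0, 100.0], [0.0, 200.0]]
points = [(0, 0, 51.0), (0, 1, 60.0), (1, 0, 30.0), (1, 1, 230.0), (1, 1, 205.0)]
consistent = filter_lidar_points(points, stereo)
```

In this toy example, the point at 60 m is rejected because the stereo estimate is 100 m, as would happen for an occluded surface or a moving object that was smeared during accumulation.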

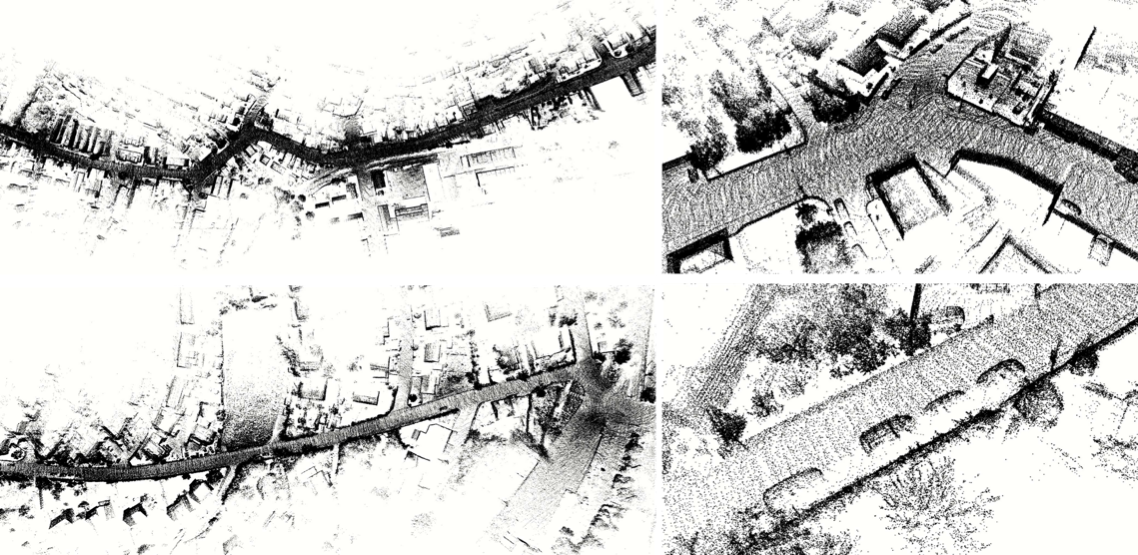

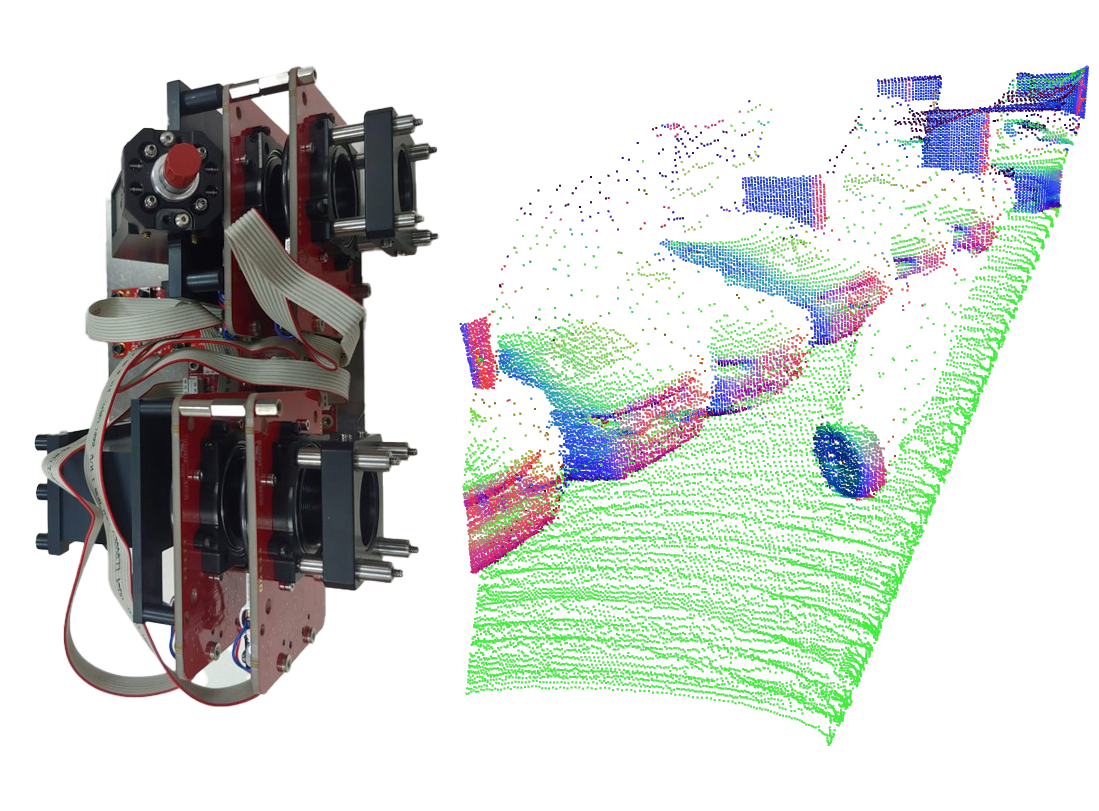

We capture a long-range polarimetric lidar dataset in typical automotive scenes with object distances up to 100 m. Our hardware prototype balances long-range capability of up to 223 m and a high spatial resolution of 150 × 236 within a 23.95° vertical and 31.53° horizontal field of view. On the right, a figure illustrates the internal setup of the hardware. We use separate emission and reception modules, with a MEMS micro-mirror for scanning and a digital micro-mirror device (DMD) to direct returning light, reducing light loss. The system operates at a wavelength of 1064 nm and is equipped with a narrow bandpass filter to reduce ambient light interference while maintaining Class-1 eye safety standards. The laser power can be adjusted to find the right balance between range and signal saturation. The laser light is modulated in polarization through a half-wave and a quarter-wave plate. Subsequently, a back-side illuminated avalanche photodiode (APD) with an adjustable bias reads the raw signal, which is digitized by a PCIe-5764 FlexRIO-Digitizer ADC sampling at 1 GS/s, providing a resolution of 15 cm per bin.
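The 15 cm range bin follows from the sampling rate through the round-trip relation c / (2·f_s). The sketch below checks this and also derives the angular step per pixel; for the latter it assumes that the 150 samples span the vertical and the 236 samples the horizontal field of view, which the text does not state explicitly.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_bin_m(sample_rate_hz):
    """Range covered by one ADC sample, halved for the round trip."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * sample_rate_hz)


range_bin = range_bin_m(1e9)  # about 0.15 m at 1 GS/s

# Number of bins needed to cover the maximum range of 223 m.
bins_to_max_range = 223.0 / range_bin

# Assumed mapping: 150 rows over 23.95 deg, 236 columns over 31.53 deg.
vertical_step_deg = 23.95 / 150
horizontal_step_deg = 31.53 / 236
```

Under the assumed mapping, the angular step is roughly 0.16° vertically and 0.13° horizontally.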

Related Publications

[1] Mario Bijelic, Tobias Gruber, Fahim Mannan, Florian Kraus, Werner Ritter, Klaus Dietmayer, Felix Heide. Seeing Through Fog Without Seeing Fog: Deep Multimodal Sensor Fusion in Unseen Adverse Weather. CVPR, 2020.

[2] Tobias Gruber, Frank Julca-Aguilar, Mario Bijelic, Felix Heide. Gated2Depth: Real-Time Dense Lidar From Gated Images. ICCV, 2019.

[3] Amanpreet Walia, Stefanie Walz, Mario Bijelic, Fahim Mannan, Frank Julca-Aguilar, Michael Langer, Werner Ritter, Felix Heide. Gated2Gated: Self-Supervised Depth Estimation from Gated Images. CVPR, 2022.

[4] Tobias Gruber, Mario Bijelic, Felix Heide, Werner Ritter, Klaus Dietmayer. Pixel-Accurate Depth Evaluation in Realistic Driving Scenarios. 3DV, 2019.

[5] Tixiao Shan, Brendan Englot, Drew Meyers, Wei Wang, Carlo Ratti, Daniela Rus. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. IROS, 2020.