Multi-view Spectral Polarization Propagation for Video Glass Segmentation

- Yu Qiao

- Bo Dong

- Ao Jin

- Yu Fu

- Seung-Hwan Baek

- Felix Heide

- Pieter Peers

- Xiaopeng Wei

- Xin Yang

ICCV 2023

We present a polarization-guided video glass segmentation propagation method (PGVS-Net) that robustly and coherently propagates glass segmentation in RGB-P video sequences. By exploiting spatio-temporal polarization and color information, our method combines multi-view polarization cues and can thus alleviate the view dependence of single-input intensity variations on glass objects. The approach outperforms glass segmentation methods that operate on RGB-only video sequences, and it produces more robust segmentation than per-frame RGB-P single-image segmentation methods.

Paper

Yu Qiao, Bo Dong, Ao Jin, Yu Fu, Seung-Hwan Baek, Felix Heide, Pieter Peers, Xiaopeng Wei, Xin Yang

Multi-view Spectral Polarization Propagation for Video Glass Segmentation

ICCV 2023

PGV-117 Dataset

To train and evaluate our method, we introduce a novel RGB-P video dataset, PGV-117. The dataset is collected using a trichromatic (RGB) polarizer-array camera (LUCID PHX050S), equipped with four directional linear polarizers, i.e., $0^{\circ}$, $45^{\circ}$, $90^{\circ}$ and $135^{\circ}$. The frame rate is fixed at 30 fps, and we vary the exposure time from 3,000 μs to 39,999 μs depending on the scene and lighting conditions. Each frame in the PGV-117 dataset is composed of an RGB image, four directional polarization images, inferred spectral angle of linear polarization (AoLP) and degree of linear polarization (DoLP) maps, and the captured RAW camera data. Because polarization cues are angle dependent, we avoid biasing PGV-117 toward certain view angles and camera paths by randomly picking one of five pre-determined camera motion patterns: front, inclined, circular, straight-forward, and up-down.
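The text above does not spell out how the AoLP and DoLP maps are inferred from the four directional images. A common approach, assumed here and not confirmed by the source, is to form the linear Stokes components and derive both maps from them. The sketch below uses NumPy; the function name `stokes_to_aolp_dolp` and the `eps` parameter are illustrative choices, not part of the dataset tooling.

```python
import numpy as np

def stokes_to_aolp_dolp(i0, i45, i90, i135, eps=1e-8):
    """Estimate AoLP and DoLP from four linear-polarizer intensity images.

    Assumption: the standard linear Stokes formulation; the dataset's own
    processing may differ. Inputs are arrays of identical shape, e.g.
    (H, W) or (H, W, 3); color channels are handled independently.
    """
    i0, i45, i90, i135 = (
        np.asarray(a, dtype=np.float64) for a in (i0, i45, i90, i135)
    )
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # 0 deg vs. 90 deg component
    s2 = i45 - i135                     # 45 deg vs. 135 deg component

    # Degree of linear polarization; guard against division by zero.
    dolp = np.sqrt(s1 ** 2 + s2 ** 2) / np.maximum(s0, eps)
    dolp = np.clip(dolp, 0.0, 1.0)

    # Angle of linear polarization in radians, with negative angles
    # wrapped into the 0..pi range.
    aolp = np.mod(0.5 * np.arctan2(s2, s1), np.pi)
    return aolp, dolp
```

For fully polarized light oriented at $0^{\circ}$, the four measurements are proportional to 1, 0.5, 0, and 0.5, which yields a DoLP of 1 and an AoLP of 0 under this formulation.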

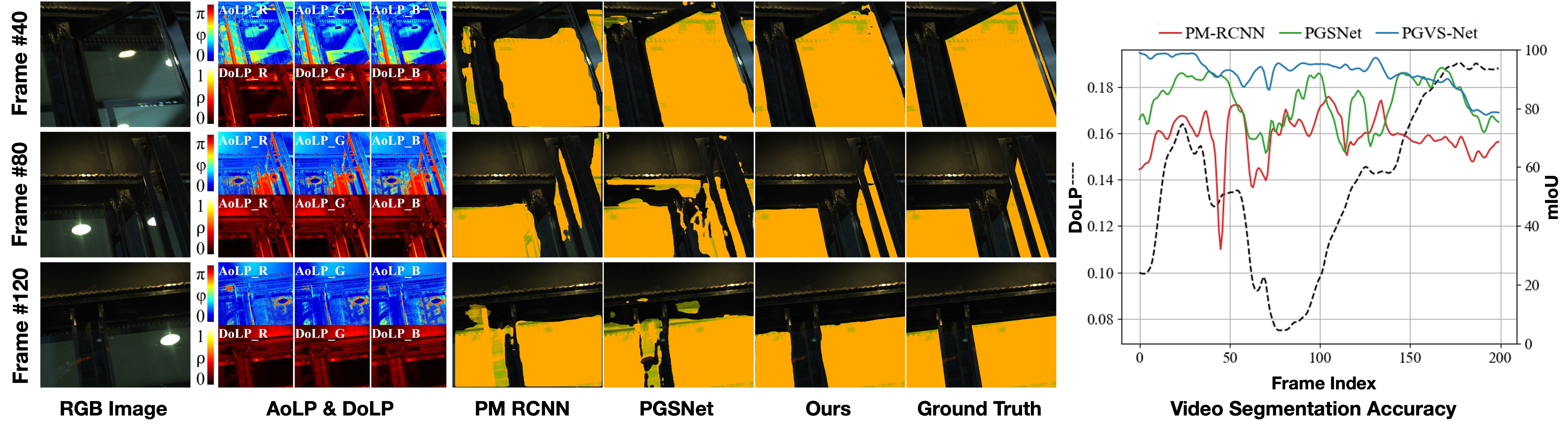

Qualitative Comparisons

We compared PGVS-Net with single-frame image-based glass segmentation techniques and state-of-the-art video segmentation approaches. To ensure a fair evaluation, we retrained all of these methods on the PGV-117 dataset and assessed their performance on 32 test sequences. The baselines are:

1. PM-RCNN, an RGB-P glass segmentation method.

2. ViSha, a recent video shadow detection method.

3. Spatio-Temporal Memory (STM)-based video segmentation methods, namely STCN and RDE.

In all our experiments, during the inference stage, the initial masks for PGVS-Net were generated by the polarization-aware PGSNet. The qualitative results of this comparison are shown below.

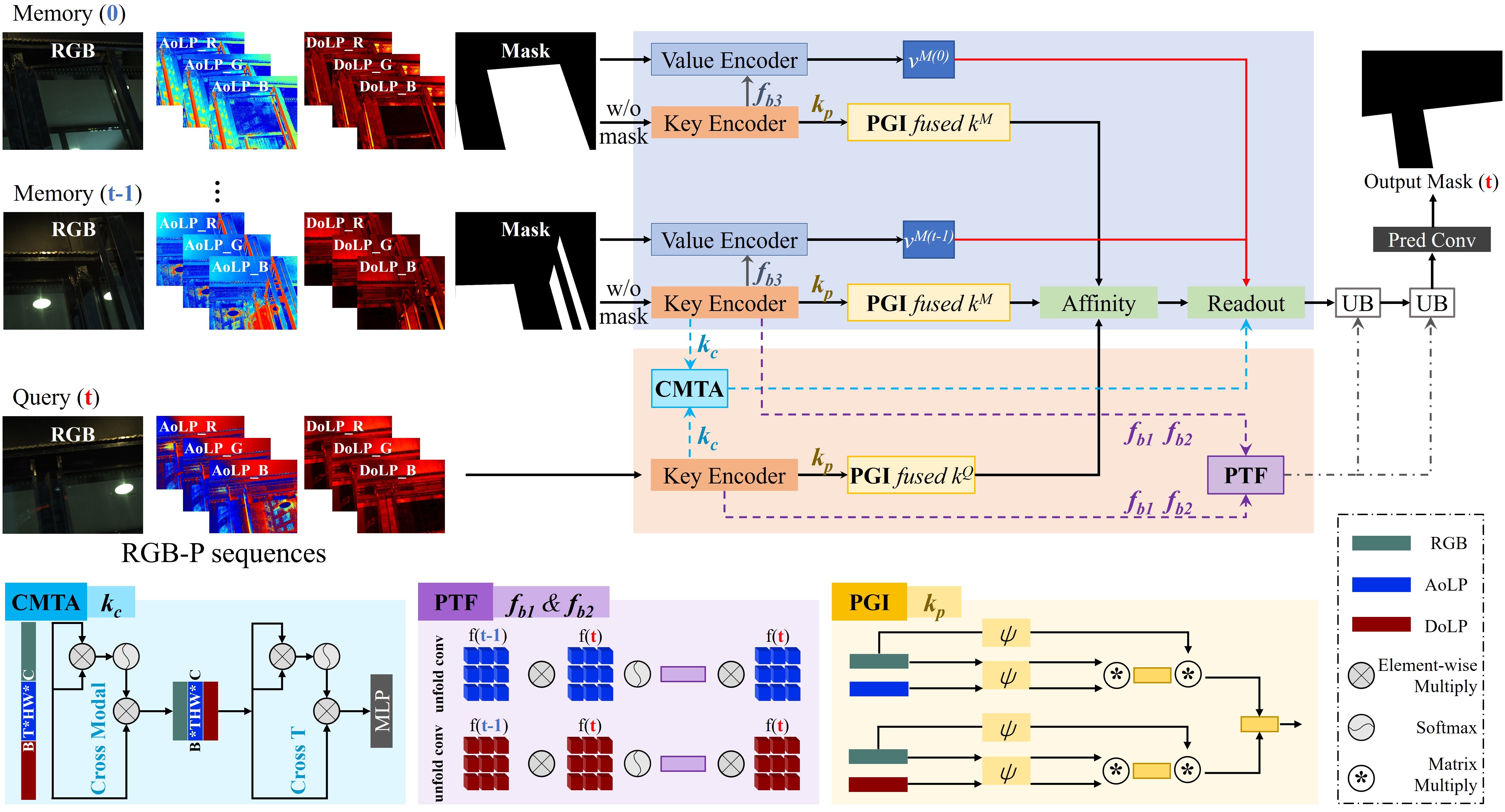

Polarization Video Glass Segmentation network (PGVS-Net)

PGVS-Net processes video frames sequentially, beginning with the second frame and using the ground truth annotation provided for the initial frame. As processing proceeds, preceding frames with object masks (given for the first frame, inferred for subsequent frames) serve as memory frames. The current frame, which has no object mask yet, serves as the query frame. Note that each frame consists of trichromatic intensity $I$, AoLP $\varphi$, and DoLP $\rho$ information.
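The frame-by-frame procedure described above can be sketched as a simple loop. This is a minimal illustration of the control flow only: `propagate_masks` and the `segment_query` callback are hypothetical names standing in for the network, and the sketch assumes every preceding frame is kept as a memory frame.

```python
from typing import Any, Callable, List, Sequence, Tuple

def propagate_masks(
    frames: Sequence[Any],
    first_mask: Any,
    segment_query: Callable[[Any, List[Tuple[Any, Any]]], Any],
) -> List[Any]:
    """Propagate a first-frame mask through a video.

    frames:        per-frame inputs (e.g. intensity, AoLP, DoLP bundles)
    first_mask:    mask supplied for frames[0]
    segment_query: hypothetical stand-in for the network; takes the query
                   frame and the list of (frame, mask) memory pairs and
                   returns the predicted mask for the query frame
    """
    if not frames:
        return []
    memory = [(frames[0], first_mask)]  # the first frame seeds the memory
    masks = [first_mask]
    for t in range(1, len(frames)):     # processing starts at the second frame
        mask = segment_query(frames[t], memory)
        masks.append(mask)
        # The frame and its inferred mask become a memory frame for later queries.
        memory.append((frames[t], mask))
    return masks
```

Keeping every past frame in memory is the simplest policy; memory-based video segmentation methods often subsample memory frames to bound cost, and whether PGVS-Net does so is not stated here.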

We encode each frame into a paired set of key and value maps. Specifically, for a given modality $x$, we use a ResNet50 architecture to encode the modality into three distinct feature maps: $f_{b1}^x$, $f_{b2}^x$, and $f_{b3}^x$, corresponding to the outputs of the first, second, and third blocks, respectively. The key feature map of each frame is derived using our specially devised polarization-guided integration module, based on $f_{b1}^x$, $f_{b2}^x$, and $f_{b3}^x$. Importantly, both memory and query frames employ the same methodology to generate key maps. For memory frames, the value map is generated through the following steps:

1. Trichromatic $I$, $\varphi$, $\rho$, and the glass mask are concatenated along the channel dimension and fed as input to a ResNet18 model to produce a feature map;

2. The resulting feature map is concatenated with $f_{b3}^x$ and passed through two residual blocks and a Convolutional Block Attention Module (CBAM);

3. Finally, the memory value maps are produced by aggregating the multimodality maps from step 2.

In essence, given the query key and the pairs of memory key and value maps, PGVS-Net estimates the value map for a query frame through multi-view polarimetric propagation and the extraction of temporal correlations. A decoder then translates the resulting query value map into the glass mask of the query frame.
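To make the key/value lookup concrete, the sketch below shows a generic attention-style memory read of the kind used in space-time memory networks: each query location is compared with every memory location, and the memory values are averaged with softmax weights. It assumes a scaled dot-product affinity and flattened feature maps; PGVS-Net's actual propagation module may differ, and `memory_read` is an illustrative name.

```python
import numpy as np

def memory_read(query_key, memory_keys, memory_values):
    """Read a query value map from memory by key similarity.

    Assumption: scaled dot-product affinity with a softmax over memory
    locations (a generic formulation, not necessarily the paper's).

    query_key:     (C_k, N_q)  keys at the N_q query locations
    memory_keys:   (C_k, N_m)  keys at the N_m memory locations
    memory_values: (C_v, N_m)  values at the memory locations
    returns:       (C_v, N_q)  estimated values at the query locations
    """
    q = np.asarray(query_key, dtype=np.float64)
    k = np.asarray(memory_keys, dtype=np.float64)
    v = np.asarray(memory_values, dtype=np.float64)

    # Affinity between every memory location and every query location.
    affinity = (k.T @ q) / np.sqrt(q.shape[0])           # (N_m, N_q)

    # Numerically stable softmax over the memory axis.
    affinity = affinity - affinity.max(axis=0, keepdims=True)
    weights = np.exp(affinity)
    weights = weights / weights.sum(axis=0, keepdims=True)

    # Each query value is a weighted average of the memory values.
    return v @ weights                                    # (C_v, N_q)
```

With memory frames stacked along the location axis, `N_m` equals the number of memory frames times the number of spatial positions, so a single query location can draw on observations of the same glass region from several viewpoints.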

Related Publications

[1] Haiyang Mei, Bo Dong, Wen Dong, Jiaxi Yang, Seung-Hwan Baek, Felix Heide, Pieter Peers, Xiaopeng Wei, Xin Yang. Glass Segmentation using Intensity and Spectral Polarization Cues. CVPR, 2022.

[2] Seung-Hwan Baek and Felix Heide. Polarimetric Spatio-Temporal Light Transport Probing. ACM SIGGRAPH Asia, 2021.