Radar Fields: Frequency-Space Neural Scene Representations for FMCW Radar

- David Borts
- Erich Liang
- Tim Brödermann
- Andrea Ramazzina
- Stefanie Walz
- Edoardo Palladin
- Jipeng Sun
- David Bruggemann
- Christos Sakaridis
- Luc Van Gool
- Mario Bijelic
- Felix Heide

SIGGRAPH 2024

Neural fields have demonstrated remarkable success in outdoor scene reconstruction, but neural reconstruction for radar remains largely unexplored. Radar sensors are cost-effective, widely deployed, and robust to scattering in fog and rain, making them a complementary and practical alternative to more expensive optical sensing modalities that fail in adverse conditions. We present Radar Fields, a neural scene reconstruction method designed for active radar imagers. For the first time, it is possible to reconstruct large, outdoor 3D scenes from a single drive-through with a 2D radar sensor. Our approach unites an explicit, physics-informed sensor model with an implicit, learned neural geometry and reflectance model to directly synthesize raw radar measurements and extract 3D geometry.


Neural Fields for FMCW Radar

We introduce Radar Fields, a scene representation that recovers dense geometry and synthesizes radar signals at unseen views by learning from a single trajectory of raw radar measurements. We overcome the limitations of conventional radar processing by learning directly in frequency space: we train on Fourier-transformed raw radar waveforms instead of sparse, processed radar point clouds. We exploit the physics of FMCW radar to relate frequency-space ground-truth information to 3D space. This gives us access to much higher spatial resolution (< 0.05 m radially) in a sensor otherwise notorious for its low resolution. For the first time, we can recover 3D geometry from a 2D radar sensor without any volume rendering.
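The frequency-to-space correspondence rests on the standard FMCW relation: a target at range $R$ produces a beat frequency $f_b = 2RS/c$, where $S = B/T$ is the chirp slope, so each FFT bin of the beat signal maps to a range interval. The sketch below illustrates only that relation and is not the authors' processing pipeline; the chirp parameters `B`, `T`, and `fs` are hypothetical and are not those of the sensor used here.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s

def beat_frequency(R, slope):
    """Beat frequency (Hz) of a target at range R (m) for chirp slope S = B/T (Hz/s)."""
    return 2.0 * R * slope / C

def bin_to_range(k, fs, n_samples, slope):
    """Range (m) corresponding to FFT bin k of an n_samples-long beat signal."""
    f_k = k * fs / n_samples          # bin center frequency
    return f_k * C / (2.0 * slope)    # invert f_b = 2 R S / c

# Hypothetical chirp parameters (not from the source)
B = 1e9                 # sweep bandwidth, Hz
T = 100e-6              # chirp duration, s
slope = B / T           # chirp slope, Hz/s
fs = 10e6               # ADC sampling rate, Hz
n = int(round(T * fs))  # samples per chirp (1000)

# Simulate the beat signal of a single point target at 30 m
t = np.arange(n) / fs
R_true = 30.0
signal = np.cos(2.0 * np.pi * beat_frequency(R_true, slope) * t)

# Frequency space -> 3D space: the strongest non-DC bin gives the range
spectrum = np.abs(np.fft.rfft(signal))
k_peak = int(np.argmax(spectrum[1:]) + 1)  # skip the DC bin
R_est = bin_to_range(k_peak, fs, n, slope)
print(k_peak, round(R_est, 2))  # → 200 29.98
```

With these parameters each bin spans $c/(2B) \approx 0.15$ m, so the recovered range is accurate to within one bin; a wider sweep bandwidth shrinks the bin width proportionally.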

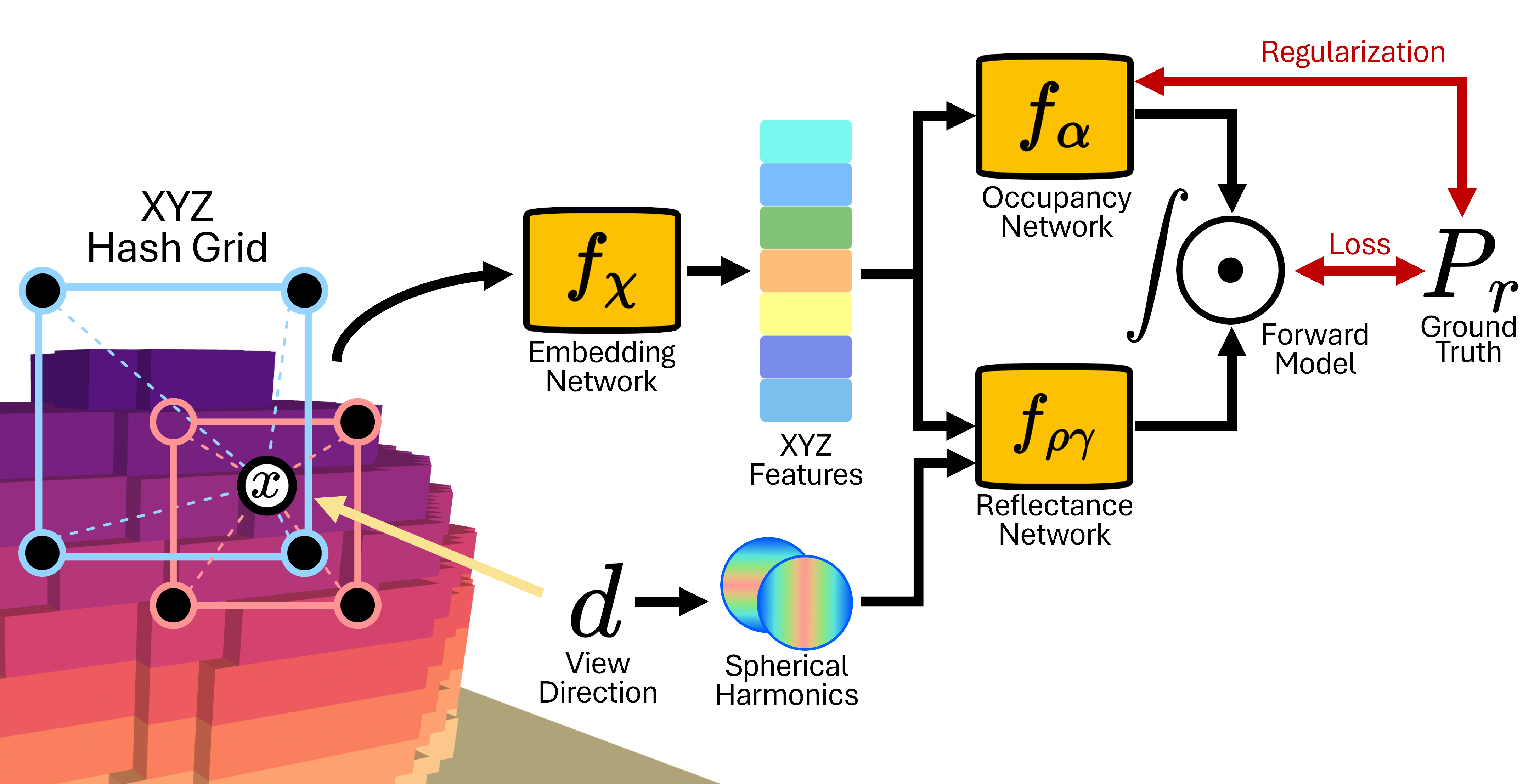

Signal Formation Model

We establish a direct correlation between the presence of frequencies in the raw, Fourier-transformed radar waveforms and the presence of objects in 3D space. For a point at distance $R$ from the radar sensor with opacity $\alpha$, reflectivity $\rho$, and directivity $\gamma$, the Fourier transform of the detected radar signal has amplitude $P_r$ at the frequency bin corresponding to $R$. All variables in the signal formation equation are known except $\alpha$, $\rho$, and $\gamma$. This model lets us relate physical properties of the scene to the detected signal and decompose the problem into separate learned 3D occupancy and reflectance neural fields.
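The signal formation step can be sketched as follows, assuming a classical radar-range-equation form $P_r \propto P_t G^2 \lambda^2 \sigma / \left((4\pi)^3 R^4\right)$ in which the radar cross section $\sigma$ is replaced by the product $\alpha\rho\gamma$, and assuming each point's power lands in the range bin of its distance $R$. The function name `signal_formation`, the unit transmit power and gain, and the 3.9 mm wavelength are hypothetical placeholders; the paper's exact equation may differ.

```python
import numpy as np

def signal_formation(ranges, alpha, rho, gamma, range_res, n_bins,
                     P_t=1.0, G=1.0, wavelength=3.9e-3):
    """Accumulate per-point returned power into range (frequency) bins.

    Hypothetical form: radar-equation falloff with the cross section replaced
    by alpha * rho * gamma. `ranges` must be strictly positive (meters).
    """
    ranges = np.asarray(ranges, dtype=float)
    alpha, rho, gamma = (np.asarray(v, dtype=float) for v in (alpha, rho, gamma))
    K = P_t * G**2 * wavelength**2 / (4.0 * np.pi) ** 3   # known sensor constants
    power = K * alpha * rho * gamma / ranges**4            # only alpha, rho, gamma are unknown
    bins = np.floor(ranges / range_res).astype(int)        # range -> frequency bin
    waveform = np.zeros(n_bins)
    valid = (bins >= 0) & (bins < n_bins)
    np.add.at(waveform, bins[valid], power[valid])         # sum points sharing a bin
    return waveform

# Two unit-reflectance, fully occupied points at 10 m and 20 m
w = signal_formation([10.0, 20.0], np.ones(2), np.ones(2), np.ones(2),
                     range_res=0.25, n_bins=100)
ratio = w[40] / w[80]  # bins for 10 m and 20 m
```

Under this assumed falloff, moving a point from 10 m to 20 m lowers its contribution by $2^4 = 16$, and a point with $\alpha = 0$ contributes nothing; the known constants factor out, leaving occupancy and reflectance as the only quantities to learn.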


Architecture

We learn separate MLPs for the unknowns in our signal formation model: occupancy ($\alpha$) and reflectance ($\rho\cdot\gamma$). Input 3D coordinates $x$ and view directions $d$ are super-sampled based on the divergence of the radar beam and encoded with a multi-resolution hash grid and spherical harmonics, respectively. The predictions pass through our signal formation model and are integrated across super-samples to directly predict frequency-space radar waveforms. We apply geometric regularization penalties to the occupancy network to improve 3D reconstructions and to break the symmetry between $f_\alpha$ and $f_{\rho\gamma}$, which arises because the signal depends only on their product.
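A minimal NumPy sketch of the two-network split, under several substitutions that are ours and not the paper's: a fixed sinusoidal encoding stands in for the multi-resolution hash grid, degree-1 real spherical harmonics encode the view direction, the networks are untrained with random weights, and we assume (hypothetically) that only $f_{\rho\gamma}$ receives the view encoding, since directivity depends on viewing direction. Sigmoid and softplus outputs keep $\alpha \in (0, 1)$ and $\rho\gamma \ge 0$.

```python
import numpy as np

rng = np.random.default_rng(0)
N_FREQS = 4

def sinusoidal_encoding(x, n_freqs=N_FREQS):
    """Stand-in for the hash grid: sin/cos features at octave frequencies, (N, 3) -> (N, 6F)."""
    freqs = 2.0 ** np.arange(n_freqs)
    xf = x[..., None] * freqs                                   # (N, 3, F)
    return np.concatenate([np.sin(xf), np.cos(xf)], axis=-1).reshape(x.shape[0], -1)

def sh_degree1(d):
    """Real spherical harmonics up to degree 1 for unit view directions d, (N, 3) -> (N, 4)."""
    x, y, z = d[:, 0], d[:, 1], d[:, 2]
    c0, c1 = 0.28209479, 0.48860251
    return np.stack([np.full_like(x, c0), c1 * y, c1 * z, c1 * x], axis=-1)

def init_mlp(sizes):
    """Random (untrained) weights and zero biases for each layer."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(params, h):
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)  # ReLU on hidden layers only
    return h

pos_dim = 3 * 2 * N_FREQS
f_alpha = init_mlp([pos_dim, 32, 1])         # occupancy: position only
f_rhogamma = init_mlp([pos_dim + 4, 32, 1])  # reflectance: position + view (assumed)

def query(x, d):
    """Return per-sample occupancy alpha and reflectance rho*gamma."""
    ex, ed = sinusoidal_encoding(x), sh_degree1(d)
    alpha = 1.0 / (1.0 + np.exp(-mlp(f_alpha, ex)[:, 0]))      # sigmoid
    rho_gamma = np.logaddexp(0.0, mlp(f_rhogamma, np.concatenate([ex, ed], axis=-1))[:, 0])  # softplus
    return alpha, rho_gamma

x = rng.uniform(-1.0, 1.0, (5, 3))
d = rng.normal(size=(5, 3))
d /= np.linalg.norm(d, axis=1, keepdims=True)
alpha, rho_gamma = query(x, d)
```

Each sample's contribution to the waveform is `alpha * rho_gamma` (times the range falloff); because only that product is observed, scaling one factor up and the other down leaves predictions unchanged, which is why a regularizer on $f_\alpha$ is needed to pin down geometry.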

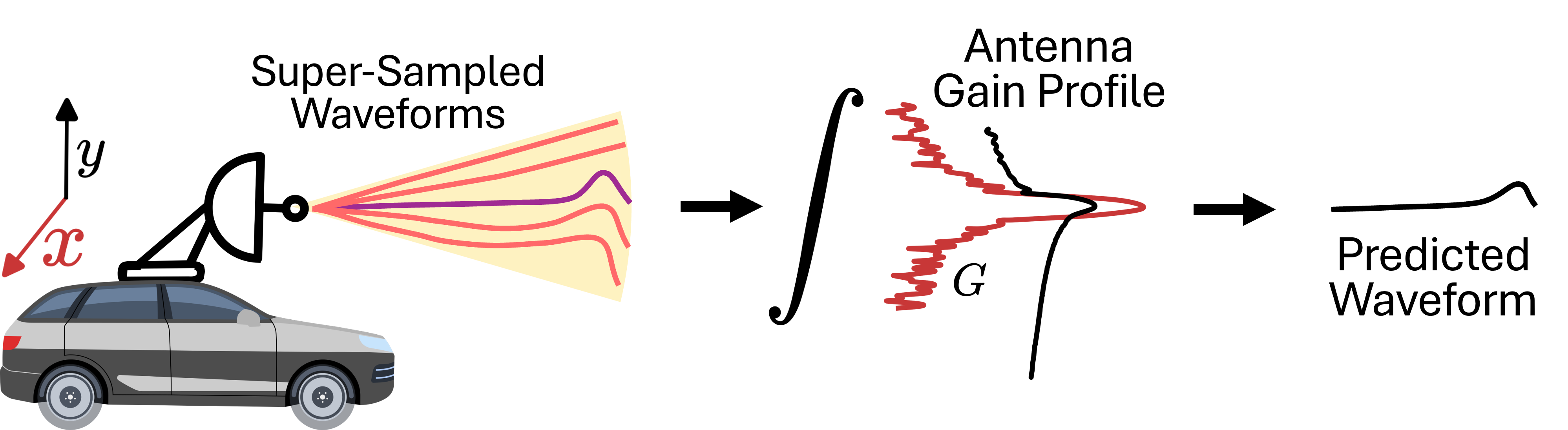

3D Beam Integration

During training and inference, we sample our neural fields by super-sampling additional rays within the elliptical cone (yellow) defined by the divergence of a single radio beam as it propagates through the scene. For each of the 400 beams our radar emits per frame (purple), we super-sample around each ray (red) and query our neural fields at all of the sampled points. We then integrate the model predictions separately for each ray, weighting the samples by the gain profile of the radar antenna. This mimics how a real FMCW radar collects measurements, mixing the returned power across the entire cone into a single waveform (black), and therefore lets us accurately map from the 3D scene to the integrated 2D scan measurements.
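The super-sampling and gain weighting can be sketched as below. We assume (hypothetically) that rays are drawn uniformly inside an elliptical cone with half-angles `half_az` and `half_el`, that the antenna gain is a Gaussian in the normalized angular offset, and that per-ray waveforms are combined by a gain-normalized weighted sum. The divergence values and the random placeholder waveforms are illustrative, not the sensor's specifications; in the full method each per-ray waveform would come from the signal formation step.

```python
import numpy as np

def sample_beam_rays(azimuth, half_az, half_el, n_rays, rng):
    """Sample unit ray directions uniformly inside an elliptical cone around a beam
    pointing at `azimuth` (radians) with zero elevation."""
    r = np.sqrt(rng.uniform(0.0, 1.0, n_rays))   # sqrt gives uniform density over the ellipse
    phi = rng.uniform(0.0, 2.0 * np.pi, n_rays)
    d_az = half_az * r * np.cos(phi)             # azimuth offset from beam center
    d_el = half_el * r * np.sin(phi)             # elevation offset from beam center
    az = azimuth + d_az
    dirs = np.stack([np.cos(d_el) * np.cos(az),
                     np.cos(d_el) * np.sin(az),
                     np.sin(d_el)], axis=-1)
    return dirs, d_az, d_el

def gaussian_gain(d_az, d_el, half_az, half_el):
    """Hypothetical gain profile: 1 at the beam center, exp(-2) at the cone edge."""
    return np.exp(-2.0 * ((d_az / half_az) ** 2 + (d_el / half_el) ** 2))

def integrate_beam(ray_waveforms, weights):
    """Gain-weighted combination of per-ray waveforms (n_rays, n_bins) into one beam waveform."""
    w = weights / weights.sum()
    return w @ ray_waveforms

rng = np.random.default_rng(0)
n_beams = 400
azimuths = 2.0 * np.pi * np.arange(n_beams) / n_beams   # one beam every 0.9 degrees
half_az, half_el = np.deg2rad(0.9), np.deg2rad(1.8)      # hypothetical divergences

dirs, d_az, d_el = sample_beam_rays(azimuths[0], half_az, half_el, 16, rng)
weights = gaussian_gain(d_az, d_el, half_az, half_el)
ray_waveforms = rng.random((16, 8))   # placeholder per-ray waveforms, 8 range bins
beam_waveform = integrate_beam(ray_waveforms, weights)
```

Normalizing the weights keeps the beam waveform on the same scale regardless of how many super-samples are drawn; an unnormalized sum would instead grow with the sample count.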


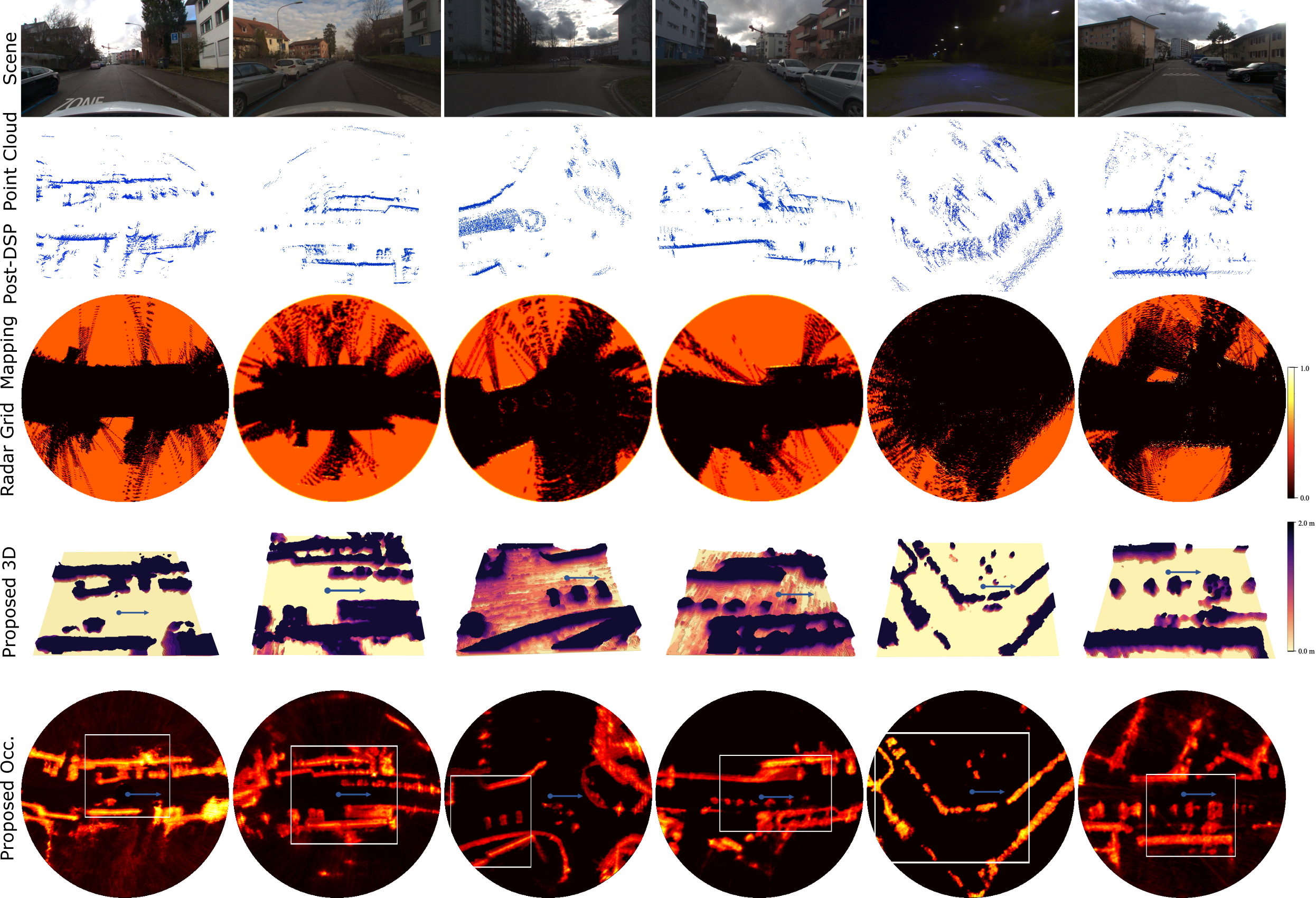

3D Radar Reconstructions

Radar Fields can extract dense 3D scene geometry from a low-resolution 2D radar sensor. Conventional radar mapping algorithms [1] often rely on sparse, processed point clouds and operate entirely in 2D. This produces less informative scene reconstructions that often fail to resolve smaller objects such as cars or to see behind reflective objects. Radar Fields resolves individual vehicles, reflective objects, and large-scale infrastructure, even when these objects occlude each other.

We compare the dense 3D geometry learned by Radar Fields to the sparse 2D point clouds typically produced by on-device radar signal processing algorithms (2nd row), and to a conventional radar grid-mapping reference method [1] applied to those point clouds (3rd row). Since these baseline methods operate only in 2D, their reconstructions are shown in bird's-eye view (BEV). In both the full 3D reconstruction (4th row) and the BEV down-projection (5th row) of Radar Fields, we observe improved reconstruction quality, especially on occluded and small objects.
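A BEV down-projection of a 3D reconstruction can be sketched as a maximum over the height axis of an occupancy grid, so that an object at any height marks its ground-plane cell. This is a minimal illustration; the grid layout, the `threshold` value, and the toy objects are hypothetical, and the authors' exact projection may differ.

```python
import numpy as np

def bev_projection(occupancy, threshold=0.5):
    """Collapse an (X, Y, Z) occupancy grid to bird's-eye view.

    Takes the maximum occupancy over the height axis, so any occupied voxel in a
    column marks that BEV cell; `threshold` (hypothetical) binarizes the result.
    """
    bev = occupancy.max(axis=2)
    return bev, bev >= threshold

# Toy grid: a low object near the ground and an elevated object in another column
grid = np.zeros((4, 4, 3))
grid[1, 1, 0] = 0.9   # e.g. a car at ground level
grid[2, 3, 2] = 0.7   # e.g. an overhead structure
bev, mask = bev_projection(grid)
print(np.argwhere(mask).tolist())  # → [[1, 1], [2, 3]]
```

The projection discards height, which is what makes the BEV view comparable to the 2D baselines; the full 3D grid keeps the vertical structure that the 4th-row visualization shows.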

Adverse Weather

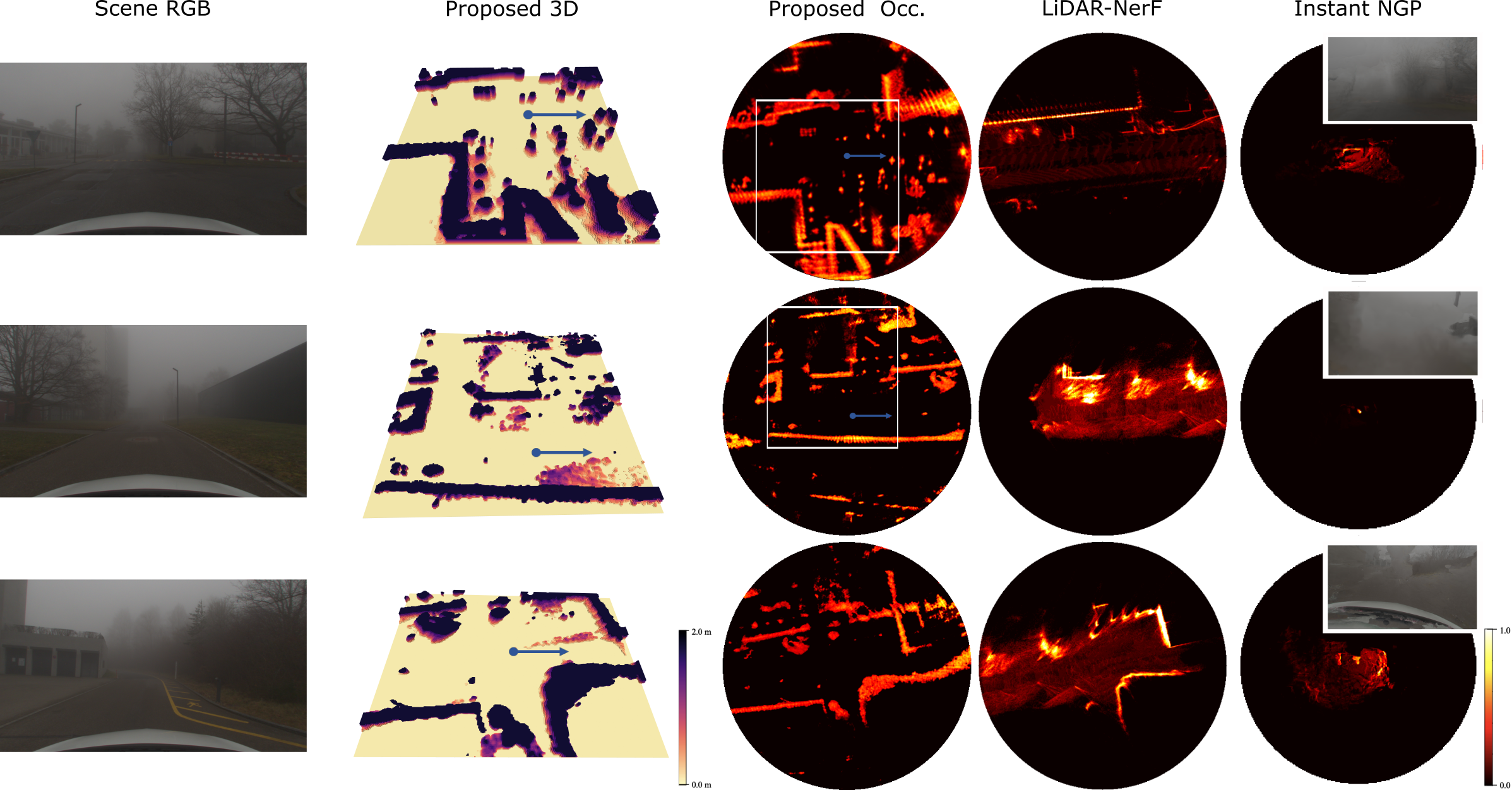

Scattering media (fog and rain particles) severely degrade measurements from optical sensors such as cameras and LiDAR [2]. Radar, however, operates at a much longer wavelength that passes through these particles virtually unaffected. This makes Radar Fields remarkably robust to adverse weather, where RGB- and LiDAR-based reconstruction methods fail. In these challenging conditions, Radar Fields continues to learn dense 3D scene geometry with little loss in performance compared to ideal weather conditions. LiDAR [3] and RGB [4][5] methods, on the other hand, fail to reconstruct the scene with the same range and quality. Below, for example, is a side-by-side comparison of a 3D reconstruction from Radar Fields (trained on radar only) against RGB and depth reconstructions from Nerfacto [5] (trained on RGB only), both trained on the same foggy scene from our dataset.

We compare Radar Fields against state-of-the-art LiDAR- and RGB-based reference methods on a variety of foggy scenes. LiDAR-NeRF [3] suffers from a reduced reconstruction range due to back-scatter, while Radar Fields resolves even small objects at more than three times that range in some directions (top row). Instant-NGP [4], on the other hand, does not converge: it fails to reconstruct any of the scenes and exhibits excessive floaters in its RGB predictions due to fog.
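Why radar's longer wavelength passes through fog can be made concrete with the scattering size parameter $x = 2\pi r/\lambda$: for $x \ll 1$ (Rayleigh regime) scattering efficiency falls off roughly as $x^4$, while for $x \gtrsim 1$ (Mie and geometric regimes) a droplet scatters strongly. The sketch below assumes a 77 GHz radar carrier and a 905 nm LiDAR wavelength (neither is stated in this text) and a typical fog droplet radius of 10 µm; the regime threshold is a rough rule of thumb.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def size_parameter(radius_m, wavelength_m):
    """Scattering size parameter x = 2*pi*r / lambda."""
    return 2.0 * math.pi * radius_m / wavelength_m

def regime(x):
    """Coarse classification; the 0.1 cutoff is a rough rule of thumb."""
    if x < 0.1:
        return "Rayleigh (scattering ~ x^4, weak)"
    return "Mie/geometric (strong scattering)"

droplet_radius = 10e-6         # assumed typical fog droplet radius, 10 um
radar_wavelength = C / 77e9    # assumed 77 GHz carrier, about 3.9 mm
lidar_wavelength = 905e-9      # assumed 905 nm LiDAR

x_radar = size_parameter(droplet_radius, radar_wavelength)
x_lidar = size_parameter(droplet_radius, lidar_wavelength)
print(f"radar: x={x_radar:.3f}, {regime(x_radar)}")
print(f"lidar: x={x_lidar:.1f}, {regime(x_lidar)}")
```

The radar size parameter is more than three orders of magnitude smaller than the LiDAR one, which puts fog droplets deep in the weak Rayleigh regime for radar but in the strongly scattering regime for optical sensors.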

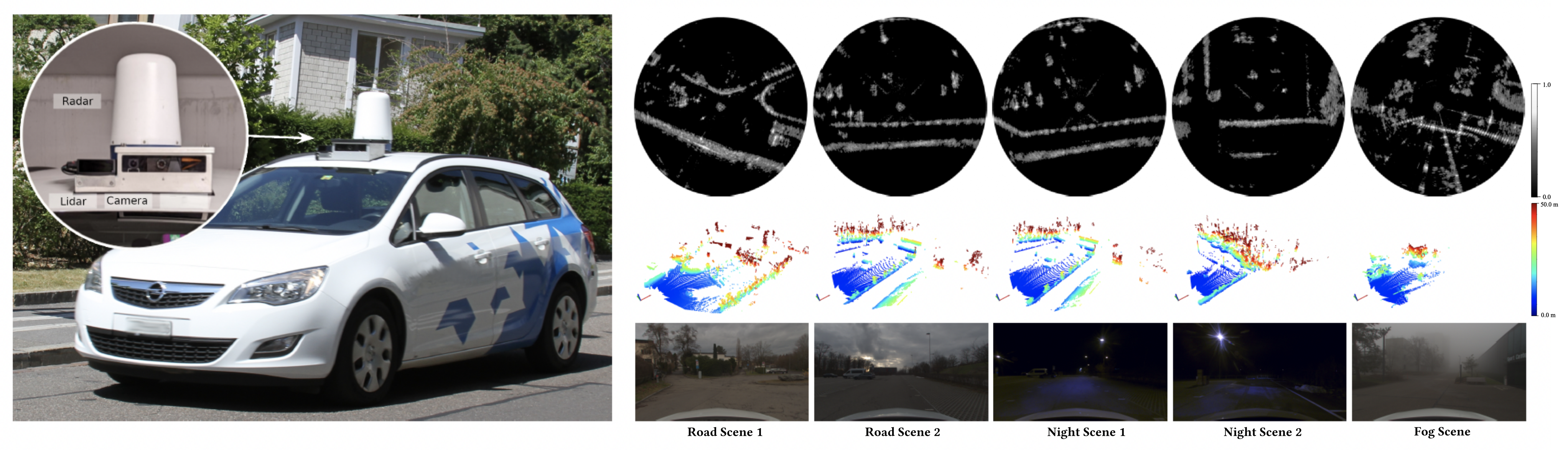

Acquisition and Dataset

We train and validate our method on a novel multi-modal dataset that includes RGB camera, LiDAR, GNSS, and raw radar data. To collect it, we built a custom sensor rig with a custom FMCW radar sensor and high-throughput raw-data readout routines. We then drove extensively through urban areas and selected our highest-quality recordings. Crucially, the sequences in our dataset are diverse, covering low-light, adverse-weather, and ideal conditions. Our FMCW radar, a custom Navtech CIR-DEV, records 360° around the vehicle and provides a long range of 330 m at a range resolution of ca. 4 cm. The camera (a 2 MPix TRI023S-C) and the LiDAR both cover a frontal FOV. We use a state-of-the-art microelectromechanical-system (MEMS) LiDAR (RS-LiDAR-M1) with a horizontal FOV of 120°, as shown in the setup below.
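The quoted radar specifications imply some useful back-of-the-envelope numbers. Assuming the ideal FMCW range-resolution formula $\Delta R = c/(2B)$ (real sensors can deviate because of windowing and processing), a ca. 4 cm resolution corresponds to a sweep bandwidth of roughly 3.75 GHz, and covering 330 m takes about 8250 range bins. Combining this with the 400 azimuth beams per frame shows why radar is considered low-resolution laterally even though it is fine-grained radially.

```python
import math

C = 299_792_458.0  # speed of light, m/s

range_res = 0.04   # m, "ca. 4cm" from the dataset description
max_range = 330.0  # m, maximum range of the radar
n_beams = 400      # azimuth beams per 360-degree frame

bandwidth = C / (2.0 * range_res)                   # ideal FMCW: dR = c / (2B)
n_bins = round(max_range / range_res)               # range bins needed to cover 330 m
az_step_deg = 360.0 / n_beams                       # angular spacing between beams
arc_at_max = max_range * math.radians(az_step_deg)  # lateral beam spacing at max range

print(f"{bandwidth / 1e9:.2f} GHz, {n_bins} bins, {az_step_deg} deg, {arc_at_max:.2f} m")
# → 3.75 GHz, 8250 bins, 0.9 deg, 5.18 m
```

Radially the sensor separates returns about 4 cm apart, but adjacent beams are roughly 5 m apart at maximum range, a gap of more than two orders of magnitude that the beam-integration model has to account for.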

Related Publications

[1] Werber et al., Automotive radar gridmap representations, ICMIM, 2015.

[2] Ramazzina et al., ScatterNeRF: Seeing Through Fog with Physically-Based Inverse Neural Rendering, ICCV, 2023.

[3] Tao et al., LiDAR-NeRF: Novel LiDAR View Synthesis via Neural Radiance Fields, ACM Multimedia, 2024.

[4] Müller et al., Instant Neural Graphics Primitives with a Multiresolution Hash Encoding, ACM Transactions on Graphics, 2022.

[5] Tancik et al., Nerfstudio: A Modular Framework for Neural Radiance Field Development, ACM SIGGRAPH Conference Proceedings, 2023.

[6] Pan et al., Neural Single-Shot GHz FMCW Correlation Imaging, Optics Express, 2024.