Pupil-aware Holography

- Praneeth Chakravarthula

- Seung-Hwan Baek

- Florian Schiffers

- Ethan Tseng

- Grace Kuo

- Andrew Maimone

- Nathan Matsuda

- Oliver Cossairt

- Douglas Lanman

- Felix Heide

SIGGRAPH Asia 2022

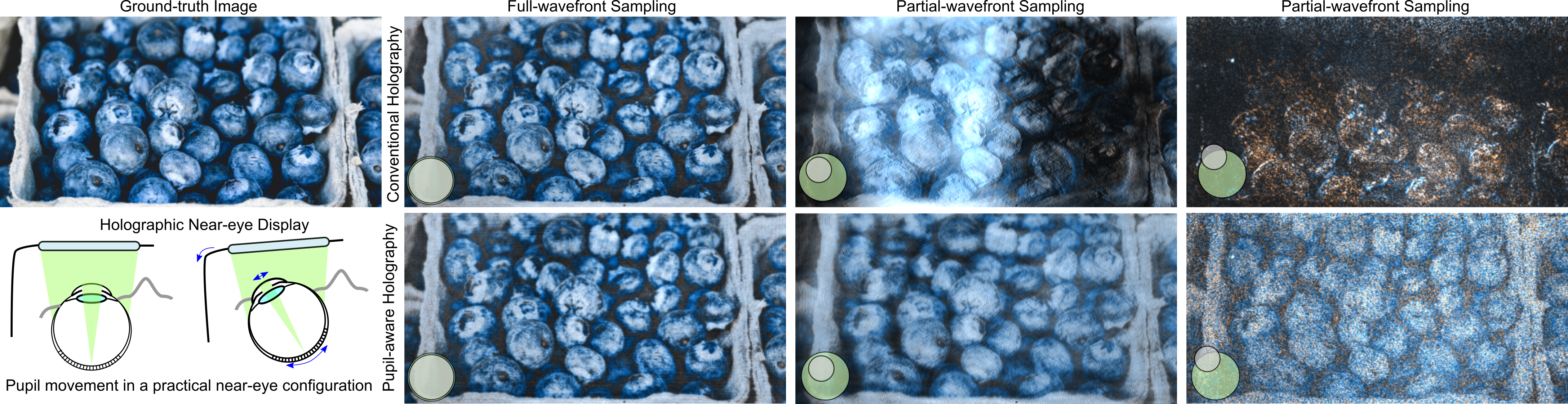

Conventional holographic displays often use a large lens to acquire the entire wavefront for high-fidelity reconstructions, resulting in a tiny effective eyebox. However, near-eye holographic displays pose a unique problem in that the wavefront can only be partially sampled by the moving eye pupil with an unknown location and diameter at any given instant. This results in catastrophic failures such as complete loss of image on existing holographic displays (top-right). This paper presents the first pupil-aware near-eye holography framework that identifies and addresses this pupil-dependency problem, achieving robust reconstructions for arbitrarily partially sampled wavefronts, even at the edge of the eyebox (bottom-right). All of the results shown in this figure are acquired on an experimental hardware prototype.

Holographic displays promise to deliver unprecedented display capabilities in augmented reality applications, featuring a wide field of view, wide color gamut, high spatial resolution, and depth cues, all in a compact form factor. While emerging holographic display approaches have been successful in achieving large étendue and high image quality as seen by a camera, the large étendue also reveals a problem that makes existing displays impractical: the sampling of the holographic field by the eye pupil. Existing methods have not investigated this issue due to the lack of displays with large enough étendue, and, as such, they suffer from severe artifacts as the eye pupil size and location vary. We show that the holographic field sampled by the eye pupil is highly varying for existing display setups. We propose pupil-aware holography that maximizes the perceptual image quality irrespective of the eye pupil's size, location, and orientation in a near-eye holographic display. We validate the proposed approach both in simulations and on a prototype holographic display and show that our method eliminates severe artifacts and significantly outperforms existing approaches.

Paper

Praneeth Chakravarthula, Seung-Hwan Baek, Florian Schiffers, Ethan Tseng, Grace Kuo, Andrew Maimone, Nathan Matsuda, Oliver Cossairt, Douglas Lanman, Felix Heide

Pupil-aware Holography

SIGGRAPH Asia 2022

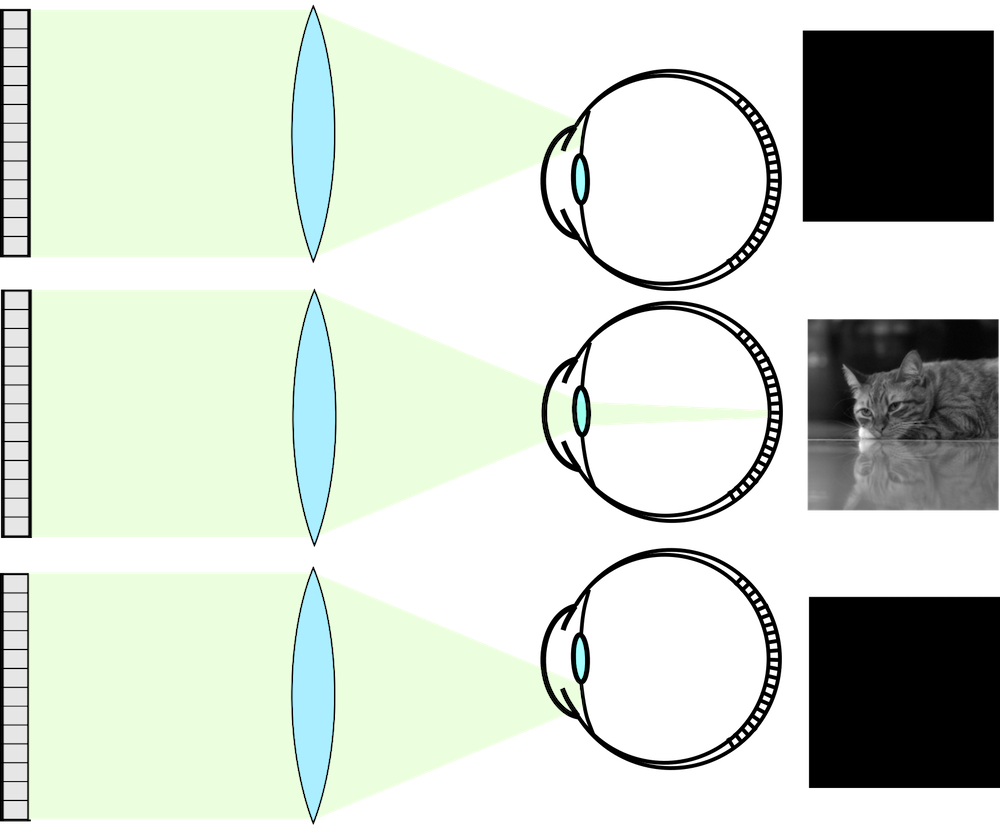

Conventional holographic displays focus the SLM-modulated light down to a point on the pupil plane, resulting in a tiny eyebox (middle). This approach severely restricts the “valid” region where the eyes can see the holographic image: no imagery is viewable outside of the small eyebox region (top and bottom) that is mandated by the limited étendue of today’s holographic systems.

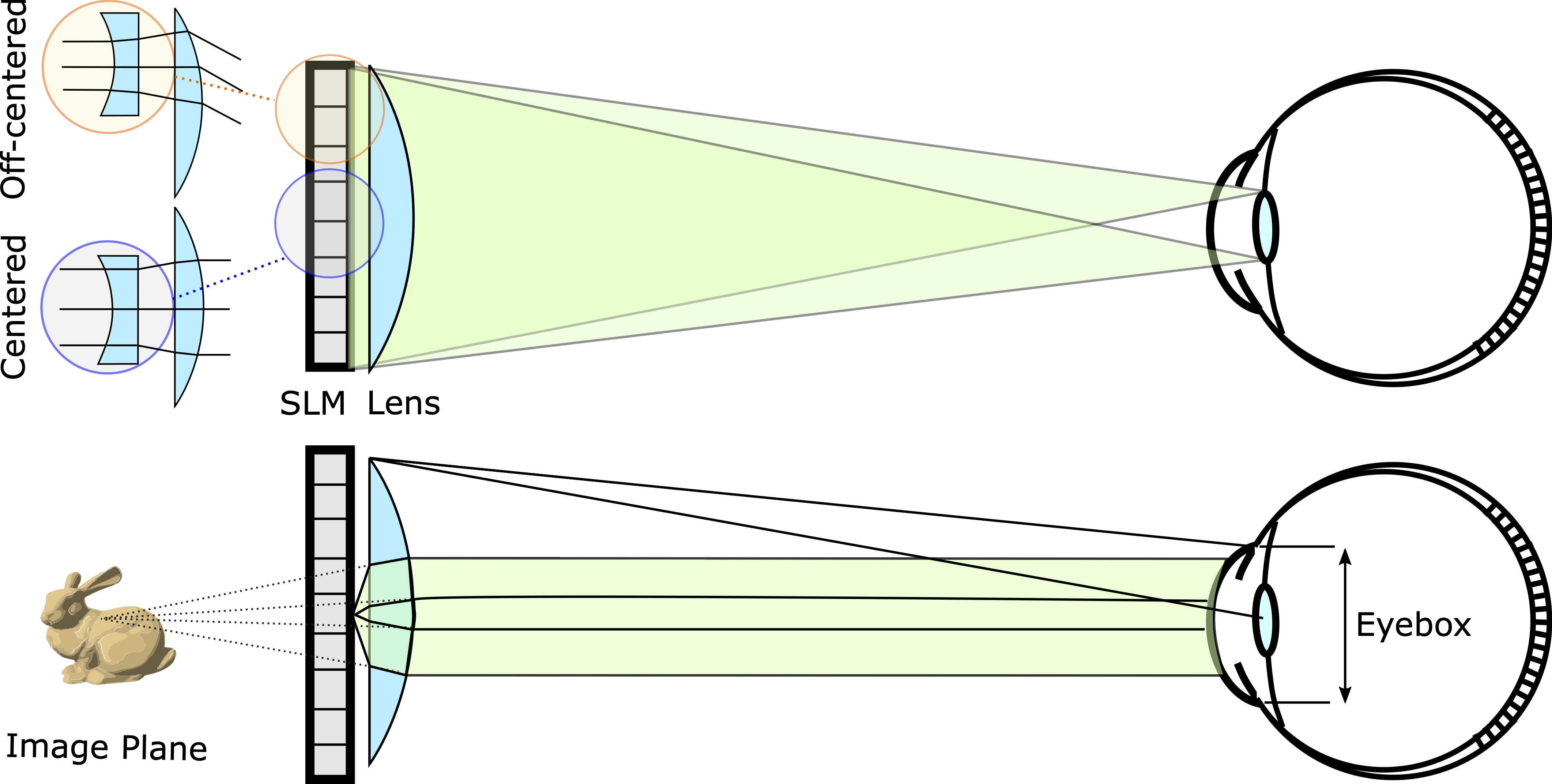

To evaluate the proposed pupil-aware holographic phase retrieval, we combine a large physical lens with local lenslets displayed on the SLM (top) to bias the rays toward the eye. This yields a prototype display testbed with a sufficiently large field of view and a wide eyebox. The SLM phase of each lenslet is the conjugate of the phase of the large physical ray-biasing lens, so the lenslet array is a spatially shifted array of conjugate phases of the large physical lens. Their combination results in plane waves leaving the SLM-lens system (bottom), thereby creating a virtual image at optical infinity. While other configurations are possible, the proposed design ensures smooth transitions at the boundaries of the local lenslets displayed on the SLM, and ray biasing without chromatic artifacts.
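The plane-wave claim can be checked with a short calculation. This is only a sketch under assumptions that are not stated on this page: both lenses are modeled as paraxial thin lenses with the same focal length $f$, the physical lens and the SLM are treated as co-located thin elements, and the symbols $\lambda$ (wavelength), $\mathbf{x}$ (position on the SLM), and $\mathbf{c}_j$ (center of lenslet $j$) are introduced here for illustration.

```latex
\begin{aligned}
\phi_{\text{lens}}(\mathbf{x}) &= -\frac{\pi}{\lambda f}\,\lVert \mathbf{x} \rVert^2, \\
\phi_j(\mathbf{x}) &= +\frac{\pi}{\lambda f}\,\lVert \mathbf{x} - \mathbf{c}_j \rVert^2, \\
\phi_{\text{lens}}(\mathbf{x}) + \phi_j(\mathbf{x})
  &= \frac{\pi}{\lambda f}\left( \lVert \mathbf{c}_j \rVert^2 - 2\,\mathbf{c}_j \cdot \mathbf{x} \right).
\end{aligned}
```

Under these assumptions the quadratic terms cancel, and the remaining phase is linear in $\mathbf{x}$: within lenslet $j$ the outgoing field is a plane wave whose tilt is set by $\mathbf{c}_j / f$, plus a constant offset. A set of plane waves is consistent with a virtual image at optical infinity.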

Method Overview

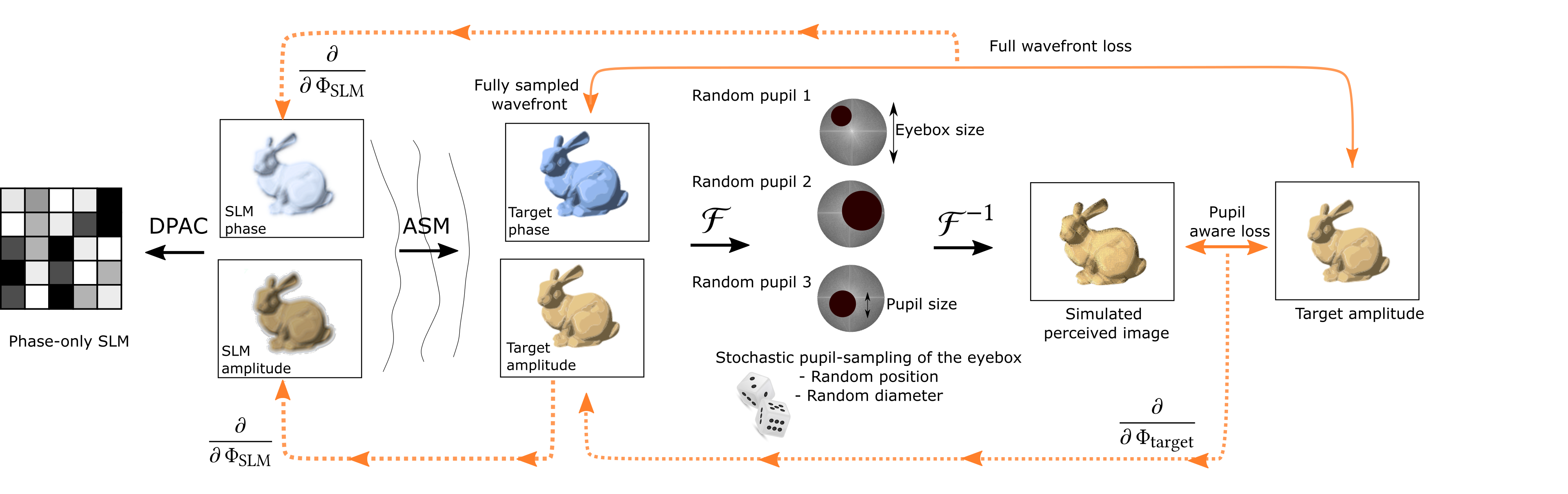

We optimize phase-only holograms to produce high-fidelity reconstructions and a well-distributed energy across the eyebox. To this end, we rely on a differentiable image formation model that explicitly considers the eye pupil sampling of the eyebox, allowing us to backpropagate an intensity reconstruction loss to the SLM phase pattern at each step of our iterative optimization. By stochastically sampling pupils during this optimization, the proposed method achieves both image quality and energy distribution over the entire eyebox.
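The optimization loop described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation. It assumes that the pupil acts as a binary circular mask on the angular spectrum of the SLM field, that free-space propagation is modeled with the angular spectrum method, that the loss is a plain mean squared error on intensity with no energy normalization, and that plain gradient descent is used. All function names, the pupil sampling ranges, and the default parameters (`pitch`, `wavelength`, `z`, `lr`) are hypothetical.

```python
import numpy as np

def asm_kernel(n, pitch, wavelength, z):
    """Angular-spectrum transfer function for distance z (evanescent waves dropped)."""
    f = np.fft.fftfreq(n, d=pitch)
    fx, fy = np.meshgrid(f, f, indexing="xy")
    arg = 1.0 / wavelength**2 - fx**2 - fy**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    return np.exp(1j * kz * z) * (arg > 0)

def pupil_mask(n, center, radius):
    """Binary circular pupil on the unshifted frequency grid, in units of bins."""
    k = np.fft.fftfreq(n) * n  # integer frequency indices, DC at [0, 0]
    kx, ky = np.meshgrid(k, k, indexing="xy")
    return ((kx - center[0])**2 + (ky - center[1])**2 <= radius**2).astype(float)

def loss_and_grad(phase, target, mask, kernel):
    """Intensity MSE seen through one pupil, and its gradient w.r.t. the SLM phase."""
    u = np.exp(1j * phase)                      # phase-only SLM field
    g = mask * kernel                           # pupil-filtered transfer function
    w = np.fft.ifft2(g * np.fft.fft2(u, norm="ortho"), norm="ortho")
    resid = np.abs(w)**2 - target
    loss = np.mean(resid**2)
    # Backpropagation by hand: adjoint of the linear filter, then chain rule on exp(i*phase).
    g_w = (2.0 / resid.size) * resid * w
    g_u = np.fft.ifft2(np.conj(g) * np.fft.fft2(g_w, norm="ortho"), norm="ortho")
    grad = 2.0 * np.imag(g_u * np.conj(u))
    return loss, grad

def optimize(target, steps=100, lr=5.0, seed=0,
             pitch=8e-6, wavelength=520e-9, z=5e-3):
    """Gradient descent on the SLM phase with one random pupil per step."""
    rng = np.random.default_rng(seed)
    n = target.shape[0]
    kernel = asm_kernel(n, pitch, wavelength, z)
    phase = rng.uniform(-np.pi, np.pi, size=target.shape)
    for _ in range(steps):
        center = rng.uniform(-n / 4, n / 4, size=2)   # assumed pupil position range
        radius = rng.uniform(n / 8, n / 4)            # assumed pupil size range
        mask = pupil_mask(n, center, radius)
        _, grad = loss_and_grad(phase, target, mask, kernel)
        phase -= lr * grad
    return phase
```

Because a different pupil is drawn at every step, the phase pattern is pushed toward solutions that reconstruct the target for many pupil positions and sizes rather than for a single fixed aperture. In practice an automatic differentiation framework would replace the hand-written gradient.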

Eyebox Energy Distribution

Traditional non-pupil-aware holographic phase retrieval assumes an unknown phase on the image plane, which is typically either random (top row) or smooth (middle row). A random object phase on the image plane generates a uniform eyebox energy, but the reconstructed image is corrupted with extreme noise (top row). A smooth, uniform object phase, on the other hand, concentrates the energy at the center of the eyebox. This gives noise-free reconstructions at the center of the eyebox but loss of image as the pupil moves away from it, and therefore a tiny effective eyebox (middle row). The proposed pupil-aware holography achieves high-fidelity reconstructions by distributing the energy across the eyebox (bottom row).
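The contrast between the two object-phase choices can be reproduced numerically. The sketch below assumes that the eyebox plane is the Fourier transform of the complex image-plane field, and it uses a synthetic smooth test image; the helper names and the size of the "central" window are hypothetical choices for illustration.

```python
import numpy as np

def eyebox_energy(amplitude, object_phase):
    """Energy in the eyebox plane, modeled as the centered 2D FFT of the image field."""
    field = amplitude * np.exp(1j * object_phase)
    spectrum = np.fft.fftshift(np.fft.fft2(field, norm="ortho"))
    return np.abs(spectrum)**2

def central_fraction(energy, half_width):
    """Fraction of the total energy inside a small square window around the center."""
    c = energy.shape[0] // 2
    core = energy[c - half_width:c + half_width + 1,
                  c - half_width:c + half_width + 1]
    return core.sum() / energy.sum()

rng = np.random.default_rng(0)
n = 128
yy, xx = np.mgrid[0:n, 0:n]
# Synthetic smooth target amplitude in [0, 1] (stand-in for a real image).
amplitude = 0.5 + 0.5 * np.sin(2 * np.pi * xx / 32) * np.sin(2 * np.pi * yy / 32)

uniform_frac = central_fraction(eyebox_energy(amplitude, np.zeros((n, n))), 4)
random_frac = central_fraction(
    eyebox_energy(amplitude, rng.uniform(-np.pi, np.pi, (n, n))), 4)

print(f"uniform phase: {uniform_frac:.3f} of the energy near the eyebox center")
print(f"random phase:  {random_frac:.3f} of the energy near the eyebox center")
```

With a uniform phase nearly all of the energy falls in the small central window, so a pupil away from the center receives almost no light. With a random phase the energy is spread over the whole plane, at the cost of speckle noise in the reconstruction.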

Experimental Evaluation

We densely sample the eyebox (top) to study the image quality as the pupil traverses the eyebox. Conventional holographic methods produce high-fidelity imagery at the center of the eyebox by concentrating the light energy there. As a result, conventional non-pupil-aware methods result in complete loss of imagery as the pupil moves away from the center (middle). Pupil-aware holography maintains the imagery throughout the eyebox, as shown in the bottom row.

3D Holography

We demonstrate that the proposed method can also be extended to 3D holography. To this end, we show multiplane 3D holographic projections on an experimental prototype. That is, using the proposed method we optimize a single SLM pattern to simultaneously project imagery at both near and far distances. The images shown here are measured only by changing the camera focus, and the corresponding in-focus and out-of-focus imagery can be observed in the insets.
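A multiplane objective of this kind can be sketched as a sum of per-plane intensity losses on a single SLM pattern. The code below is an assumption-laden illustration rather than the method used on the prototype: it uses angular spectrum propagation, a plain mean squared error per plane, and omits the pupil sampling for brevity. The function names, pixel pitch, wavelength, and distances are hypothetical.

```python
import numpy as np

def propagate(field, z, pitch, wavelength):
    """Angular spectrum propagation of a sampled complex field over distance z."""
    n_y, n_x = field.shape
    fx = np.fft.fftfreq(n_x, d=pitch)
    fy = np.fft.fftfreq(n_y, d=pitch)
    fxx, fyy = np.meshgrid(fx, fy, indexing="xy")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.exp(1j * kz * z) * (arg > 0)  # evanescent components dropped
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def multiplane_loss(phase, targets, distances, pitch=8e-6, wavelength=520e-9):
    """Mean intensity MSE over several target planes for one phase-only SLM pattern."""
    slm_field = np.exp(1j * phase)
    losses = []
    for target, z in zip(targets, distances):
        intensity = np.abs(propagate(slm_field, z, pitch, wavelength))**2
        losses.append(np.mean((intensity - target)**2))
    return float(np.mean(losses))

# Usage: one phase pattern scored against a near and a far target plane.
rng = np.random.default_rng(0)
phase = rng.uniform(-np.pi, np.pi, (64, 64))
near_target = rng.uniform(0.0, 1.0, (64, 64))
far_target = rng.uniform(0.0, 1.0, (64, 64))
loss = multiplane_loss(phase, [near_target, far_target], [5e-3, 2e-2])
```

Minimizing such a loss over `phase` asks one SLM pattern to form the near image at the near distance and the far image at the far distance at the same time, which matches the refocusing behavior described above.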

Related Publications

[1] Praneeth Chakravarthula, Ethan Tseng, Henry Fuchs and Felix Heide. Hogel-free Holography. ACM Transactions on Graphics (TOG), 2020.

[2] Praneeth Chakravarthula, Ethan Tseng, Tarun Srivastava, Henry Fuchs and Felix Heide. Learned Hardware-in-the-loop Phase Retrieval for Holographic Near-Eye Displays. ACM Transactions on Graphics (TOG), 2020.

[3] Praneeth Chakravarthula, Yifan Peng, Joel Kollin, Henry Fuchs and Felix Heide. Wirtinger Holography for Near-Eye Displays. ACM Transactions on Graphics (TOG), 2019.