Artifact-Resilient Real-Time Holography

Holographic near-eye displays are a promising technology for augmented and virtual reality, but their practical application is limited by a failure to account for real-world viewing conditions, where obstructions like eyelashes and eye floaters can severely degrade image quality. Here, we address this challenge by demonstrating that the vulnerability of current methods stems from eyeboxes dominated by low frequencies typical in smooth-phase holograms, whereas random-phase holograms exhibit greater resilience. We introduce a differentiable metric, derived from the Rayleigh distribution, to quantify this phase randomness and leverage it to train a real-time neural phase generator. The resulting system produces artifact-resilient 3D holograms that maintain high visual fidelity under dynamic obstructions.

Victor Chu, Oscar Pueyo-Ciutad, Ethan Tseng, Florian Schiffers, Nathan Matsuda, Grace Kuo, Albert Redo-Sanchez, Douglas Lanman, Oliver Cossairt, Felix Heide

SIGGRAPH Asia 2025 (Journal)

Obstructions in Random vs. Smooth Phase Holography

Recent camera-in-the-loop (CITL) optimization has enabled holographic displays to correct for static aberrations like dust and imperfect lenses, producing high-quality 3D images. However, this approach fails when faced with dynamic obstructions such as eyelashes, which can completely block the view. This limitation highlights a fundamental trade-off in hologram generation between a smooth phase pattern and a more random phase. Historically, smooth phase has been preferred for its ability to create images with high contrast and minimal speckle. Its critical drawback is that it concentrates light into a very small viewing window, or “eyebox,” making the hologram fragile and easily blocked. A more random phase, in contrast, distributes light over a wider angle, allowing the image to diffract around unanticipated obstacles.
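To make the eyebox argument concrete, here is a minimal NumPy sketch. It assumes the eyebox plane can be modelled as the 2D Fourier transform of a unit-amplitude SLM field, and it models an obstruction as a small opaque square at the eyebox center. The function names, grid size, and obstruction size are illustrative choices, not taken from the paper.

```python
import numpy as np

def eyebox_intensity(phase):
    """Eyebox intensity, modelled (assumption) as |FFT| squared of the SLM field."""
    field = np.exp(1j * phase)  # unit-amplitude, phase-only field
    eyebox = np.fft.fftshift(np.fft.fft2(field, norm="ortho"))
    return np.abs(eyebox) ** 2

def blocked_fraction(intensity, half_width):
    """Fraction of eyebox energy hit by a centered square obstruction."""
    c = intensity.shape[0] // 2  # fftshift places the zero frequency here
    lo, hi = c - half_width, c + half_width + 1
    return float(intensity[lo:hi, lo:hi].sum() / intensity.sum())

rng = np.random.default_rng(0)
n = 128  # hypothetical SLM resolution

smooth_phase = np.zeros((n, n))                       # constant phase
random_phase = rng.uniform(0.0, 2.0 * np.pi, (n, n))  # uniformly random phase

smooth_blocked = blocked_fraction(eyebox_intensity(smooth_phase), half_width=4)
random_blocked = blocked_fraction(eyebox_intensity(random_phase), half_width=4)

print(f"constant phase: {smooth_blocked:.3f} of the energy is blocked")
print(f"random phase:   {random_blocked:.3f} of the energy is blocked")
```

Under these assumptions, the constant-phase hologram loses essentially all of its light to the obstruction, while the random-phase hologram loses only about the obstruction's share of the eyebox area.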

Introducing: Rayleigh Distance for Quantifying Phase Randomness

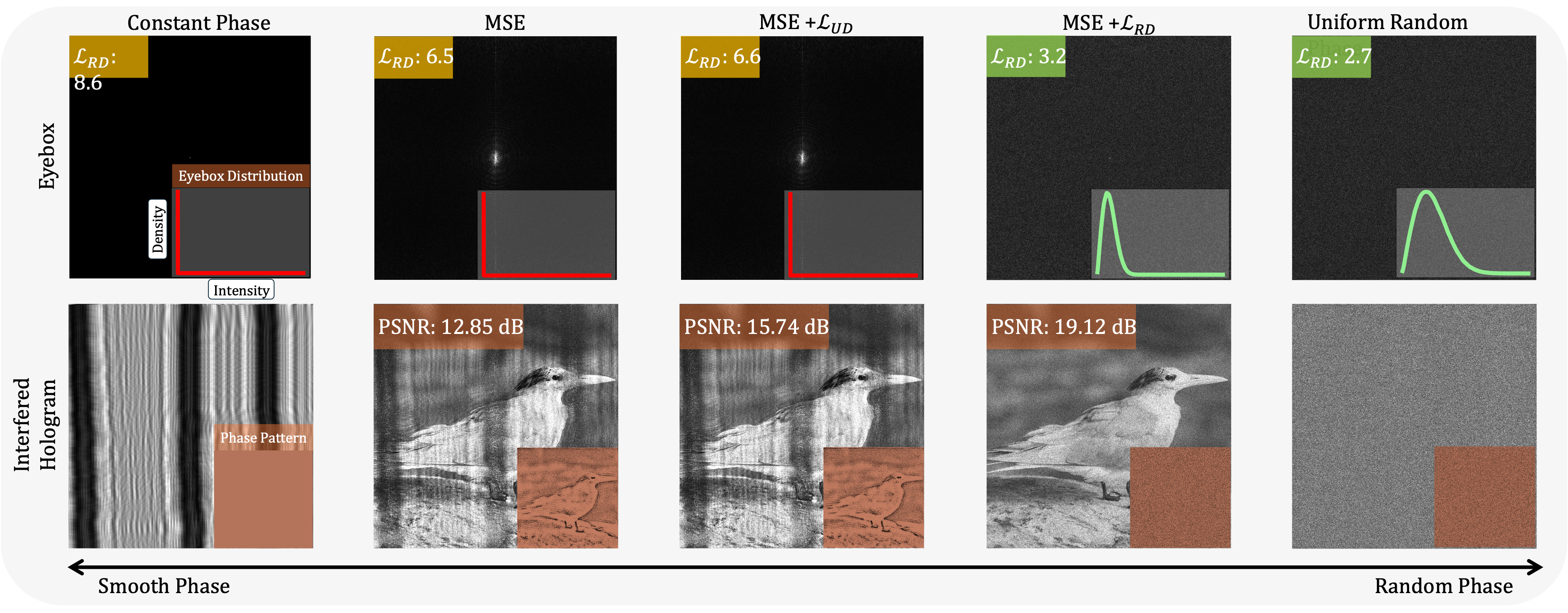

Although random phase naturally leads to artifact-resilient holograms, the notion becomes ill-defined in settings such as neural-network-based CGH, where no explicit “phase initialization” exists. To address this, we introduce a metric for phase randomness that computes a patch-wise similarity between a hologram’s eyebox intensity and that of a reference random-phase hologram, whose eyebox intensity follows a Rayleigh distribution. We denote this loss by $\mathcal{L}_{RD}$ (Rayleigh Distance). Below, we contrast a constant-phase hologram (left) with several optimized variants: (i) constant phase with MSE loss only; (ii) constant phase with MSE plus a uniform-intensity eyebox loss, $\mathcal{L}_{UD}$; (iii) constant phase with $\mathcal{L}_{RD}$; and, as an upper reference, (iv) an unoptimized hologram initialized with uniformly random phase (right). Empirically, $\mathcal{L}_{RD}$ steers optimization toward solutions exhibiting higher phase randomness and a broader energy distribution in the eyebox, even when starting from constant phase. Moreover, we observe that full randomness is not required for artifact resilience: partially randomized solutions (second from right) already provide substantial protection.
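The exact form of $\mathcal{L}_{RD}$ is not given here, so the following is only a rough sketch of what a patch-wise comparison against a Rayleigh reference could look like. It makes several assumptions: the eyebox is modelled as the Fourier transform of the SLM field, the comparison is done on the sorted eyebox amplitudes of each patch against Rayleigh quantiles, and the patch size and the name `rayleigh_distance` are hypothetical. Whether the actual metric operates on amplitude or intensity, and how it measures similarity, may differ.

```python
import numpy as np

def eyebox_amplitude(phase):
    """Eyebox amplitude, modelled (assumption) as |FFT| of the SLM field."""
    field = np.exp(1j * phase)
    return np.abs(np.fft.fftshift(np.fft.fft2(field, norm="ortho")))

def rayleigh_quantiles(count, sigma):
    """Quantiles of a Rayleigh distribution at `count` midpoint probabilities."""
    p = (np.arange(count) + 0.5) / count
    return sigma * np.sqrt(-2.0 * np.log1p(-p))

def rayleigh_distance(phase, patch=8):
    """Illustrative patch-wise distance to a Rayleigh reference (not the paper's)."""
    amp = eyebox_amplitude(phase)
    n = amp.shape[0]  # assumes a square grid divisible by `patch`
    # Rayleigh scale matched to the total eyebox energy: E[a^2] = 2 * sigma^2.
    sigma = np.sqrt(np.mean(amp ** 2) / 2.0)
    # Split into non-overlapping patches and sort the samples in each patch.
    blocks = amp.reshape(n // patch, patch, n // patch, patch).swapaxes(1, 2)
    samples = np.sort(blocks.reshape(-1, patch * patch), axis=1)
    target = rayleigh_quantiles(patch * patch, sigma)
    # Mean squared gap between empirical and Rayleigh quantiles over all patches.
    return float(np.mean((samples - target) ** 2))

rng = np.random.default_rng(0)
n = 64
base = rng.uniform(0.0, 2.0 * np.pi, (n, n))

rd_constant = rayleigh_distance(np.zeros((n, n)))  # smooth (constant) phase
rd_partial = rayleigh_distance(0.5 * base)         # phase limited to [0, pi)
rd_random = rayleigh_distance(base)                # fully random phase

print(rd_constant, rd_partial, rd_random)
```

In this toy setting the distance shrinks as the phase becomes more random, which is the qualitative behavior the text describes. For training, the same computation would be written in an automatic-differentiation framework, where sorting is differentiable almost everywhere.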

Real-Time Artifact-Resilient Holography

Using $\mathcal{L}_{RD}$, we train a neural-network-based CGH phase retrieval engine that outputs artifact-resilient holograms in real time. We call our method Real-Time ARH. We compare HoloNet with our Real-Time ARH on a quantized phase light modulator (TI DLP6750Q1EVM). Without obstructions, Real-Time ARH produces better visual quality, because HoloNet requires camera-in-the-loop calibration to overcome the hardware imperfections of the real setup, whereas our method, which relies on random-phase holography, is naturally more robust to them. When obstructions such as eyelashes are introduced, HoloNet holograms are distorted. Real-Time ARH, in contrast, remains artifact-resilient, since random-phase holography allows light to scatter around obstructions. These experimental captures use 8-frame time multiplexing per channel.
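The text does not say why time multiplexing is used. A common motivation with random-phase holograms is speckle averaging, and the toy simulation below illustrates that effect only. It assumes each frame is an independent, fully developed speckle pattern, generated here as the far field of a uniformly random phase; the function names and grid size are hypothetical and the setup does not model the actual display.

```python
import numpy as np

def speckle_frame(n, rng):
    """One speckle intensity pattern from a uniformly random phase (toy model)."""
    phase = rng.uniform(0.0, 2.0 * np.pi, (n, n))
    field = np.fft.fft2(np.exp(1j * phase), norm="ortho")
    return np.abs(field) ** 2

def speckle_contrast(intensity):
    """Speckle contrast: standard deviation divided by mean intensity."""
    return float(intensity.std() / intensity.mean())

rng = np.random.default_rng(0)
n, frames = 256, 8  # 8 frames, matching the time multiplexing described above

single = speckle_frame(n, rng)
averaged = np.mean([speckle_frame(n, rng) for _ in range(frames)], axis=0)

contrast_single = speckle_contrast(single)
contrast_averaged = speckle_contrast(averaged)

print(f"single frame contrast:    {contrast_single:.3f}")
print(f"8-frame average contrast: {contrast_averaged:.3f}")
```

For independent frames, the contrast of the averaged image falls from about 1 to about $1/\sqrt{8}$, which is the standard result for averaging uncorrelated speckle patterns.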

Training Artifact-Resilient Hologram Networks

We characterize the energy distribution within a random-phase eyebox using the Rayleigh distribution. This allows us to define a novel, differentiable metric, the “Rayleigh Distance,” which we use to train a real-time, neural-network-based phase generator. We demonstrate that, over 40 training epochs, our metric prevents the network from defaulting to fragile smooth-phase solutions and instead drives it to converge on robust, artifact-resilient holograms.
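For reference, the Rayleigh density with scale parameter $\sigma$ is shown below, together with one plausible form of the training objective. The weight $\lambda$ and the additive combination with an image-reconstruction term $\mathcal{L}_{\mathrm{MSE}}$ are assumptions for illustration; this page does not state how the terms are balanced.

$$
p(x;\sigma) = \frac{x}{\sigma^{2}} \exp\!\left(-\frac{x^{2}}{2\sigma^{2}}\right), \quad x \ge 0,
\qquad
\mathcal{L} = \mathcal{L}_{\mathrm{MSE}} + \lambda\,\mathcal{L}_{RD}
$$

In such a formulation, a larger $\lambda$ would push the network toward more random-like eyebox statistics, at some cost to the reconstruction term.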

Smartphone Captures of 3D Results

To test for artifact resilience, we compute holograms from 3D scenes using 8-frame time multiplexing per channel and capture them with a smartphone camera that is partially obstructed by eyelashes. The experiment highlights differences between the two methods. For holograms generated by HoloNet, the obstruction causes visible artifacts in the captured image. In contrast, the images captured from our Real-Time ARH show fewer artifacts, as the method allows light to scatter around the obstruction. Additionally, our method renders defocus blur consistent with the 3D scene, while the HoloNet implementation used for comparison does not produce this effect.
