Seeing With Sound: Acoustic Beamforming for Multimodal Scene Understanding

- Praneeth Chakravarthula

- Jim Aldon D'Souza

- Ethan Tseng

- Joe Bartusek

- Felix Heide

CVPR 2023

Mobile robots, including autonomous vehicles, rely heavily on sensors that use electromagnetic radiation, such as lidars, radars, and cameras, for perception. While effective in most scenarios, these sensors can be unreliable in unfavorable environmental conditions, including low-light scenarios and adverse weather, and they can only detect obstacles within their direct line of sight. Audible sound from other road users propagates as acoustic waves that carry information even in challenging scenarios. However, the low spatial resolution and lack of directional information of acoustic measurements have made sound an overlooked sensing modality.

In this work, we introduce long-range acoustic beamforming of sound produced by road users in the wild as a complementary sensing modality to traditional electromagnetic radiation-based sensors. To validate our approach and encourage further work in the field, we also introduce the first-ever multimodal long-range acoustic beamforming dataset. We propose a neural aperture expansion method for beamforming and demonstrate its effectiveness for multimodal automotive object detection when coupled with RGB images in challenging automotive scenarios, where camera-only approaches fail or cannot match the ultra-fast sampling rates of acoustic sensing.

Paper

Seeing With Sound: Long-range Acoustic Beamforming for Multimodal Scene Understanding

Praneeth Chakravarthula, Jim Aldon D’Souza, Ethan Tseng, Joe Bartusek, Felix Heide

CVPR 2023

Multimodal Beamforming Dataset

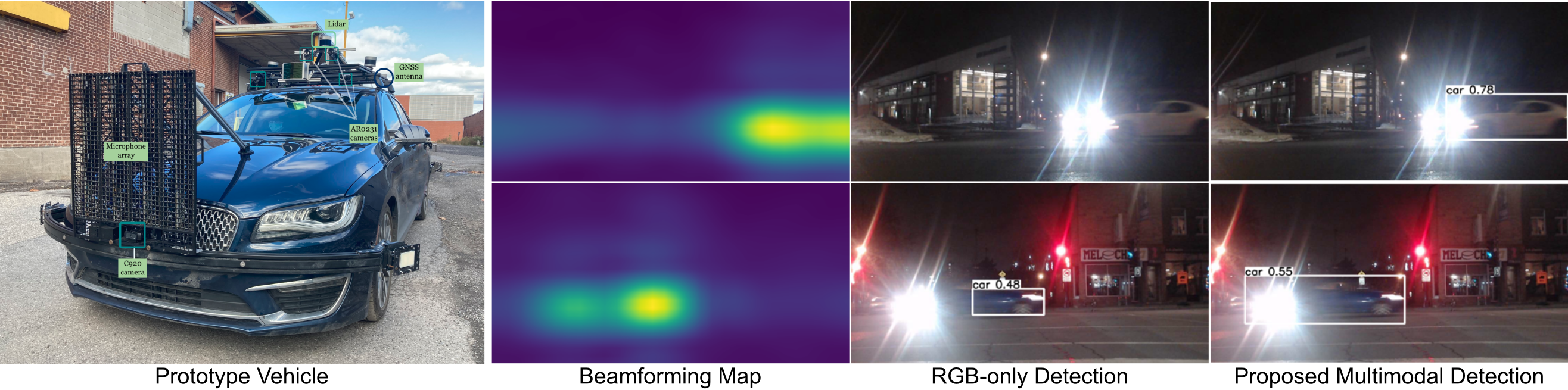

To assess long-range acoustic beamforming for automotive scene understanding, we have acquired a large dataset of roadside noise along with ambient scene information captured by an RGB camera, lidar, global positioning system (GPS) and an inertial measurement unit (IMU), and annotated by experts.

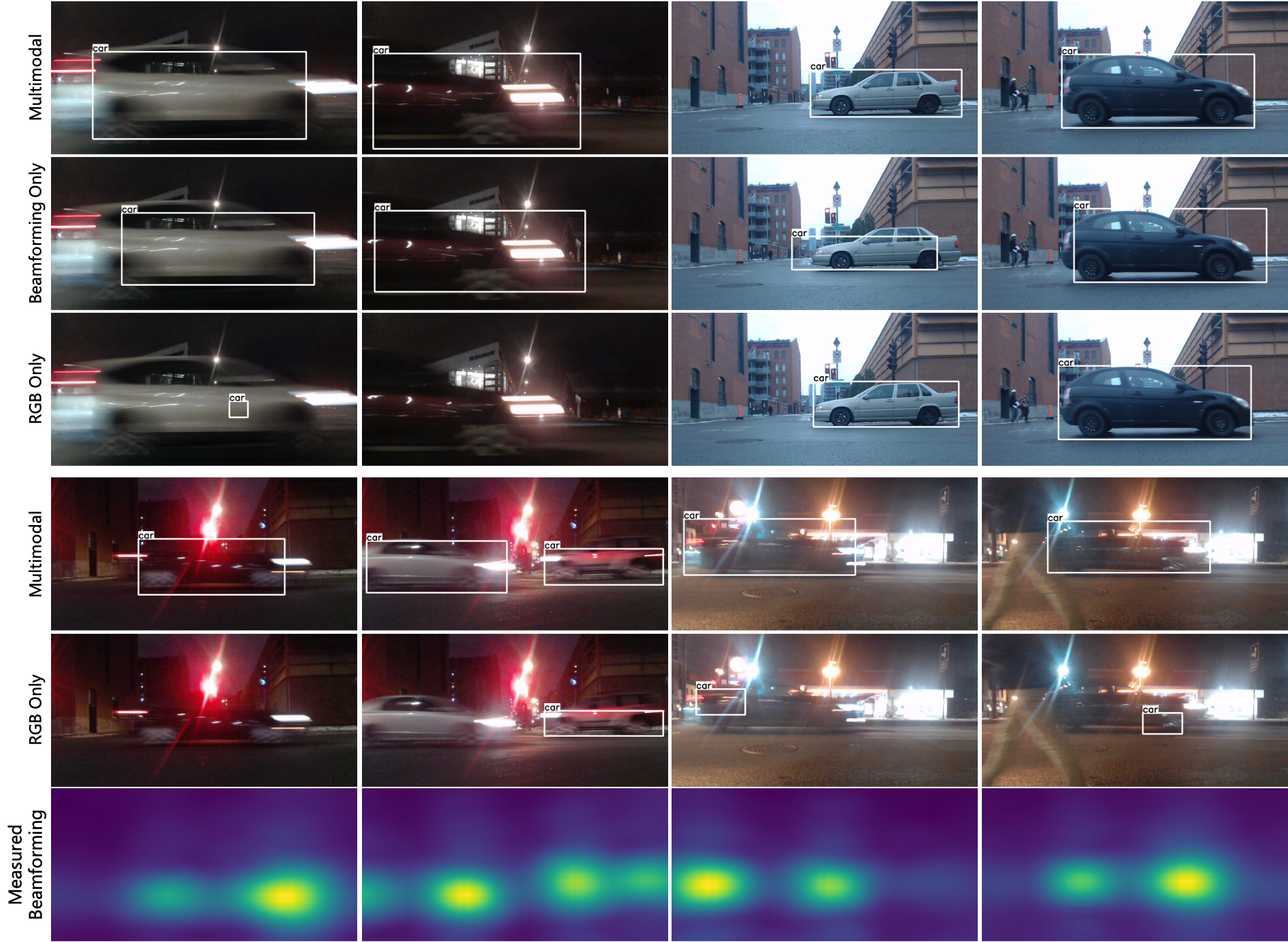

We collect a large dataset of acoustic pressure signals from road traffic, along with camera, lidar, GPS and IMU sensory streams. Our data consists of a variety of traffic scenes with multiple object instances. The raw sound signals are processed for a range of frequency bands. A snapshot visualization of the dataset, with beamforming maps overlaid on RGB images, is shown above.
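As a rough illustration of what processing a signal for a range of frequency bands can look like, the sketch below splits a single-channel recording into bands by masking its spectrum. The function name `split_into_bands`, the sample rate, the band edges, and the synthetic two-tone test signal are all assumptions made for this example; they are not taken from the dataset or from the authors' pipeline.

```python
import numpy as np

def split_into_bands(signal, sample_rate_hz, band_edges_hz):
    """Split a real 1-D signal into band-limited copies via FFT masking.

    band_edges_hz is an increasing list of edges; band i covers
    [band_edges_hz[i], band_edges_hz[i + 1]). Hypothetical helper.
    """
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate_hz)
    bands = []
    for low, high in zip(band_edges_hz[:-1], band_edges_hz[1:]):
        mask = (freqs >= low) & (freqs < high)
        # Zero every bin outside the band, then return to the time domain.
        bands.append(np.fft.irfft(spectrum * mask, n=len(signal)))
    return np.stack(bands)

# Synthetic one-second test signal (assumed values): tones at 500 Hz and 3 kHz.
sample_rate_hz = 16000
t = np.arange(sample_rate_hz) / sample_rate_hz
signal = np.sin(2 * np.pi * 500 * t) + 0.5 * np.sin(2 * np.pi * 3000 * t)

bands = split_into_bands(signal, sample_rate_hz, [0, 1000, 2000, 4000, 8000])
band_energy = (bands ** 2).mean(axis=1)  # mean power per band
```

In a multi-microphone setting, the same split would be applied to every channel before computing one beamforming map per band.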

Overview of the Framework

Roadside noise is measured by a microphone array, and a beamforming map of the acoustic signals is computed as a complementary modality to existing photon-based sensor stacks. A trained neural network translates the multimodal signals into features that can be used for downstream tasks such as object detection and predicting a future RGB camera frame.
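To make the idea of a beamforming map concrete, here is a minimal sketch of classical narrowband delay-and-sum beamforming. It is a textbook technique shown under simplifying assumptions (a uniform linear array, a single far-field source, one frequency), not the authors' implementation. The array geometry, the 2 kHz frequency, the simulated source, and all function names are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at room temperature

def steering_vector(mic_x, angle_rad, freq_hz, c=SPEED_OF_SOUND):
    """Far-field phase factors for microphones at positions mic_x (metres) on a line."""
    delays = mic_x * np.sin(angle_rad) / c
    return np.exp(-2j * np.pi * freq_hz * delays)

def delay_and_sum_map(snapshots, mic_x, freq_hz, scan_angles):
    """Beamformed power for each scan angle.

    snapshots: complex array of shape (num_mics, num_snapshots) holding
    narrowband samples at freq_hz.
    """
    power = np.empty(len(scan_angles))
    for i, theta in enumerate(scan_angles):
        weights = steering_vector(mic_x, theta, freq_hz) / len(mic_x)
        output = weights.conj() @ snapshots  # phase-align the channels and average
        power[i] = np.mean(np.abs(output) ** 2)
    return power

def simulate_source(mic_x, angle_rad, freq_hz, num_snapshots=200, noise_std=0.1, seed=0):
    """Synthetic data: one random far-field source plus sensor noise (assumed model)."""
    rng = np.random.default_rng(seed)
    source = rng.standard_normal(num_snapshots) + 1j * rng.standard_normal(num_snapshots)
    shape = (len(mic_x), num_snapshots)
    noise = noise_std * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))
    return steering_vector(mic_x, angle_rad, freq_hz)[:, None] * source[None, :] + noise

mic_x = np.arange(16) * 0.04                      # 16 microphones, 4 cm apart (assumed)
scan_angles = np.deg2rad(np.linspace(-90, 90, 181))
data = simulate_source(mic_x, np.deg2rad(20.0), 2000.0)
power = delay_and_sum_map(data, mic_x, 2000.0, scan_angles)
estimated_deg = np.rad2deg(scan_angles[np.argmax(power)])  # direction of the strongest peak
```

With a 2D array, the same scan runs over both azimuth and elevation, and the resulting power grid can be overlaid on a camera image.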

Neural Aperture Expansion and Beamforming

We train a network to synthetically expand the aperture of the microphone array to produce higher-fidelity beamforming maps with less point spread function (PSF) corruption. The beamforming maps visualize sound from the vehicles (center, see arrows). As can be observed, a smaller aperture (left) results in blurrier beamforming outputs than a larger aperture (right).
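The link between aperture and blur can be checked numerically with classical array theory. The sketch below computes the delay-and-sum response (the PSF) of a uniform linear array for a broadside source and measures the half-power width of its main lobe for a small and a large aperture. It illustrates only why a larger aperture gives a sharper map; it does not model the learned aperture expansion. The microphone counts, spacing, frequency, and function names are assumptions.

```python
import numpy as np

def beam_pattern(num_mics, spacing_m, freq_hz, angles_rad, c=343.0):
    """Normalized delay-and-sum power response of a uniform linear array
    to a broadside source, i.e. the array's point spread function."""
    mic_x = np.arange(num_mics) * spacing_m
    phase = 2 * np.pi * freq_hz * np.outer(np.sin(angles_rad), mic_x) / c
    response = np.exp(1j * phase).sum(axis=1) / num_mics
    return np.abs(response) ** 2

def half_power_width_deg(num_mics, spacing_m, freq_hz):
    """Width of the main lobe, in degrees, at half of its peak power."""
    angles = np.deg2rad(np.linspace(-90, 90, 18001))
    pattern = beam_pattern(num_mics, spacing_m, freq_hz, angles)
    center = len(angles) // 2  # broadside, where the main lobe peaks
    right = center
    while right < len(angles) - 1 and pattern[right] >= 0.5:
        right += 1
    left = center
    while left > 0 and pattern[left] >= 0.5:
        left -= 1
    return np.rad2deg(angles[right] - angles[left])

# Same spacing and frequency; only the number of microphones (the aperture) changes.
small_aperture_width = half_power_width_deg(8, 0.04, 2000.0)
large_aperture_width = half_power_width_deg(32, 0.04, 2000.0)
```

Quadrupling the aperture narrows the main lobe by roughly a factor of four in this setup, consistent with the blurrier maps reported for the smaller aperture.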

Multimodal Object Detection

Traffic sound provides complementary signals that significantly improve detections in challenging scenarios where low light, glare, and motion blur reduce the accuracy of RGB detections. Furthermore, beamforming allows us to reliably detect multiple sound sources in the scene.

Related Publications

[1] Mario Bijelic, Tobias Gruber, Fahim Mannan, Florian Kraus, Werner Ritter, Klaus Dietmayer, Felix Heide. Seeing Through Fog Without Seeing Fog: Deep Multimodal Sensor Fusion in Unseen Adverse Weather. CVPR 2020.