Single-shot Monocular RGB-D Imaging using Uneven Double Refraction

CVPR 2020 (Oral)

Teaser: prototype camera, reconstructed color image, demo video, and reconstructed depth map.

We demonstrate that a sparse depth map and a color image can be obtained in real time using uneven double refraction.

Cameras that capture color and depth information have become an essential imaging modality for applications in robotics, autonomous driving, and virtual and augmented reality. Existing RGB-D cameras rely on multiple sensors or on active illumination with specialized sensors. In this work, we propose a method for monocular single-shot RGB-D imaging. Instead of learning depth from single-image depth cues, we revisit double-refraction imaging using a birefringent medium, measuring depth as the displacement between the differently refracted images superimposed in a single capture. However, existing double-refraction methods are orders of magnitude too slow for real-time applications, e.g., in robotics, and provide only inaccurate depth due to correspondence ambiguity in double refraction. We resolve this ambiguity optically by leveraging the orthogonality of the two linearly polarized rays in double refraction: adding a linear polarizer to the birefringent medium introduces uneven double refraction. This makes it possible to develop a method that reconstructs sparse depth and color simultaneously in real time. We validate the proposed method both synthetically and experimentally, and demonstrate 3D object detection and photographic applications.
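To make the measurement principle concrete, here is a toy 1D sketch. It is not the paper's reconstruction algorithm. It assumes a single constant disparity `d` across an image row, a zero-mean random texture, and hypothetical ray weights `w_o = 0.8` and `w_e = 0.2`; the helper names (`shift_right`, `superimpose`, `estimate_disparity`) are our own. The disparity is recovered with a plain autocorrelation peak search, a generic technique swapped in purely for illustration.

```python
import numpy as np


def shift_right(signal: np.ndarray, d: int) -> np.ndarray:
    """Shift a 1D signal right by d >= 0 samples, filling the gap with zeros."""
    out = np.zeros_like(signal)
    out[d:] = signal[: len(signal) - d]
    return out


def superimpose(scene: np.ndarray, d: int, w_o: float, w_e: float) -> np.ndarray:
    """Toy capture: a weighted ordinary copy plus a weighted copy shifted by d."""
    return w_o * scene + w_e * shift_right(scene, d)


def estimate_disparity(captured: np.ndarray, max_d: int) -> int:
    """Return the lag in [1, max_d] with the largest autocorrelation."""
    x = captured - captured.mean()
    scores = [np.dot(x[d:], x[:-d]) for d in range(1, max_d + 1)]
    return int(np.argmax(scores)) + 1


rng = np.random.default_rng(0)
scene = rng.standard_normal(2000)  # zero-mean random texture along one row
captured = superimpose(scene, d=7, w_o=0.8, w_e=0.2)
print(estimate_disparity(captured, max_d=20))
```

Because the autocorrelation is symmetric in the lag, this search finds how far apart the two copies are but not which copy is the shifted one; that is one way to see the correspondence ambiguity the polarizer is meant to break.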

Paper

Andreas Meuleman*, Seung-Hwan Baek*, Felix Heide, Min H. Kim (* indicates equal contribution)

Single-shot Monocular RGB-D Imaging using Uneven Double Refraction

CVPR 2020

Selected Results

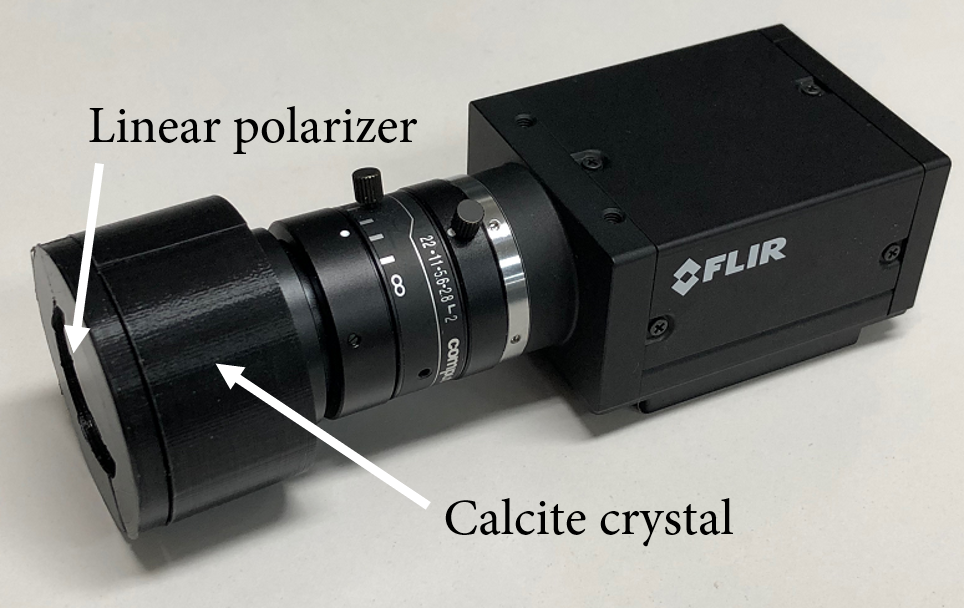

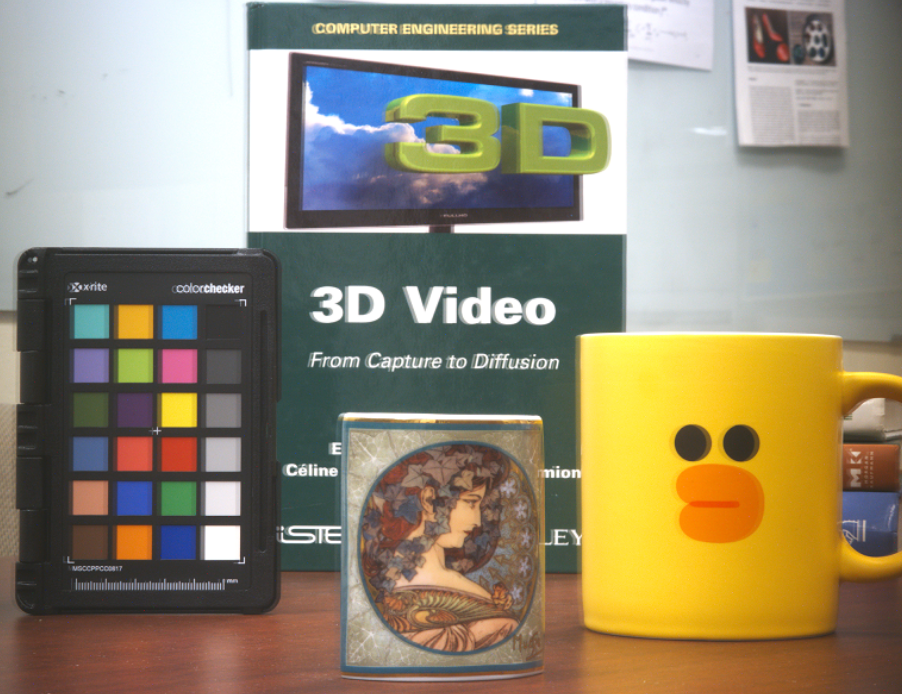

Panels: hardware prototype, captured color, reconstructed color.

Uneven double refraction.

Birefringent materials, e.g., calcite crystals, refract light into two rays with perpendicular polarizations, resulting in double refraction. In this paper, we exploit this perpendicular polarization to attenuate one of the two rays with a linear polarizer, making the radiances of the double-refracted images uneven. This optical disambiguation enables single-shot RGB-D imaging in real time.
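The effect of the polarizer can be sketched with Malus's law. Assuming an ideal linear polarizer whose transmission axis makes an angle theta with the polarization of the ordinary ray, the ordinary ray is scaled by cos^2(theta) and the orthogonally polarized extraordinary ray by sin^2(theta). These are relative weights only (absorption and overall intensity loss are ignored), the function name is hypothetical, and the angles below are illustrative; the angle used in the prototype is not given here.

```python
import numpy as np


def malus_weights(theta_deg: float) -> tuple[float, float]:
    """Fractions of the ordinary and extraordinary rays passed by an ideal polarizer.

    theta_deg is the angle between the polarizer axis and the ordinary ray's
    polarization. The extraordinary ray is polarized orthogonally, so by Malus's
    law the two rays are scaled by cos^2(theta) and sin^2(theta), respectively.
    """
    theta = np.deg2rad(theta_deg)
    return float(np.cos(theta) ** 2), float(np.sin(theta) ** 2)


for angle in (0, 30, 45, 60, 90):
    w_o, w_e = malus_weights(angle)
    print(f"theta = {angle:2d} deg: ordinary {w_o:.3f}, extraordinary {w_e:.3f}")
```

At 45 degrees the two images keep equal weights (even double refraction), at 0 or 90 degrees one image is removed entirely and with it the displacement cue, and angles in between yield the uneven weighting described above.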

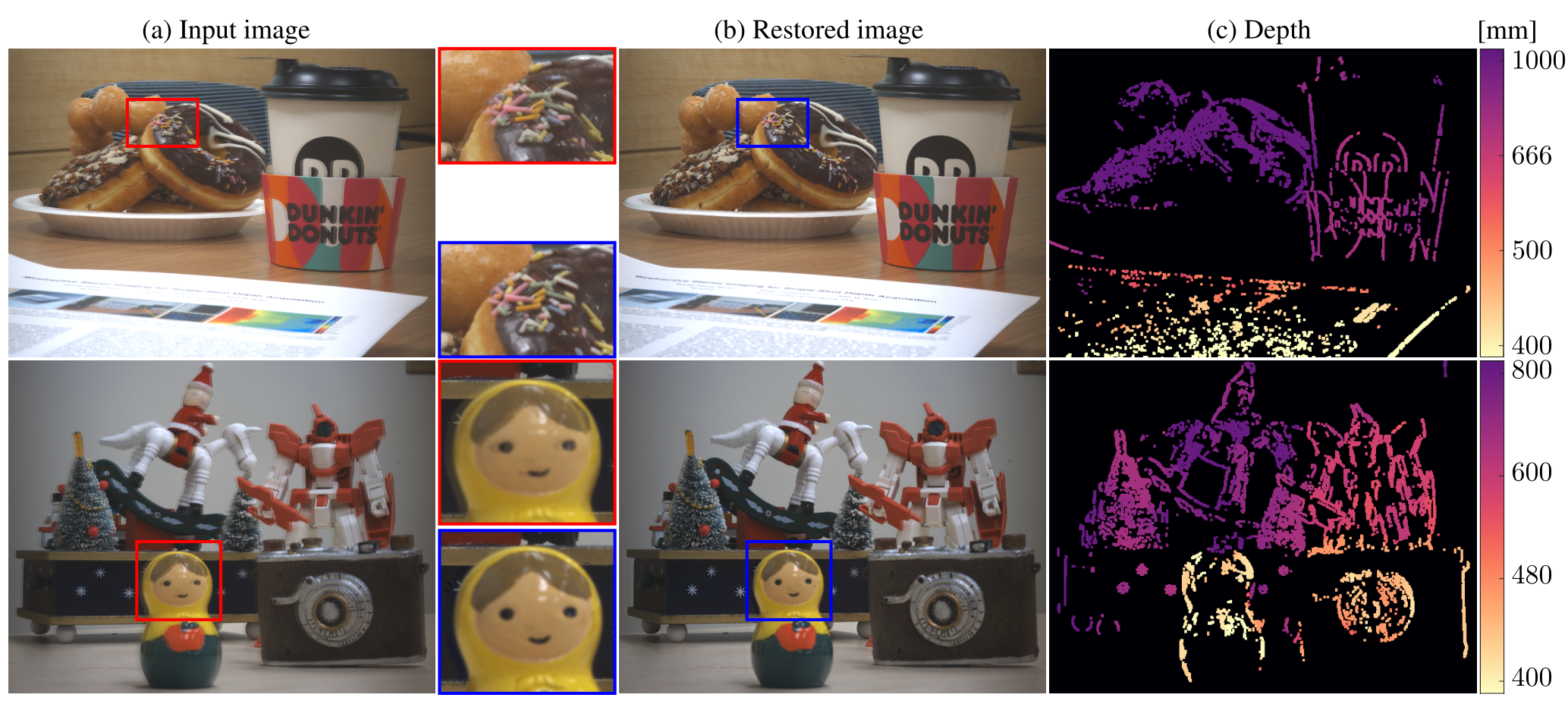

Qualitative results using the prototype camera.

(a) From a captured input image with uneven double refraction, we reconstruct (b) the color image without the double refraction (see the closeup) and (c) the sparse depth map in real time.

Panels: captured, estimated depth; captured, reconstruction.

Depth and color accuracy

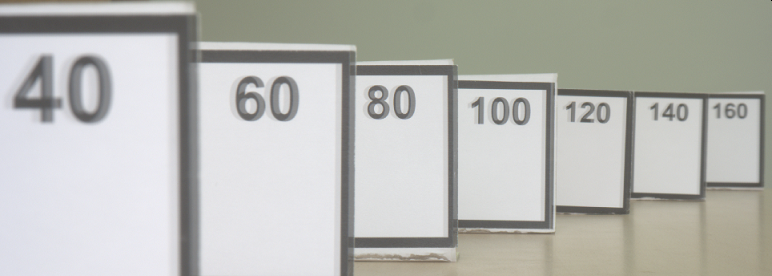

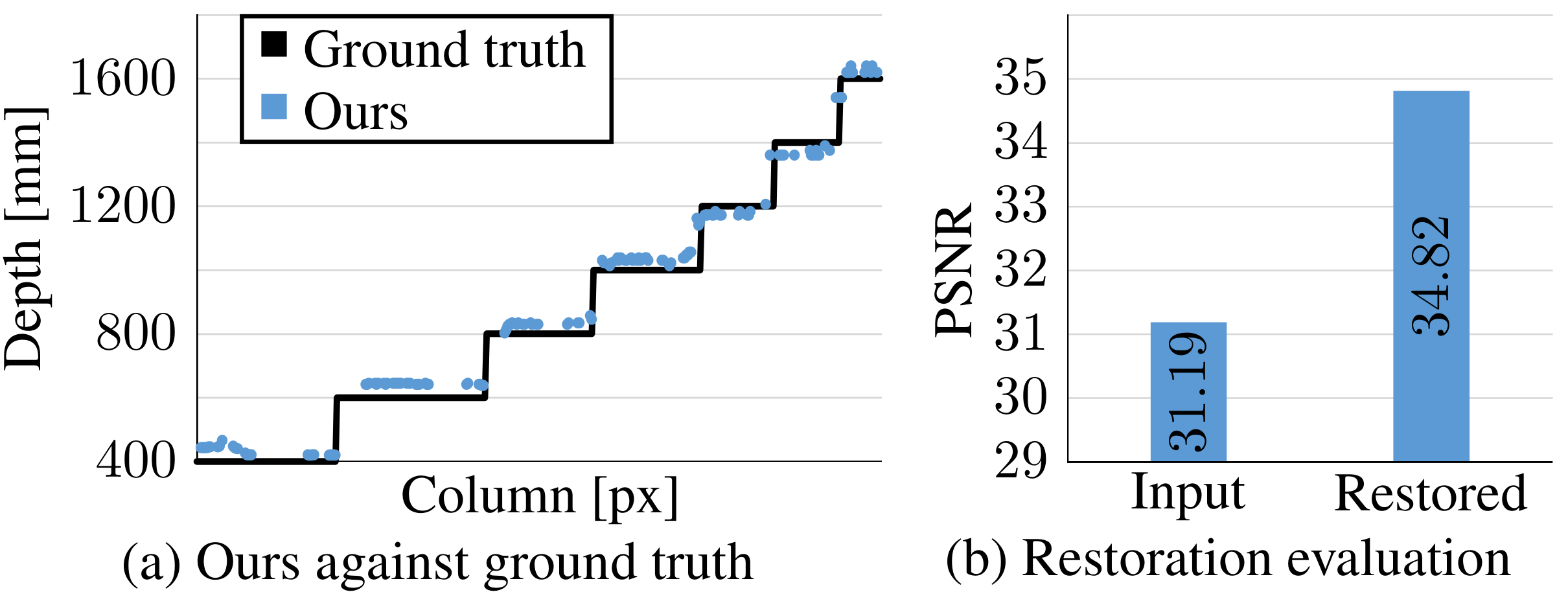

Quantitative evaluation of the prototype camera.

We captured a scene with panels at known depths. The graph on the right compares our depth estimates along the center row with the ground-truth distances. Refer to the paper for more results and numbers.

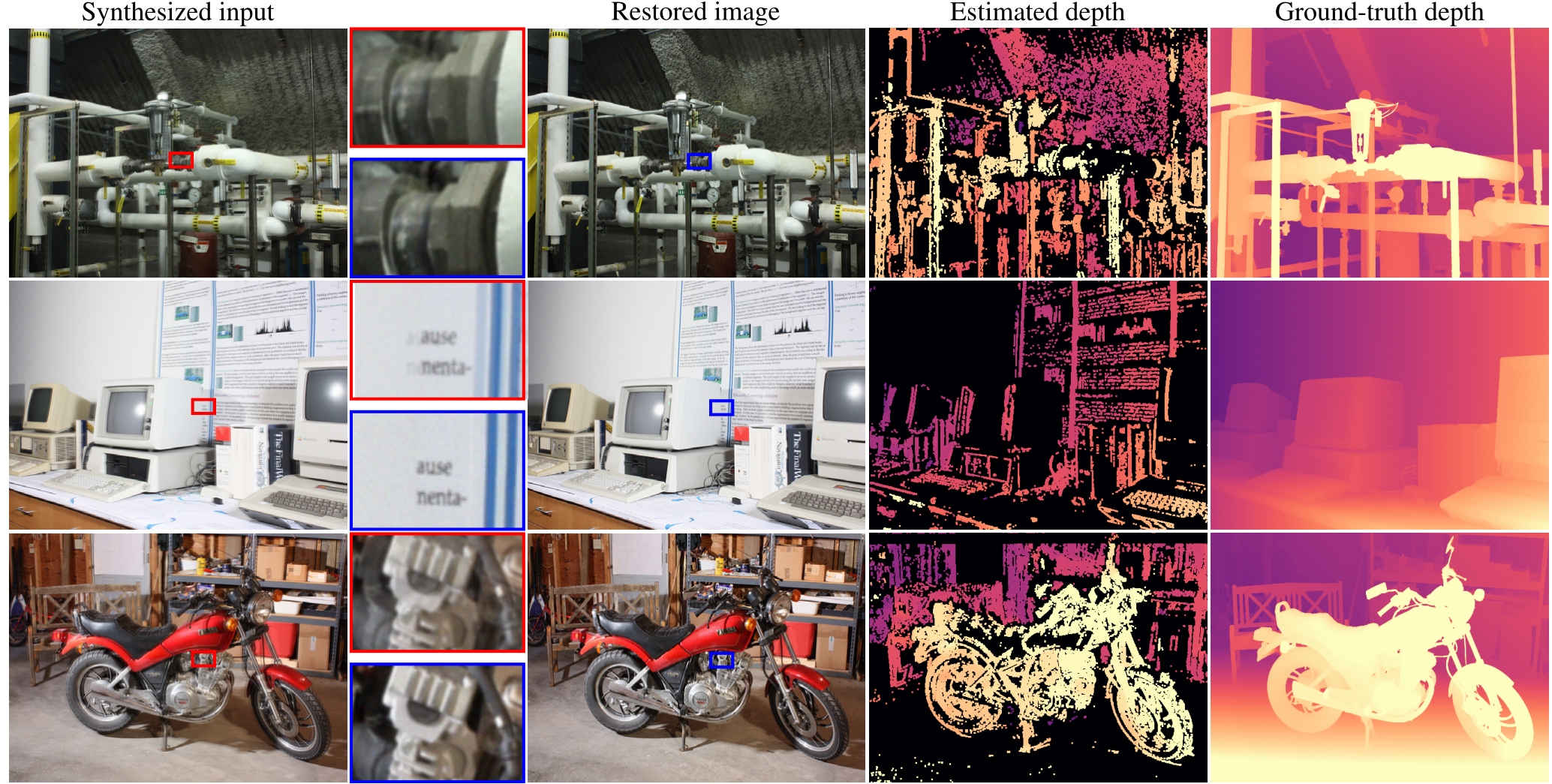

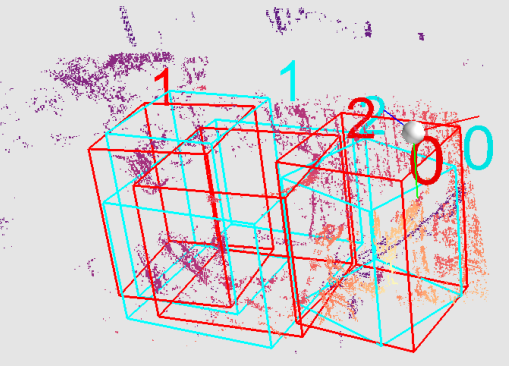

Qualitative results on synthetic data (including sensor noise simulation).

We tested our method in simulation, demonstrating high accuracy in both color restoration and depth estimation. Refer to the paper for quantitative numbers.

(a) Depth-based refocusing

(c) Depth-aware composition

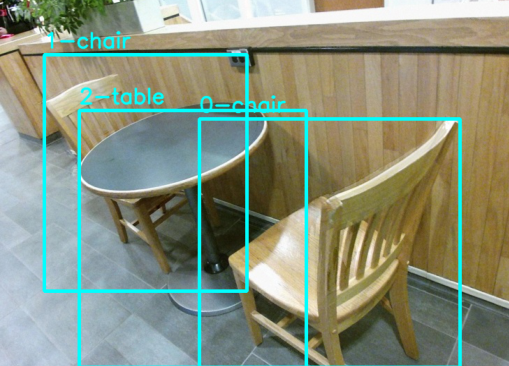

(b) RGB-D segmentation

(d) 3D object detection

Applications.

Our real-time RGB-D camera enables a variety of applications that use both a depth map and a color image, including depth-based refocusing, RGB-D segmentation, depth-aware composition, and 3D object detection.

Baek et al. (speed: 0.02 fps) vs. Ours (speed: 30 fps)

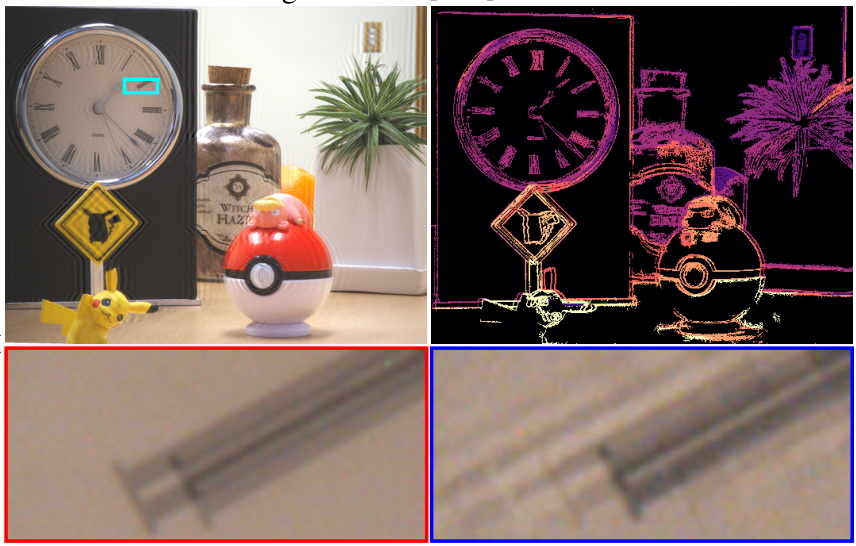

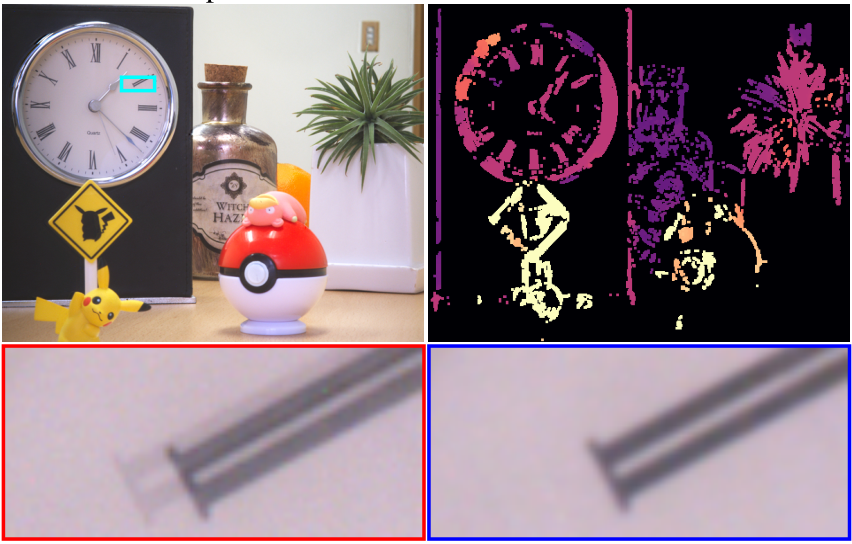

Comparison with Baek et al.

Comparison with the existing double-refraction method. Baek et al.'s method suffers from severe ringing artifacts in the color reconstruction due to the ambiguity in even double refraction. Moreover, the ambiguity imposes a heavy computational burden, resulting in running times far too slow for many RGB-D applications. Uneven double refraction resolves the ambiguity, enabling real-time RGB-D imaging with accurate depth and color reconstruction. See the blue closeups of the reconstructions.
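One simple way to see why even weights invite ringing is to invert the toy model `captured = w_o * J + w_e * shift(J, d)` by forward substitution. This is a hypothetical illustration, not Baek et al.'s or the paper's reconstruction method: it assumes the disparity `d` and the weights are known exactly and that only sensor noise corrupts the capture. Each recovered sample reuses the one `d` pixels earlier, so an error is multiplied by `-w_e / w_o` at every step; it decays when `w_e < w_o` and keeps echoing when the weights are equal. All function names and parameter values are our own.

```python
import numpy as np


def shift_right(signal: np.ndarray, d: int) -> np.ndarray:
    """Shift a 1D signal right by d >= 0 samples, filling the gap with zeros."""
    out = np.zeros_like(signal)
    out[d:] = signal[: len(signal) - d]
    return out


def invert_double_refraction(captured: np.ndarray, d: int, w_o: float, w_e: float) -> np.ndarray:
    """Hypothetical inverse of captured = w_o * J + w_e * shift_right(J, d).

    Forward substitution: J[n] = (captured[n] - w_e * J[n - d]) / w_o.
    Any error is multiplied by -w_e / w_o every d samples.
    """
    assert d >= 1, "the recursion needs a positive disparity"
    J = np.zeros_like(captured)
    for n in range(len(captured)):
        prev = J[n - d] if n >= d else 0.0
        J[n] = (captured[n] - w_e * prev) / w_o
    return J


def rms_error(w_o=0.8, w_e=0.2, d=7, noise=0.01, n=2000, seed=0) -> float:
    """Simulate a noisy toy capture, invert it, and return the RMS color error."""
    rng = np.random.default_rng(seed)
    scene = rng.standard_normal(n)
    captured = w_o * scene + w_e * shift_right(scene, d)
    captured = captured + noise * rng.standard_normal(n)
    recovered = invert_double_refraction(captured, d, w_o, w_e)
    return float(np.sqrt(np.mean((recovered - scene) ** 2)))


print("uneven 0.8 / 0.2:", rms_error(w_o=0.8, w_e=0.2))
print("even   0.5 / 0.5:", rms_error(w_o=0.5, w_e=0.5))
```

Without noise, both settings recover the row essentially exactly in this idealized model; the difference appears once noise is added, where the even case accumulates repeated echoes of the error every `d` pixels.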

Comparison with dual pixel.

Dual-pixel phone cameras have been successfully employed to obtain close-range depth. However, their disparity is bounded by the defocus blur and thus does not exceed a few pixels. The disparity on a dual-pixel sensor saturates more quickly than our double-refraction disparity.
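The saturation argument can be illustrated with the thin-lens circle of confusion. Assuming, as a first-order approximation, that dual-pixel disparity is proportional to the defocus blur diameter, the blur for a point at depth z with the lens focused at z_f is c = A * |z - z_f| / z * f / (z_f - f), with aperture diameter A = f / N. This approaches the finite limit A * f / (z_f - f) as z grows. The camera parameters below are hypothetical phone-like values, not those of any specific device, and the sketch does not model the double-refraction disparity.

```python
import numpy as np


def blur_diameter_px(z_mm, focus_mm, focal_mm, f_number, pixel_um):
    """Thin-lens circle-of-confusion diameter, in pixels, for a point at depth z."""
    aperture_mm = focal_mm / f_number
    coc_mm = aperture_mm * abs(z_mm - focus_mm) / z_mm * focal_mm / (focus_mm - focal_mm)
    return coc_mm * 1000.0 / pixel_um


def far_limit_px(focus_mm, focal_mm, f_number, pixel_um):
    """Blur diameter, in pixels, that the far-field blur approaches as z -> infinity."""
    aperture_mm = focal_mm / f_number
    return aperture_mm * focal_mm / (focus_mm - focal_mm) * 1000.0 / pixel_um


# Hypothetical parameters: focused at 1 m, 4.4 mm lens at f/1.8, 1.4 um pixels.
params = dict(focus_mm=1000.0, focal_mm=4.4, f_number=1.8, pixel_um=1.4)
depths = np.array([1000.0, 2000.0, 4000.0, 8000.0, 16000.0])
blur = np.array([blur_diameter_px(z, **params) for z in depths])
print(np.round(blur, 2), round(far_limit_px(**params), 2))
```

Whatever the parameters, the blur reaches half of its far limit at twice the focus distance but grows by only 1/16 of that limit between 8 and 16 times the focus distance, so far depths become hard to tell apart.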