Neural Auto Exposure for High-Dynamic Range Object Detection

- Emmanuel Onzon
- Fahim Mannan
- Felix Heide

CVPR 2021

The real world is a high dynamic range (HDR) world with a range up to 280 dB that cannot be captured directly by today's imaging sensors. Existing live vision pipelines tackle this fundamental challenge by relying on HDR sensors that try to recover HDR images from multiple captures with different exposures. While HDR sensors increase the dynamic range substantially, they are not without disadvantages, including severe artifacts for dynamic scenes, reduced fill-factor, lower resolution, and sensor cost. At the same time, traditional auto-exposure methods for low-dynamic range sensors have advanced as proprietary methods relying on image statistics, separated from a downstream vision algorithm. In this work, we revisit auto-exposure control as an alternative to HDR sensors. We propose a neural network for exposure selection that is trained jointly, end-to-end with an object detector and an image signal processing (ISP) pipeline. To this end, we introduce a novel HDR dataset for automotive object detection and an HDR training procedure. We validate that the proposed neural auto-exposure control, which is tailored to object detection, outperforms conventional auto-exposure methods by more than 5 points in mean average precision (mAP).

Selected Results

Learning Auto-Exposure for Automotive Object Detection.

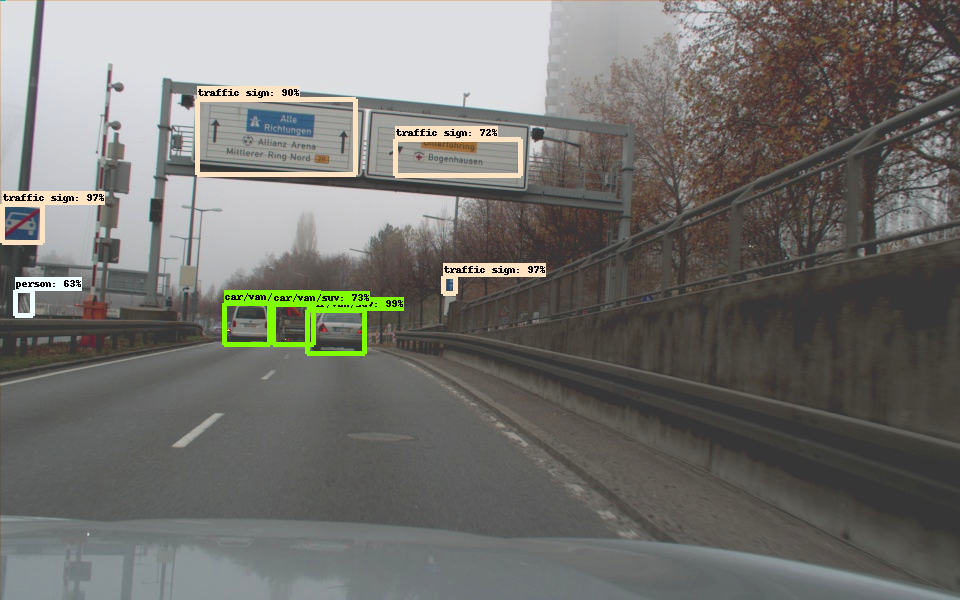

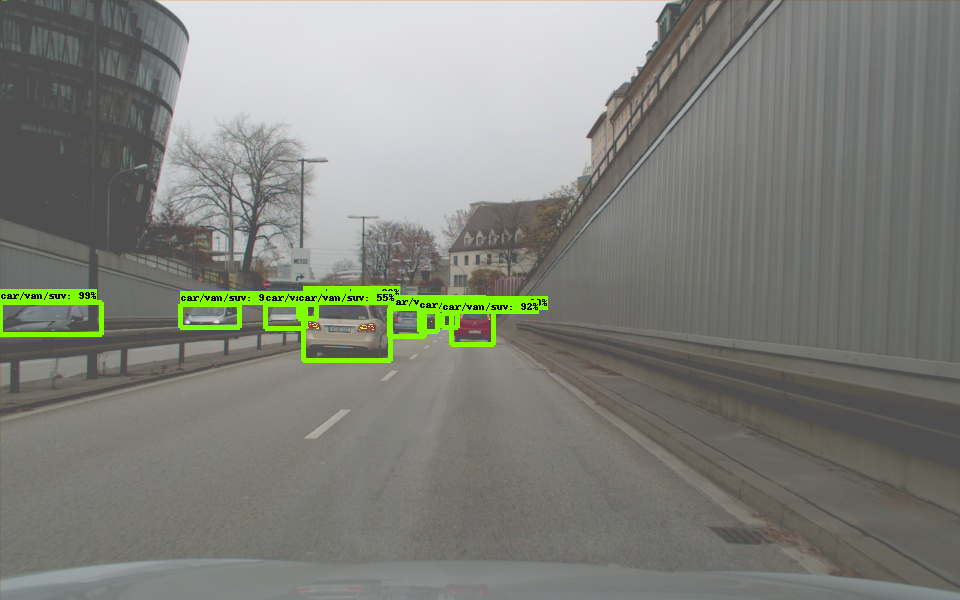

A popular approach to auto-exposure is to control the exposure based on the average intensity of the image. We compare against this conventional auto-exposure method below in real traffic situations.
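As a rough illustration of average-intensity auto-exposure, the sketch below performs one control step: it measures the mean of the latest frame and rescales the exposure toward a target mean. The function name, the target mean of 0.18, the damping `gain`, and the exposure limits are all assumptions made for this example; they are not taken from the conventional method used in the comparison.

```python
def mean_based_auto_exposure(pixels, exposure, target_mean=0.18, gain=0.5,
                             min_exposure=1e-5, max_exposure=1.0):
    """One step of a hypothetical average-intensity auto-exposure loop.

    pixels   -- normalized intensities in [0, 1] from the latest frame
    exposure -- exposure used to capture that frame (arbitrary units)
    Returns the exposure to use for the next frame.
    """
    pixels = list(pixels)
    mean = sum(pixels) / len(pixels)
    mean = max(mean, 1e-6)  # guard against division by zero on a black frame
    ratio = target_mean / mean
    # Damped multiplicative update; gain=1.0 would jump straight to the target
    # for an unsaturated frame, smaller gains react more slowly.
    new_exposure = exposure * ratio ** gain
    return min(max(new_exposure, min_exposure), max_exposure)


def capture(radiances, exposure):
    """Toy capture model (assumed): scale by exposure and saturate at 1."""
    return [min(r * exposure, 1.0) for r in radiances]


# Hypothetical scene with a very bright region next to dark ones.
scene = [0.5, 2.0, 40.0, 900.0]
exposure = 0.01
for _ in range(60):
    exposure = mean_based_auto_exposure(capture(scene, exposure), exposure)
```

Because the controller only looks at the mean, the single bright region in this toy scene dominates the result and the dark regions end up close to zero, which is the kind of failure a detection-aware exposure policy is meant to avoid.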

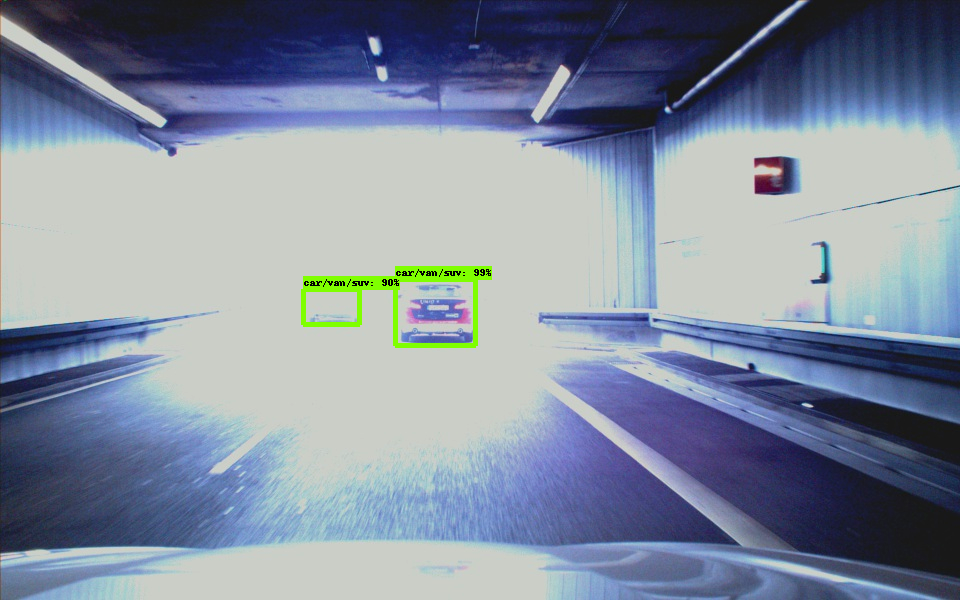

Conventional Auto-Exposure vs. Proposed

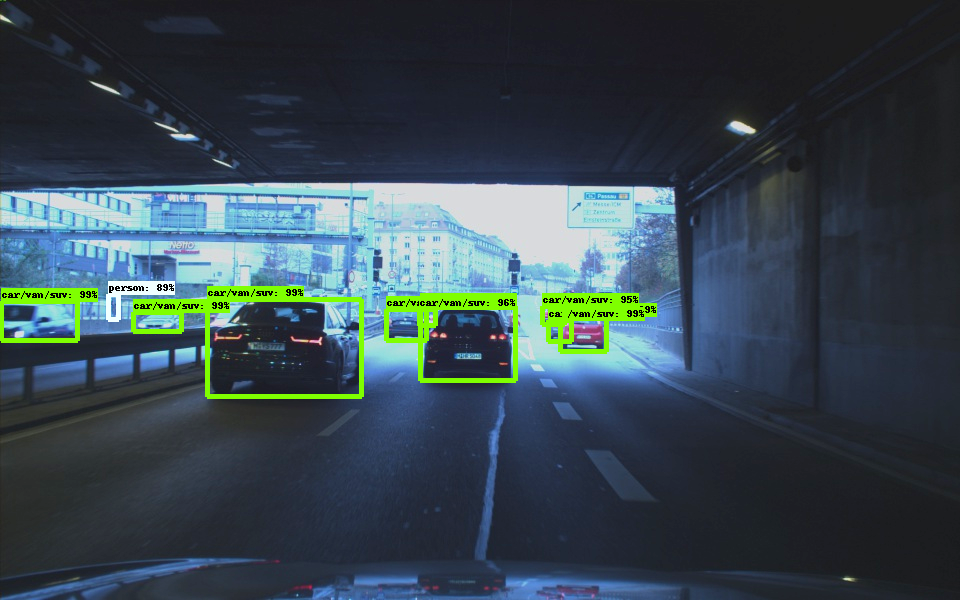

For scenes with very high dynamic range, e.g., near the end of a tunnel, the proposed method accurately balances the exposure of objects in the dark and the exposure of brightly lit objects.

Conventional Auto-Exposure vs. Proposed

The proposed method adapts itself robustly to changing conditions, e.g., when just exiting a tunnel.

The data was captured in Munich, Germany. The detector is Faster R-CNN built on a variant of ResNet with 18 layers.

Learning Auto-Exposure for Automotive Object Detection.

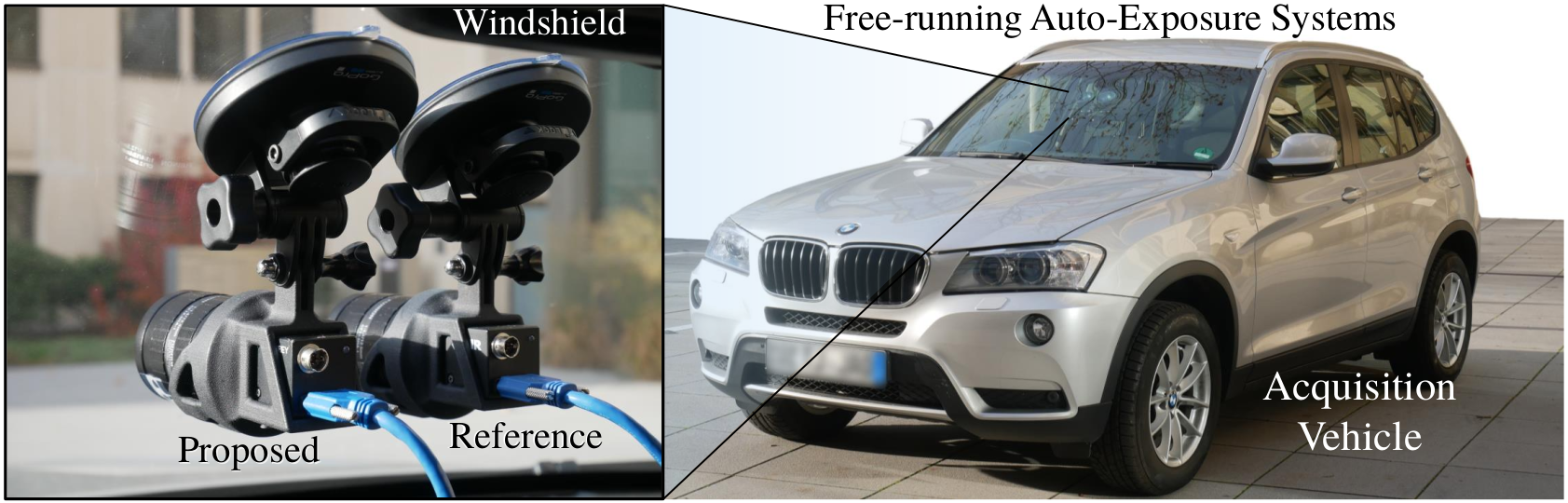

The two compared auto-exposure methods and their inference pipelines run in real time on two separate machines, each equipped with an Nvidia GTX 1070 GPU. Each machine takes its input image stream from a separate imager, and the imagers are mounted side by side on the windshield of a vehicle. The camera is a FLIR Blackfly (U3-23S6C-C) with a resolution of 1920×1200, a frame rate of 41 FPS, and 12-bit "LDR". The image sensor is the Sony IMX249. The "Reference" and "Proposed" captions in the figure above refer to the cameras that provide the input image stream for the conventional auto-exposure method mentioned above and for the auto-exposure method we propose in our work, respectively.

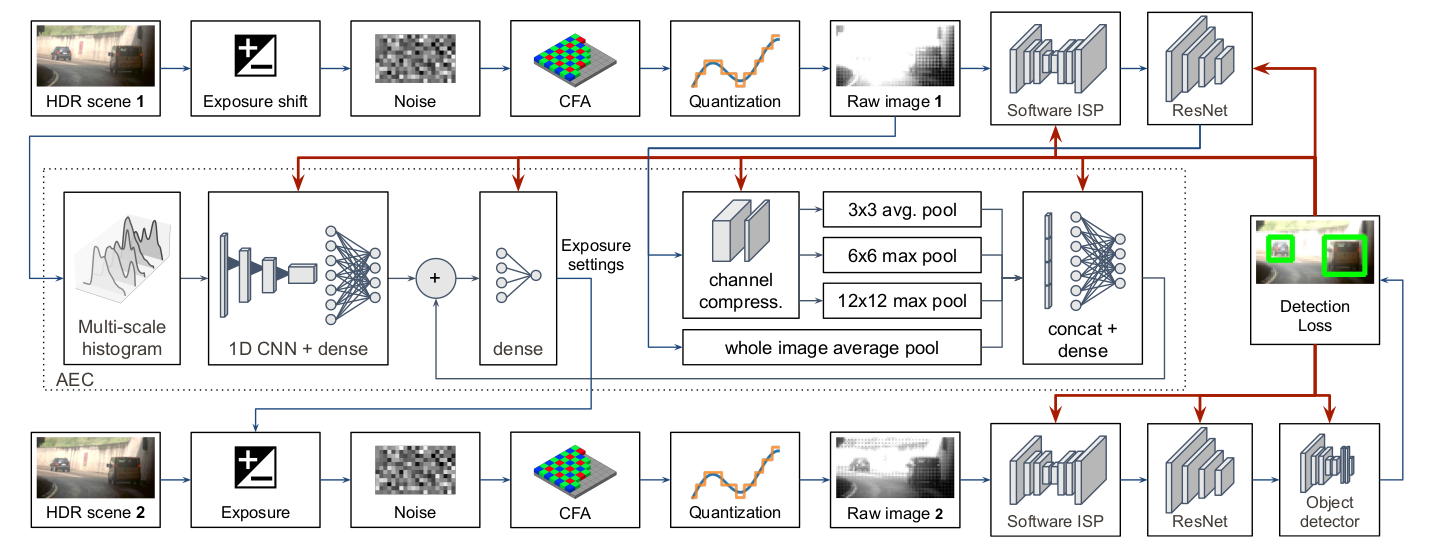

End-to-end Training Pipeline Using HDR Data.

We propose an end-to-end differentiable vision pipeline that includes low dynamic range capture simulation from an HDR image, image signal processing (ISP), and object detection. To train with stochastic gradient descent, we override the gradient of the quantization function with the constant function equal to 1, i.e., the gradient is computed as if quantization were replaced with the identity function.
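The gradient override can be sketched for a single pixel in plain Python with a hand-written backward pass. This is a minimal illustration, not the pipeline used in the paper: the capture model (scale by exposure, saturate at 1, quantize to 12 bits), the function names, the learning rate, and the squared-error loss are assumptions chosen for the example. Only the treatment of quantization as having derivative 1 comes from the description above.

```python
def simulate_ldr_capture(hdr_value, exposure, bits=12):
    """Simulate an LDR capture of one HDR pixel (assumed toy model).

    Returns (ldr_value, d_ldr_d_exposure), where the derivative treats
    quantization as the identity function.
    """
    levels = 2 ** bits - 1
    scaled = hdr_value * exposure             # exposure scaling
    clipped = min(max(scaled, 0.0), 1.0)      # sensor saturation
    quantized = round(clipped * levels) / levels

    # Backward pass via the chain rule.
    d_quant_d_clipped = 1.0                   # override: true derivative is 0 almost everywhere
    d_clipped_d_scaled = 1.0 if 0.0 < scaled < 1.0 else 0.0  # ordinary clip derivative
    d_scaled_d_exposure = hdr_value
    grad = d_quant_d_clipped * d_clipped_d_scaled * d_scaled_d_exposure
    return quantized, grad


def fit_exposure(hdr_value, target, exposure=0.001, lr=1e-4, steps=50):
    """Gradient descent on exposure for a squared-error loss (hypothetical)."""
    for _ in range(steps):
        ldr, d_ldr = simulate_ldr_capture(hdr_value, exposure)
        d_loss = 2.0 * (ldr - target) * d_ldr  # d/d_exposure of (ldr - target)^2
        exposure -= lr * d_loss
    return exposure


exposure = fit_exposure(hdr_value=50.0, target=0.5)
ldr, _ = simulate_ldr_capture(50.0, exposure)
```

Without the override, the derivative of the rounding step is zero almost everywhere, so no gradient would reach the exposure and the loop above would never move from its starting value.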