Mask-ToF: Learning Microlens Masks for Flying Pixel Correction in Time-of-Flight Imaging


Flying pixels (FPs) are pervasive artifacts that occur at object boundaries, where background and foreground light mix to produce erroneous measurements that can negatively impact downstream 3D vision tasks. Mask-ToF, with the help of a differentiable time-of-flight simulator, learns a microlens-level occlusion mask pattern that modulates the selection of foreground and background light on a per-pixel basis. When trained end-to-end with a depth refinement network, Mask-ToF effectively decodes these modulated measurements to produce high-fidelity depth reconstructions with significantly reduced flying pixel counts. We photolithographically manufacture the learned microlens mask and validate our findings experimentally using a custom-designed optical relay system. On real scenes, Mask-ToF achieves significantly fewer flying pixels than a “naive” circular aperture approach while maintaining a high signal-to-noise ratio (SNR).

Paper

Mask-ToF: Learning Microlens Masks for Flying Pixel Correction in Time-of-Flight Imaging

Ilya Chugunov, Seung-Hwan Baek, Qiang Fu, Wolfgang Heidrich, Felix Heide

CVPR 2021

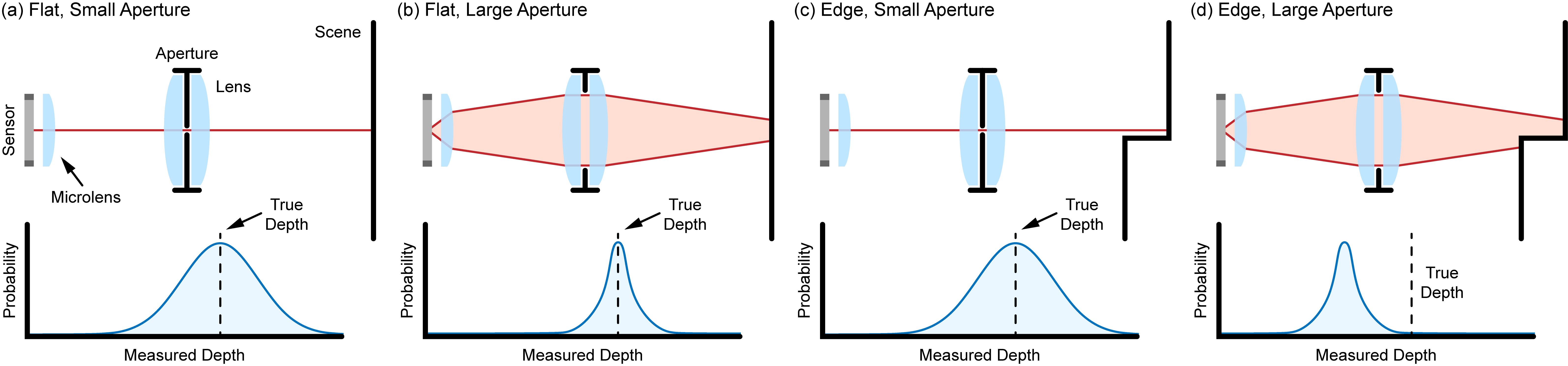

The Flying Pixel / SNR Trade-off

In general, increasing the aperture size of a ToF system increases light throughput, reducing the effects of system noise and photon shot noise on measured depth. If the scene being observed is locally flat, as is the case for (a) and (b), this is a net positive: we integrate light paths of practically identical length over the aperture, so the expected distribution of measurements clusters more tightly around the true depth. For locally discontinuous scenes this no longer holds. While a pinhole aperture (c) gives a wide distribution of measurements that is at least centered at the correct depth, a wide aperture (d) may give measurements centered at an entirely different depth. The light paths integrated over the wide aperture are now a mix of longer background paths and shorter foreground paths, and the resulting measurement is a point floating somewhere between the two: a flying pixel (FP). On the capture side, without additional context, measurements from scenario (d) look just as trustworthy as those from (b).

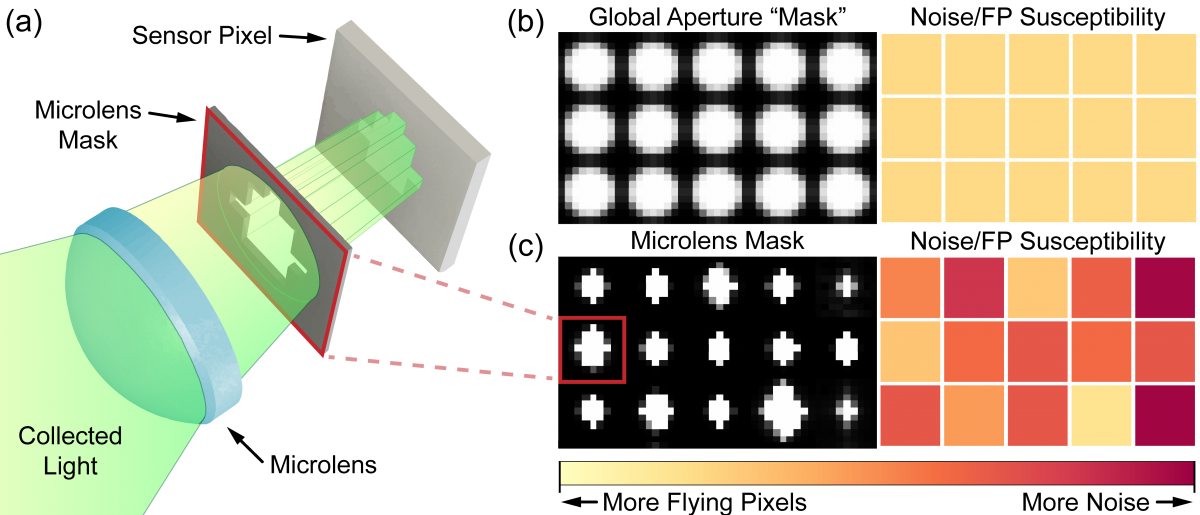
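
The aperture argument above can be reproduced numerically. The sketch below is a simplified model of our own, not the paper's simulator: it assumes an amplitude-modulated continuous-wave (AMCW) sensor with a hypothetical 30 MHz modulation frequency, represents each light path admitted by the aperture as a unit phasor whose phase encodes its depth, and models sensor noise as additive complex Gaussian noise of fixed strength. The helper names (`depth_to_phasor`, `phasor_to_depth`, `measure`) are illustrative.

```python
import numpy as np

C = 3e8       # speed of light [m/s]
F_MOD = 30e6  # assumed AMCW modulation frequency [Hz]; unambiguous range C / (2 * F_MOD) = 5 m


def depth_to_phasor(depth, amplitude=1.0):
    """Complex return of a light path at the given depth (phase = round-trip delay)."""
    phase = 4 * np.pi * F_MOD * np.asarray(depth) / C
    return amplitude * np.exp(1j * phase)


def phasor_to_depth(phasor):
    """Recover depth from the phase of an (integrated) phasor."""
    phase = np.angle(phasor) % (2 * np.pi)
    return C * phase / (4 * np.pi * F_MOD)


def measure(path_depths, noise_std, n_trials, rng):
    """Integrate every light path the aperture admits, add noise, and decode depth.

    Assumption: circular complex Gaussian noise of fixed std, a simplification
    of real shot and read noise.
    """
    signal = depth_to_phasor(path_depths).sum()
    noise = rng.normal(0.0, noise_std, n_trials) + 1j * rng.normal(0.0, noise_std, n_trials)
    return phasor_to_depth(signal + noise)


rng = np.random.default_rng(0)
FG, BG = 1.0, 2.0  # foreground / background depth at a discontinuity [m]

# (c) pinhole: a single sub-aperture that only sees the foreground
pinhole = measure([FG], noise_std=0.2, n_trials=10_000, rng=rng)
# (d) wide aperture: 16 sub-apertures, half of which see past the edge to the background
wide = measure([FG] * 8 + [BG] * 8, noise_std=0.2, n_trials=10_000, rng=rng)

print(f"pinhole: mean {pinhole.mean():.3f} m, std {pinhole.std():.3f} m")
print(f"wide:    mean {wide.mean():.3f} m, std {wide.std():.3f} m")
```

With these toy numbers the pinhole estimates scatter widely around the true 1 m foreground depth, while the wide-aperture estimates cluster tightly around 1.5 m, a depth where no surface exists. Because the two path phases differ by less than π, the integrated phasor always lands between them, which is why the flying pixel sits between foreground and background.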

Microlens Masking

A global aperture setup can only sample spatially homogeneous configurations of the SNR/FP trade-off (b): every pixel is as susceptible to FPs and noise as its neighbors. Mask-ToF, with its learned mask pattern that selectively blocks light paths on a per-pixel basis (a), allows for spatially varying noise/FP susceptibility (c). Measurements from neighboring pixels with different effective apertures can then provide the missing context needed to identify and correct flying pixels.

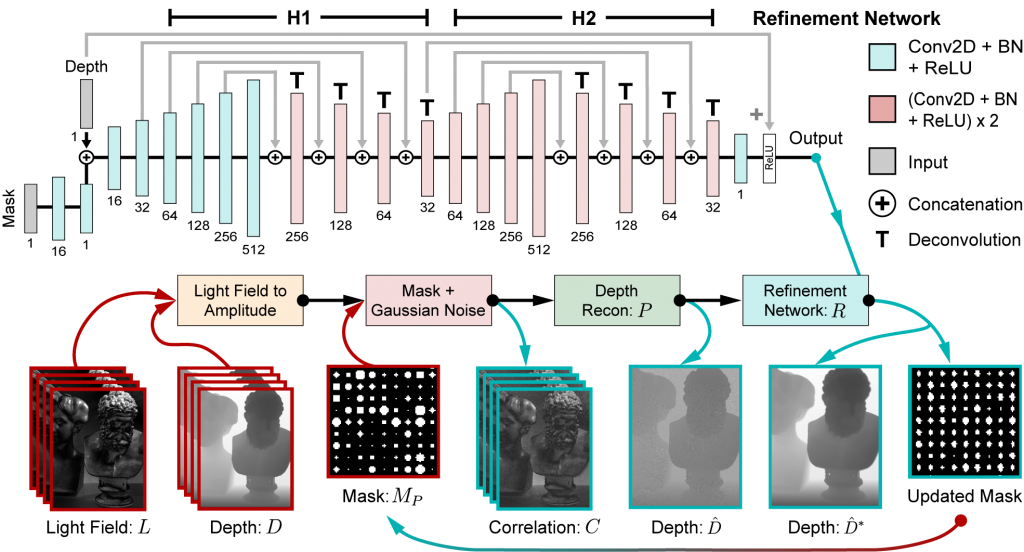
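
To make "spatially varying effective aperture" concrete, the sketch below compares a global circular aperture shared by every pixel against a per-pixel mask over a U x V grid of sub-aperture samples. The per-pixel mask is hypothetical random noise standing in for a learned pattern, and the two summary statistics (throughput as a proxy for SNR, RMS radius of the open sub-apertures as a proxy for FP susceptibility) are our own illustrative choices, not quantities defined in the paper.

```python
import numpy as np


def sub_aperture_radius2(u, v):
    """Squared normalized radius of each sample on a u x v sub-aperture grid in [-1, 1]^2."""
    uu, vv = np.meshgrid(np.linspace(-1, 1, u), np.linspace(-1, 1, v), indexing="ij")
    return uu**2 + vv**2


def global_aperture_mask(h, w, aperture):
    """Every pixel shares the same (u, v) aperture: a spatially uniform trade-off."""
    return np.broadcast_to(aperture, (h, w) + aperture.shape).astype(float)


def per_pixel_mask(h, w, u, v, keep_prob, rng):
    """Hypothetical per-pixel binary mask: each pixel keeps its own random subset of
    sub-apertures. A stand-in for a learned pattern, which would be optimized instead."""
    return (rng.random((h, w, u, v)) < keep_prob).astype(float)


def throughput(mask):
    """Fraction of sub-aperture light reaching each pixel (proxy for SNR)."""
    return mask.mean(axis=(2, 3))


def effective_radius(mask):
    """RMS radius of the open sub-apertures at each pixel (proxy for FP susceptibility)."""
    r2 = sub_aperture_radius2(*mask.shape[2:])
    total = mask.sum(axis=(2, 3))
    weighted = (mask * r2).sum(axis=(2, 3))
    return np.sqrt(np.divide(weighted, total, out=np.zeros_like(total), where=total > 0))


rng = np.random.default_rng(0)
H, W, U, V = 4, 4, 7, 7
circle = (sub_aperture_radius2(U, V) <= 1.0).astype(float)

uniform = global_aperture_mask(H, W, circle)
varying = per_pixel_mask(H, W, U, V, keep_prob=0.5, rng=rng)

for name, mask in [("global", uniform), ("per-pixel", varying)]:
    r = effective_radius(mask)
    print(f"{name}: throughput range {np.ptp(throughput(mask)):.3f}, radius range {np.ptp(r):.3f}")
```

The global mask gives every pixel an identical throughput and radius, so neighbors carry no extra information; the per-pixel mask gives neighboring pixels different apertures, which is the kind of context a downstream decoder could exploit.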

Learning a Mask

To learn a microlens mask, we need access to ToF data with sub-aperture views. As no available datasets contain these kinds of measurements, we use a light field dataset with ground truth depth maps to simulate ToF amplitude measurements. We weight the sub-aperture views with a mask array, add simulated noise, and combine them to produce a raw depth estimate. These raw estimates are fed into a network that decodes the spatially varying pixel measurements to produce refined depth estimates. As this pipeline is fully differentiable, we can propagate the reconstruction loss through the network and the image formation stages all the way to the mask variable, jointly updating the mask structure and learning to decode the measurements it produces.

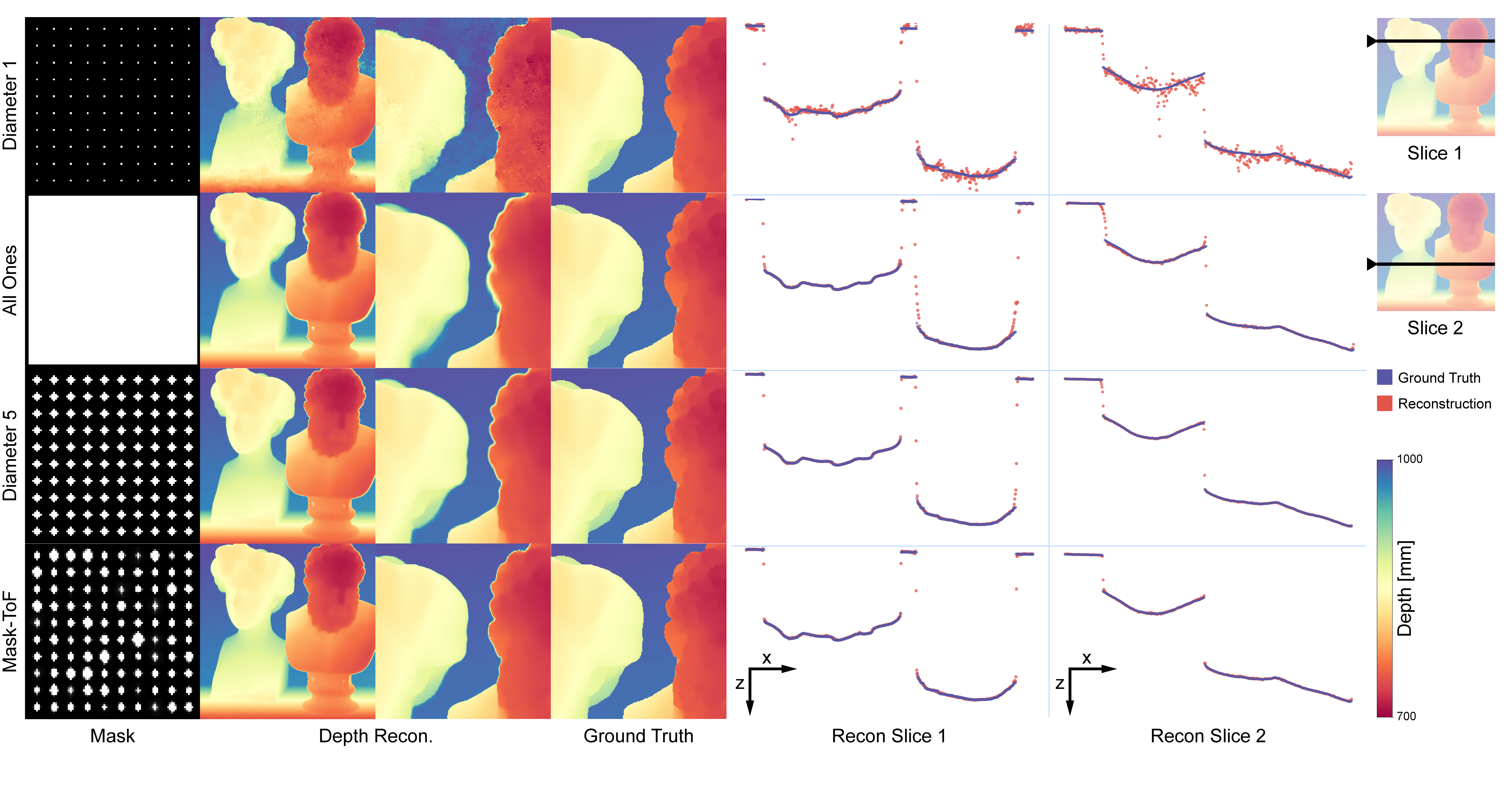
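
The image formation stage described above (weight sub-aperture views by the mask, add noise, combine into raw depth) can be sketched as a forward model. This is a minimal sketch under our own assumptions, not the paper's simulator: it uses the standard four-bucket AMCW phase estimate at a hypothetical 30 MHz modulation frequency, assumes per-view depth and amplitude maps of shape (U, V, H, W) derived from the light field, and uses additive Gaussian noise. `correlation_images` and `simulate_raw_depth` are hypothetical names.

```python
import numpy as np

C = 3e8       # speed of light [m/s]
F_MOD = 30e6  # assumed modulation frequency [Hz]


def correlation_images(depth, amplitude, offset=0.5):
    """Four-bucket AMCW samples at 0, 90, 180, 270 degrees; shape (4, *depth.shape)."""
    phase = 4 * np.pi * F_MOD * depth / C
    shifts = (np.arange(4) * np.pi / 2).reshape((4,) + (1,) * depth.ndim)
    return amplitude * np.cos(phase[None] - shifts) + offset


def simulate_raw_depth(view_depth, view_amp, mask, noise_std=0.0, rng=None):
    """Mask-weighted sub-aperture integration followed by noisy phase decoding.

    view_depth, view_amp: (U, V, H, W) per-sub-aperture depth / amplitude (assumed inputs).
    mask: (H, W, U, V) per-pixel sub-aperture weights (the quantity being learned).
    """
    rng = np.random.default_rng() if rng is None else rng
    corr = correlation_images(view_depth, view_amp)          # (4, U, V, H, W)
    weights = np.transpose(mask, (2, 3, 0, 1))                # (U, V, H, W)
    integrated = (corr * weights[None]).sum(axis=(1, 2))     # (4, H, W)
    integrated = integrated + rng.normal(0.0, noise_std, integrated.shape)
    # Offsets cancel in the bucket differences; arctan2 recovers the mixed phase.
    phase = np.arctan2(integrated[1] - integrated[3], integrated[0] - integrated[2]) % (2 * np.pi)
    return C * phase / (4 * np.pi * F_MOD)


# Toy scene: views with u = 0 see a 1 m foreground, views with u = 1 see a 2 m background.
U = V = 2
H = W = 3
view_depth = np.empty((U, V, H, W))
view_depth[0] = 1.0
view_depth[1] = 2.0
view_amp = np.ones_like(view_depth)

all_open = np.ones((H, W, U, V))
fg_only = np.zeros((H, W, U, V))
fg_only[:, :, 0, :] = 1.0

mixed = simulate_raw_depth(view_depth, view_amp, all_open)  # ~1.5 m everywhere: flying pixels
clean = simulate_raw_depth(view_depth, view_amp, fg_only)   # ~1.0 m: only foreground views kept
```

Every step here (cosine, weighted sum, `arctan2`) is differentiable almost everywhere, so porting the same forward pass to an autodiff framework such as PyTorch or JAX would let a reconstruction loss propagate back to `mask`. This NumPy version only shows the forward direction and omits the refinement network.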

Simulated Data Results

Our learned mask pattern significantly outperforms hand-crafted mask patterns on simulated test data, both quantitatively and qualitatively. It suppresses flying pixels more effectively and achieves higher SNR than a spatially uniform mask pattern with equivalent light throughput. The slice views make the SNR/FP trade-off easy to see: the Diameter 1 pinhole mask shows many errant measurements but few flying pixels, while the All Ones mask has very little noise in its output but copious flying pixels surrounding the depth discontinuities in the scene.

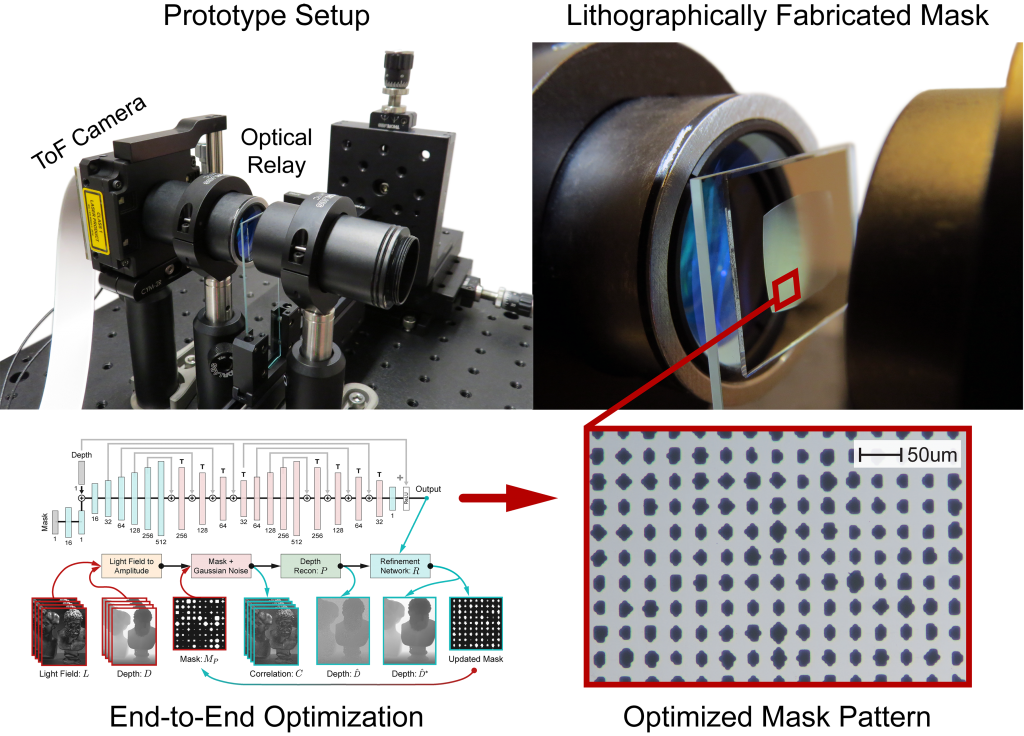

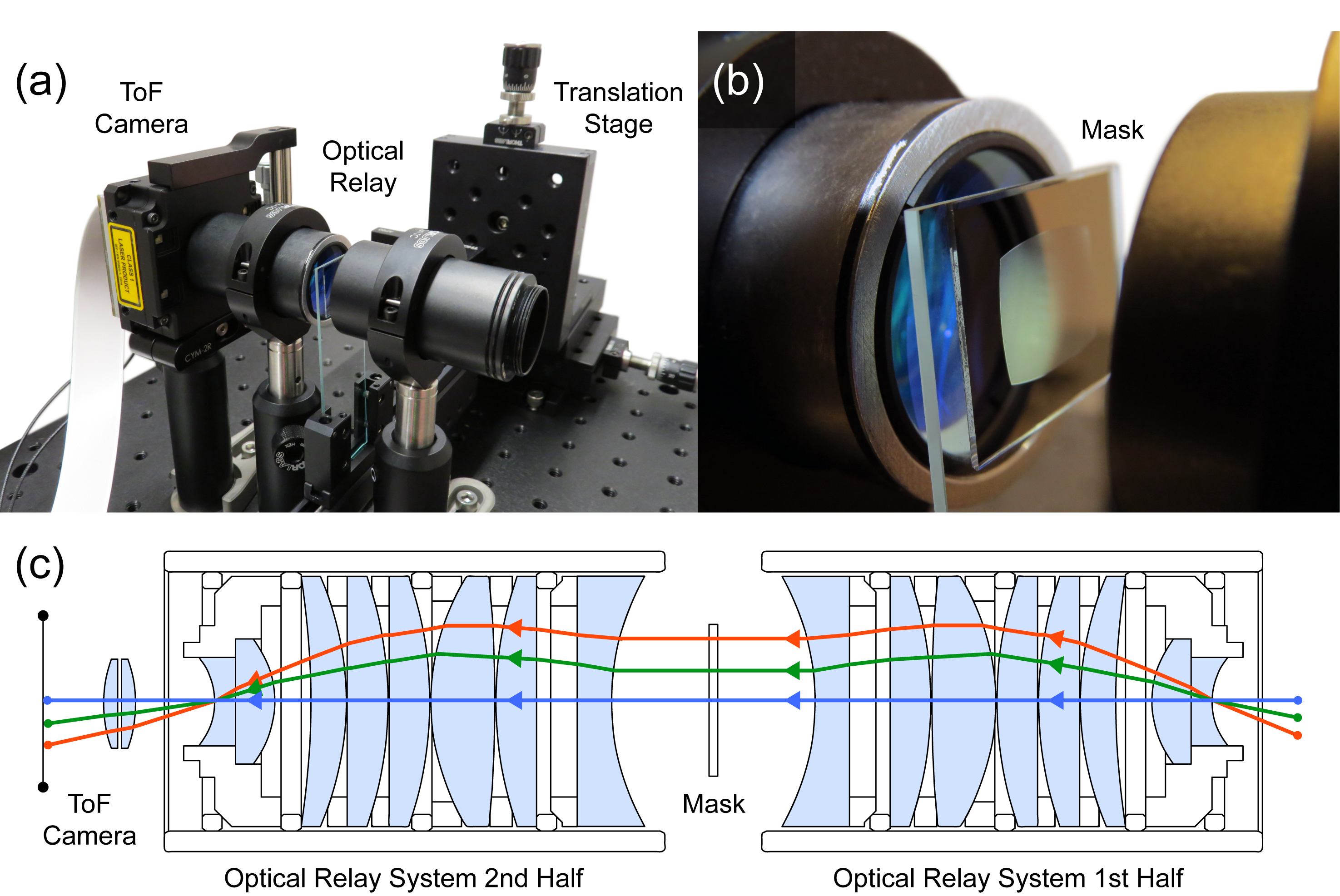
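
The two failure modes in the slice views, scattered noise versus in-between flying pixels, can be separated with a simple metric. The definition below is hypothetical and ours, not the paper's evaluation protocol: a pixel counts as flying if it lies near a ground-truth depth discontinuity and its estimate is more than `tau` away from both the local foreground and background depth, while noise is the RMSE on pixels away from discontinuities. The inputs are toy arrays, not the paper's data.

```python
import numpy as np


def local_min_max(depth, radius=1):
    """Min and max ground-truth depth in a (2r+1) x (2r+1) window around each pixel."""
    h, w = depth.shape
    padded = np.pad(depth, radius, mode="edge")
    size = 2 * radius + 1
    windows = np.stack([padded[i:i + h, j:j + w] for i in range(size) for j in range(size)])
    return windows.min(axis=0), windows.max(axis=0)


def flying_pixels_and_noise(estimate, gt, tau=0.1, edge_jump=0.2):
    """Hypothetical metric: (flying pixel count, RMSE away from discontinuities)."""
    lo, hi = local_min_max(gt)
    near_edge = (hi - lo) > edge_jump
    in_between = (estimate > lo + tau) & (estimate < hi - tau)
    n_flying = int(np.count_nonzero(near_edge & in_between))
    rmse_flat = float(np.sqrt(np.mean((estimate - gt)[~near_edge] ** 2)))
    return n_flying, rmse_flat


# Toy step edge: 1 m foreground on the left, 2 m background on the right.
gt = np.ones((6, 6))
gt[:, 3:] = 2.0

rng = np.random.default_rng(0)
pinhole_like = gt + rng.normal(0.0, 0.03, gt.shape)  # noisy, but centered on true depths
all_ones_like = gt.copy()
all_ones_like[:, 2:4] = 1.5                          # clean, but mixed at the edge

fp_pin, rmse_pin = flying_pixels_and_noise(pinhole_like, gt)
fp_all, rmse_all = flying_pixels_and_noise(all_ones_like, gt)
print(fp_pin, rmse_pin)
print(fp_all, rmse_all)  # 12 0.0
```

Reporting both numbers side by side mirrors the trade-off in the slice views: a pinhole-like output scores badly on noise, an all-ones-like output scores badly on flying pixels, and a good mask needs to do well on both.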

Experimental Setup

Directly accessing the CMOS sensor surface proves infeasible without risking irreparable damage to the camera system. To circumvent this, we design a custom optical relay system (c) that virtually places a lithographically manufactured mask (b) onto the camera sensor. Using a translation stage (a), we align the mask pattern and record real time-of-flight measurements with a commodity camera.

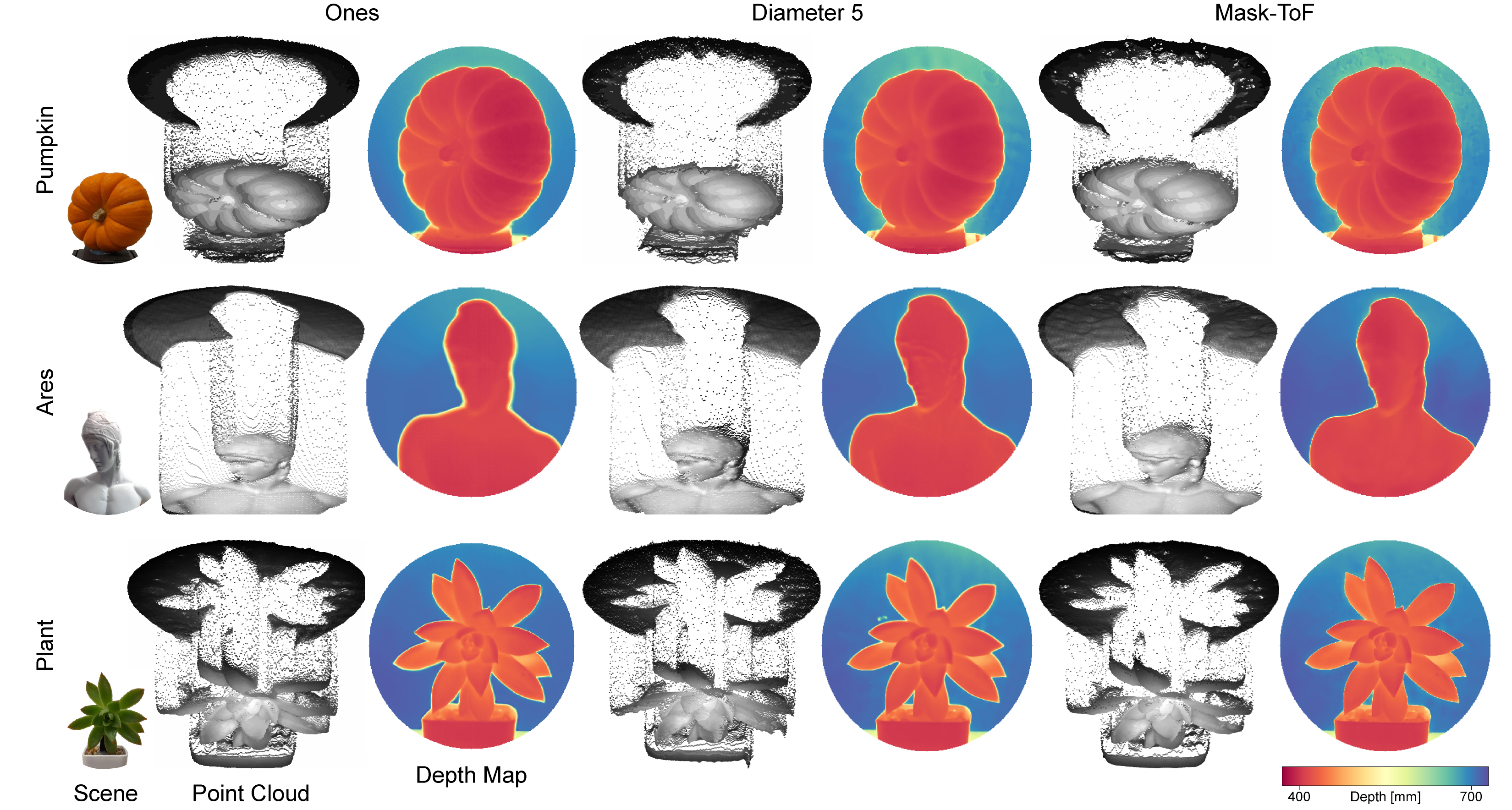

Experimental Results

We feed measured depth data into the refinement network, trained on simulated measurements, and observe promising reconstructions without any fine-tuning or retraining. On a variety of real scenes, Mask-ToF significantly reduces flying pixel artifacts without adversely affecting object surface reconstruction, once again outperforming the spatially homogeneous mask with equivalent light throughput. We even see a reduction in intra-object flying pixels, such as those in the pot of the Plant example, which would otherwise be hard to detect visually.