LiDAR Snowfall Simulation for Robust 3D Object Detection

CVPR 2022 (Oral)

3D object detection is a cornerstone for robotic tasks, including autonomous driving, in which the system needs to localize and classify surrounding traffic agents, even in the presence of adverse weather. In this work, we address the problem of LiDAR-based 3D object detection under snowfall. Due to the difficulty of collecting and annotating training data in this setting, we propose a physically based method to simulate the effect of snowfall on real clear-weather LiDAR point clouds. Our method samples snow particles in 2D space for each LiDAR line and uses the induced geometry to modify the measurement for each LiDAR beam accordingly. Moreover, as snowfall often causes wetness on the ground, we also simulate ground wetness on LiDAR point clouds. We use our simulation to generate partially synthetic snowy LiDAR data and leverage these data for training 3D object detection models that are robust to snowfall. We conduct an extensive evaluation using several state-of-the-art 3D object detection methods and show that our simulation consistently yields significant performance gains on the real snowy STF dataset compared to clear-weather baselines and competing simulation approaches, while not sacrificing performance in clear weather.
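The 2D geometry step described above, where snow particles are sampled per LiDAR line and used to modify each beam's measurement, can be illustrated with a minimal sketch. It makes three simplifying assumptions. Each LiDAR line is treated as a 2D plane, ignoring its elevation angle. Snowflakes are circles placed uniformly at random in a disk around the sensor, with exponentially distributed diameters. Each beam is an infinitely thin ray, whereas a real beam has a finite, diverging width. `Flake2D`, `sample_flakes`, and `first_hit` are hypothetical names and are not taken from any released code.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Flake2D:
    """A snowflake modeled as a circle in the 2D plane of one LiDAR line (hypothetical)."""
    x: float       # center x (m), sensor at the origin
    y: float       # center y (m)
    radius: float  # m


def sample_flakes(n: int, max_range: float, mean_diameter: float,
                  rng: random.Random) -> List[Flake2D]:
    """Place n flakes uniformly in a disk of radius max_range around the sensor."""
    flakes = []
    for _ in range(n):
        r = max_range * math.sqrt(rng.random())          # sqrt gives uniform density over the disk area
        phi = rng.uniform(0.0, 2.0 * math.pi)
        diameter = rng.expovariate(1.0 / mean_diameter)  # exponential size distribution (assumption)
        flakes.append(Flake2D(r * math.cos(phi), r * math.sin(phi), diameter / 2.0))
    return flakes


def first_hit(azimuth: float, flakes: List[Flake2D]) -> Optional[float]:
    """Range (m) at which a thin ray at the given azimuth first enters a flake, or None."""
    dx, dy = math.cos(azimuth), math.sin(azimuth)
    nearest = None
    for f in flakes:
        t = f.x * dx + f.y * dy                  # projection of the flake center onto the ray
        if t <= 0.0:
            continue                             # flake center lies behind the sensor
        perp_sq = f.x ** 2 + f.y ** 2 - t * t    # squared distance from the center to the ray
        if perp_sq > f.radius ** 2:
            continue                             # ray misses this flake
        entry = t - math.sqrt(f.radius ** 2 - perp_sq)
        if entry > 0.0 and (nearest is None or entry < nearest):
            nearest = entry
    return nearest


if __name__ == "__main__":
    rng = random.Random(0)
    flakes = sample_flakes(2000, max_range=10.0, mean_diameter=0.005, rng=rng)
    hits = [first_hit(math.radians(a), flakes) for a in range(360)]
    print(sum(h is not None for h in hits), "of 360 beams hit a flake")
```

In this thin-ray simplification, a hit is all-or-nothing. A beam of finite width would instead be only partially occluded, which is what allows a target behind a snowflake to remain visible with reduced intensity.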

Paper

LiDAR Snowfall Simulation for Robust 3D Object Detection

Martin Hahner, Christos Sakaridis, Mario Bijelic, Felix Heide, Fisher Yu, Dengxin Dai, Luc Van Gool

Video Overview

We aim to extend the operational design domain of autonomous vehicles to adverse snowfall conditions. Collecting and annotating real snowfall measurements is time-consuming and difficult. Instead, we introduce a snowfall simulation method that converts a clear-weather point cloud into the point cloud that would have been captured in the same scene during snowfall. Using this simulation as data augmentation when training deep neural networks for 3D object detection improves detection by up to 2.1% AP in heavy snowfall.

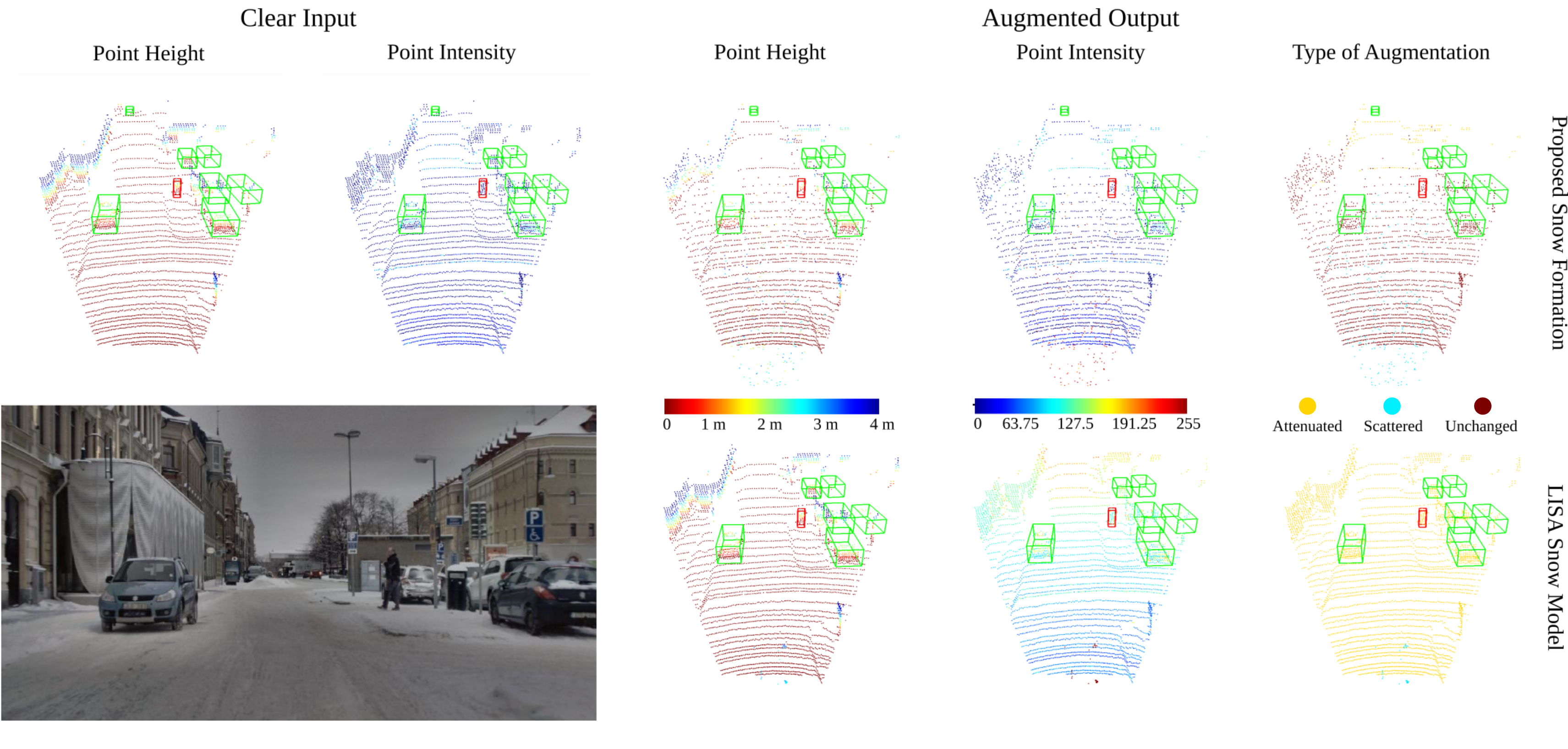

Snowfall Augmentation

We model snowfall in a physically based manner and use this model to augment clear-weather point clouds so that they resemble captures in snowfall. The simulation shown here corresponds to a snowfall rate of r_s = 2.5 mm/h. The left block shows the clear, undisturbed input. The right block shows our snowfall simulation (top) and, for reference, the snowfall simulation of LISA (bottom). Note that we attenuate only the points that are affected by individual snowflakes, instead of attenuating all points based on their distance; this difference is visible in the middle of the augmentation block. We also model specular reflections on the ground caused by melting snow on salted roads. Both components are briefly described below.
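A snowfall rate such as r_s = 2.5 mm/h has to be translated into how many snowflakes to sample and how large they are. The sketch below assumes the exponential Gunn-Marshall size distribution N(D) = N_0 exp(-Λ D), with N_0 = 3.8·10^3 r_s^(-0.87) m^-3 mm^-1 and Λ = 2.55 r_s^(-0.48) mm^-1, where D is the melted-equivalent diameter in mm. This parametrization is an assumption here and may differ from the exact distribution used in the paper. The function names are hypothetical.

```python
import random
from typing import List, Tuple


def gunn_marshall_params(rate_mm_per_h: float) -> Tuple[float, float]:
    """Return (N0 [m^-3 mm^-1], Lambda [mm^-1]) for a water-equivalent snowfall rate in mm/h.

    Assumes the Gunn-Marshall exponential size distribution N(D) = N0 * exp(-Lambda * D).
    """
    if rate_mm_per_h <= 0:
        raise ValueError("snowfall rate must be positive")
    n0 = 3.8e3 * rate_mm_per_h ** -0.87
    lam = 2.55 * rate_mm_per_h ** -0.48
    return n0, lam


def number_density(rate_mm_per_h: float) -> float:
    """Total flakes per m^3: the integral of N0 * exp(-Lambda * D) over D >= 0 equals N0 / Lambda."""
    n0, lam = gunn_marshall_params(rate_mm_per_h)
    return n0 / lam


def sample_diameters_mm(rate_mm_per_h: float, n: int, rng: random.Random) -> List[float]:
    """Draw n melted-equivalent diameters (mm); the normalized distribution is exponential with rate Lambda."""
    _, lam = gunn_marshall_params(rate_mm_per_h)
    return [rng.expovariate(lam) for _ in range(n)]


if __name__ == "__main__":
    rate = 2.5  # mm/h, the rate used in the figure above
    n0, lam = gunn_marshall_params(rate)
    print(f"N0 = {n0:.0f} m^-3 mm^-1, Lambda = {lam:.2f} mm^-1, "
          f"density = {number_density(rate):.0f} flakes per m^3")
    print(sample_diameters_mm(rate, 5, random.Random(0)))
```

Under this assumption, heavier snowfall lowers Λ, which shifts the distribution toward larger flakes.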

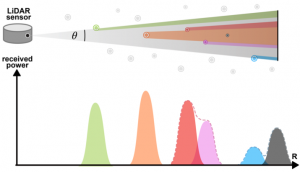

Snow Scatter Model:

To model the interaction with snow particles, we apply scattering theory: we sample individual snowflakes for each light path and integrate the backscattered light. Each scattering interaction reduces the power received from the background target. If the echo from a snow particle exceeds the echo from the true background target, the snow particle causes a false detection. For more details, please refer to the manuscript.
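The decision rule in this paragraph can be sketched in a simplified form: a snowflake produces a false point only if its echo is stronger than the attenuated target echo. The sketch assumes that echo power scales with reflectivity times the illuminated share of the beam divided by range squared. It also assumes that each flake blocks a given fraction of the beam. Unlike the model described above, it does not integrate the backscattered light over time. `Snowflake`, `strongest_echo`, and the default snow reflectivity of 0.9 are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Snowflake:
    range_m: float            # distance from the sensor along the beam (m)
    occluded_fraction: float  # share of the beam cross-section blocked by this flake (0..1)


def strongest_echo(target_range: float, target_reflectivity: float,
                   flakes: List[Snowflake],
                   snow_reflectivity: float = 0.9) -> Tuple[float, float, bool]:
    """Return (range_m, relative_power, is_snow) of the strongest echo along one beam.

    Simplified model (assumption): an echo's power is reflectivity * illuminated
    fraction / range**2, each flake removes its share of the remaining beam power,
    and the return path, pulse shape, and noise are ignored.
    """
    remaining = 1.0  # fraction of the emitted beam still propagating
    echoes = []
    for flake in sorted(flakes, key=lambda f: f.range_m):
        if not 0.0 <= flake.occluded_fraction <= 1.0:
            raise ValueError("occluded_fraction must lie in [0, 1]")
        if flake.range_m <= 0.0 or flake.range_m >= target_range:
            continue  # only flakes between the sensor and the target matter
        illuminated = remaining * flake.occluded_fraction
        echoes.append((flake.range_m, snow_reflectivity * illuminated / flake.range_m ** 2, True))
        remaining -= illuminated  # light scattered by this flake no longer reaches the target
    echoes.append((target_range, target_reflectivity * remaining / target_range ** 2, False))
    return max(echoes, key=lambda e: e[1])


if __name__ == "__main__":
    # A large flake close to the sensor outshines a distant, dim target (false detection) ...
    print(strongest_echo(20.0, 0.5, [Snowflake(2.0, 0.5)]))
    # ... while a small, distant flake only attenuates the target echo.
    print(strongest_echo(20.0, 0.5, [Snowflake(15.0, 0.1)]))
```

The 1/R² factor explains why nearby flakes are the main source of false points. A close flake can outshine a far target even when it blocks only part of the beam.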

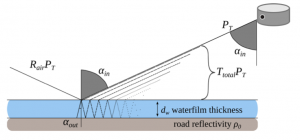

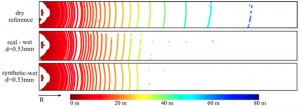

Wet Surface Model:

To simulate wet road surfaces, we model the interaction of each ray with a thin water film using a geometric optics model based on the Fresnel equations, shown on the left. This leads to a realistic degradation of ground points, shown on the right for a water height of 0.53 mm. For details, please refer to the manuscript.
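The Fresnel part of the wet-surface model can be illustrated with a short sketch. It computes the unpolarized Fresnel reflectance R of an air-water interface. As a simplifying assumption, it treats a diffuse ground return as crossing the water surface twice, so that a fraction (1 - R)^2 survives. It ignores the film thickness (such as the 0.53 mm above), absorption, and multiple reflections. The refractive index 1.33 and the names `fresnel_reflectance` and `wet_ground_factor` are assumptions for illustration.

```python
import math

N_AIR = 1.0
N_WATER = 1.33  # approximate refractive index of water (assumption; varies slightly with wavelength)


def fresnel_reflectance(theta_i: float, n1: float = N_AIR, n2: float = N_WATER) -> float:
    """Unpolarized Fresnel reflectance for light passing from medium n1 into n2 at incidence angle theta_i (rad)."""
    cos_i = math.cos(theta_i)
    sin_t = n1 / n2 * math.sin(theta_i)  # Snell's law
    if sin_t >= 1.0:
        return 1.0  # total internal reflection (only possible when n1 > n2)
    cos_t = math.sqrt(1.0 - sin_t ** 2)
    r_s = ((n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)) ** 2  # s-polarized
    r_p = ((n1 * cos_t - n2 * cos_i) / (n1 * cos_t + n2 * cos_i)) ** 2  # p-polarized
    return 0.5 * (r_s + r_p)


def wet_ground_factor(theta_i: float) -> float:
    """Fraction of a diffuse ground return that survives entering and leaving the water film.

    By reciprocity the reflectance is the same on the way in and out; absorption,
    multiple reflections, surface roughness, and film thickness are ignored.
    """
    r = fresnel_reflectance(theta_i)
    return (1.0 - r) ** 2


if __name__ == "__main__":
    for deg in (0, 30, 60, 80, 85, 89):
        theta = math.radians(deg)
        print(f"{deg:2d} deg: R = {fresnel_reflectance(theta):.3f}, "
              f"surviving fraction = {wet_ground_factor(theta):.3f}")
```

For a sensor mounted at height h, a ground point at horizontal distance d is hit at an incidence angle of atan(d / h). Distant ground points are therefore hit closer to grazing incidence, and in this sketch they lose most of their return to specular reflection.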

Qualitative Experiments

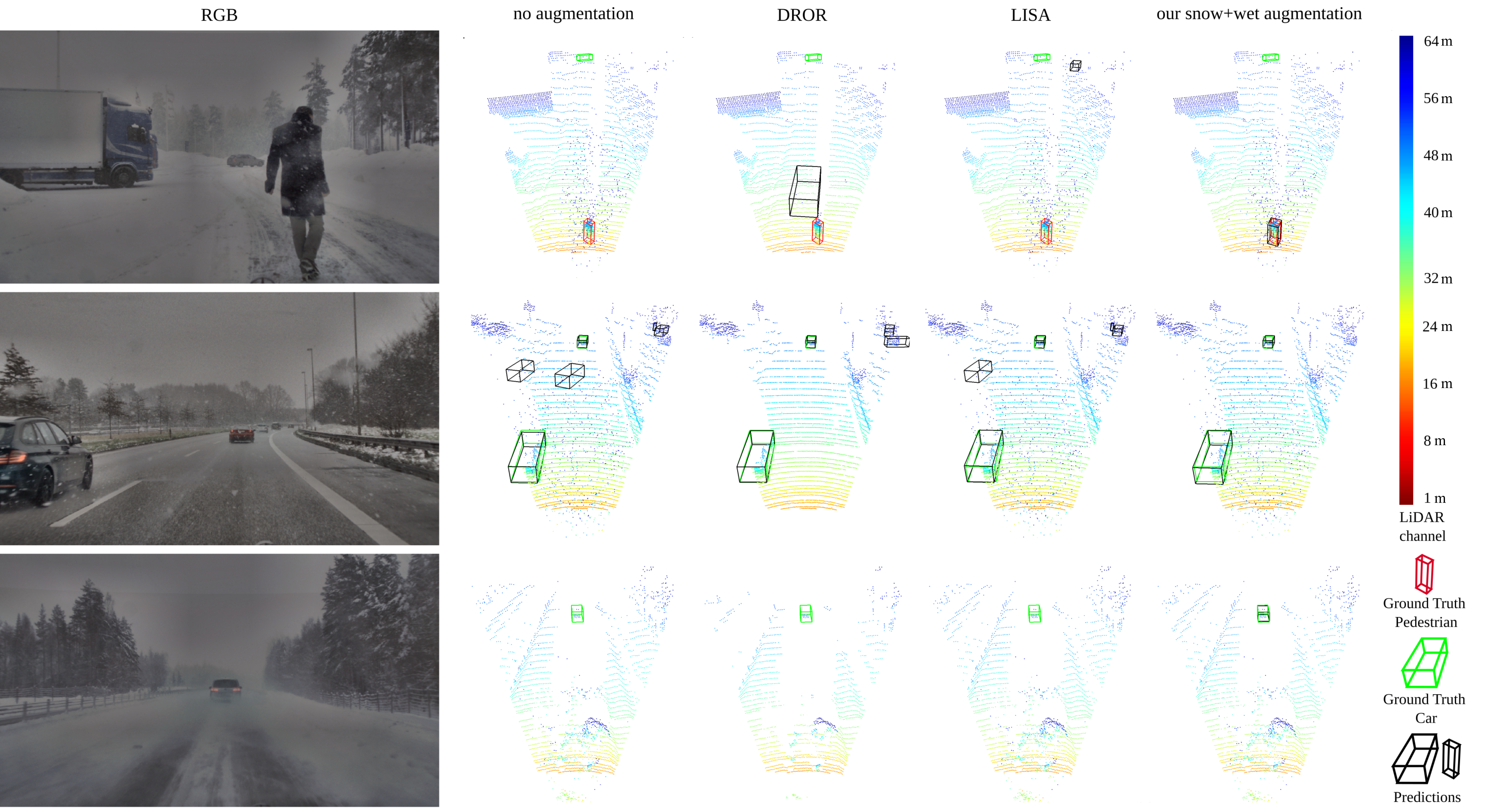

Qualitative comparison of PV-RCNN on samples from STF [1] with heavy snowfall. The leftmost column shows the corresponding RGB images. The remaining columns show the LiDAR point clouds with ground-truth boxes and the predictions obtained with the clear-weather baseline (“no augmentation”), removal of cluttered points using DROR, a reference snow augmentation from LISA, and our full simulation (“our snow+wet augmentation”). In the top row, all other methods fail to detect the pedestrian among the cluttered points. In the second row, the reference methods produce false positives among the scattered points. In the last row, only our method sees through the whirled-up snow and detects the leading vehicle.

Related Work

[1] Mario Bijelic, Tobias Gruber, Fahim Mannan, Florian Kraus, Werner Ritter, Klaus Dietmayer, and Felix Heide. Seeing through fog without seeing fog: Deep multimodal sensor fusion in unseen adverse weather. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.