Learned Multi-aperture Color-coded Optics for Snapshot Hyperspectral Imaging

- Zheng Shi*
- Xiong Dun*
- Haoyu Wei
- Siyu Dong
- Zhanshan Wang
- Xinbin Cheng
- Felix Heide
- Yifan (Evan) Peng

SIGGRAPH Asia 2024

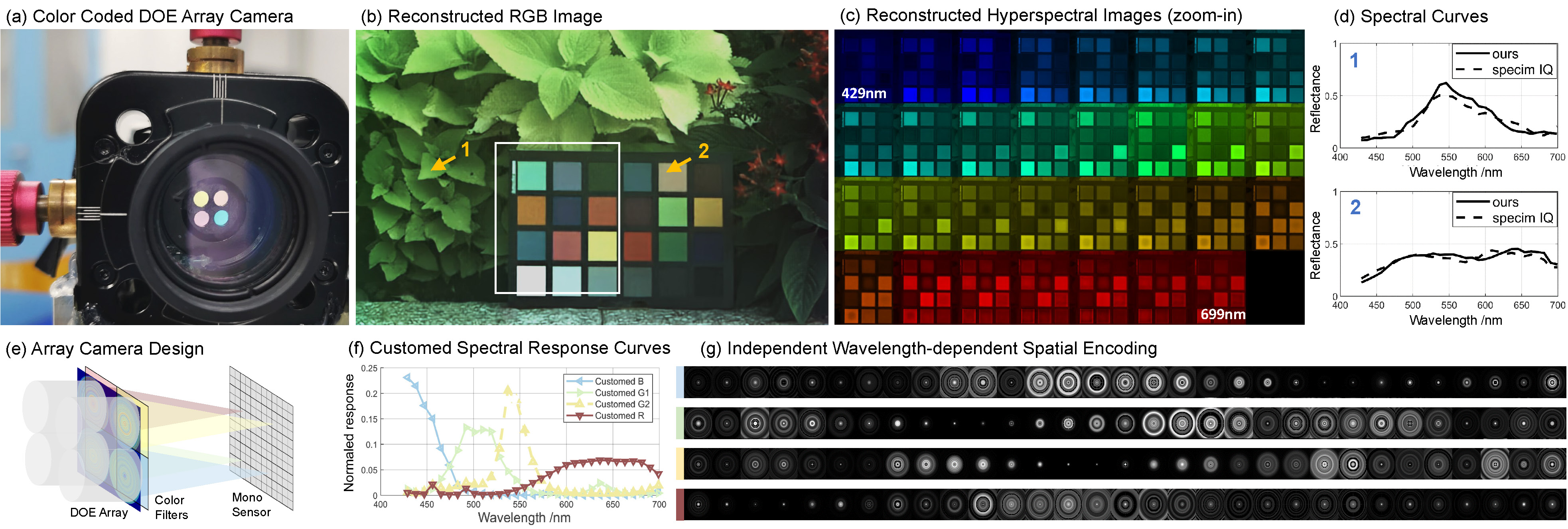

We propose a snapshot hyperspectral imager with multi-aperture color-coded optics, illustrated in (a/e), providing customized independent spatial and spectral encoding for different channels, as shown in (f/g). We experimentally validate the proposed method in both indoor and outdoor settings, recovering up to 31 spectral bands within the 429–700 nm range, closely matching the reference captured by a spectral-scan hyperspectral camera, as shown in (b-d).

Learned optics, which incorporate lightweight diffractive optics, coded-aperture modulation, and specialized image-processing neural networks, have recently garnered attention in the field of snapshot hyperspectral imaging (HSI). Conventional methods typically rely on a single lens element paired with an off-the-shelf color sensor. Despite their widespread availability, these setups present two inherent limitations. First, the Bayer sensor’s spectral response curves are not optimized for HSI applications, limiting the spectral fidelity of the reconstruction. Second, single-lens designs rely on a single diffractive optical element (DOE) to simultaneously encode spectral information and maintain spatial resolution across all wavelengths, which constrains spectral encoding capabilities.

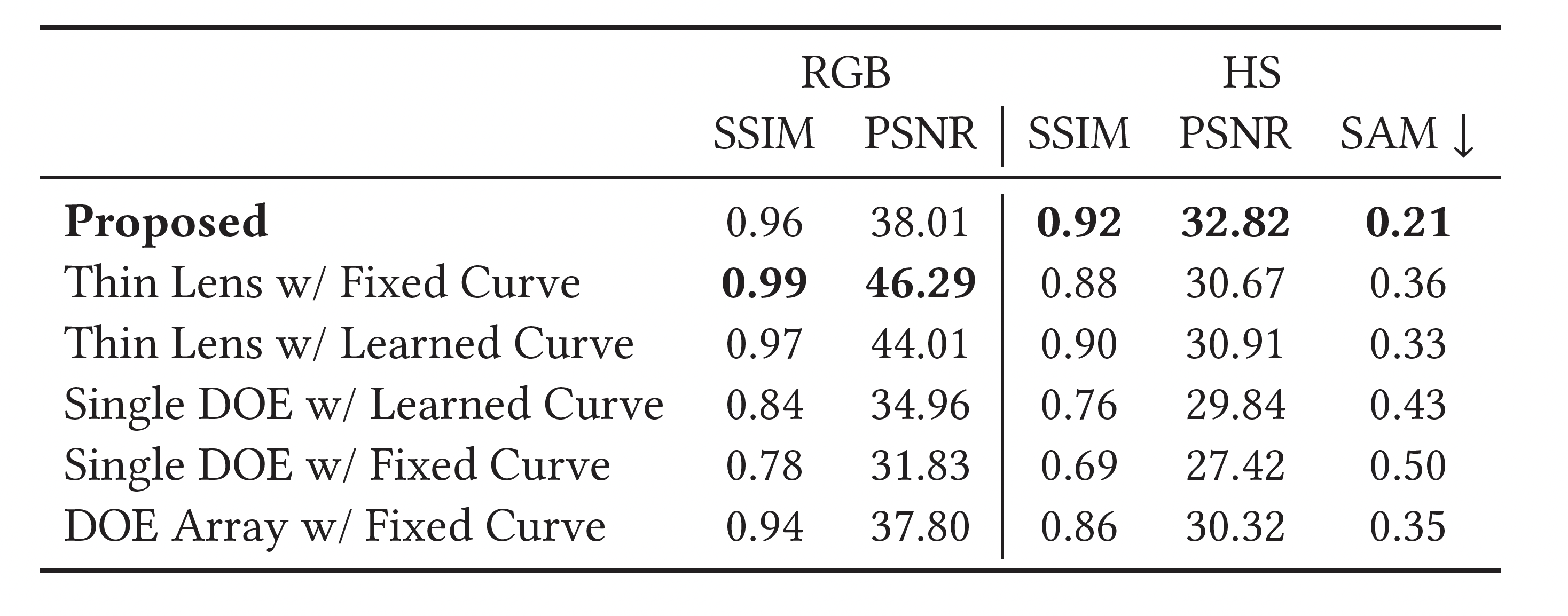

This work investigates a multi-channel lens array combined with aperture-wise color filters, all co-optimized alongside an image reconstruction network. This configuration enables independent spatial encoding and spectral response for each channel, improving optical encoding across both spatial and spectral dimensions and outperforming existing single-lens approaches by over 5 dB PSNR in reconstruction quality.
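To put the PSNR figure in context, the following minimal sketch computes PSNR and converts a dB gain into the corresponding reduction in mean squared error. The function names and the assumption that images are scaled to `[0, peak]` are illustrative and not taken from the paper's evaluation code.

```python
import numpy as np

def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, peak]."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def mse_ratio_for_gain(gain_db):
    """Factor by which the MSE shrinks for a given PSNR gain in dB."""
    return 10.0 ** (gain_db / 10.0)
```

Under this definition, a 5 dB PSNR gain corresponds to roughly a 3.16x lower mean squared error at the same peak value.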

Experimental Snapshot Hyperspectral Imaging

The proposed method allows for snapshot hyperspectral imaging in both outdoor (Scenes 1 and 2) and indoor (Scene 3) settings. We validate the spectral accuracy of the imaging method by comparing against the Specim IQ hyperspectral camera. Each scene is first captured with the Specim IQ camera as a reference for qualitative evaluation, and then with our prototype. We apply a homography-based calibration method to register the images from the four sensor channels and adjust the white balance based on the white checker in the scene. These pre-processed quad-channel images serve as input to our neural network, which reconstructs HS images across 31 channels as well as a high-fidelity RGB image. Our results confirm the method’s ability to recover up to 31 spectral bands in the 429–700 nm range, demonstrating its effectiveness across varied environments.
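The two pre-processing steps can be sketched as follows. This is a minimal illustration and not the calibration code used for the prototype: it assumes matched point pairs between two sub-channel images are already available, estimates the homography with a standard direct linear transform, and normalizes channels so that their means over a white patch agree. The helper names, the patch convention, and the normalization target are all assumptions, and image resampling after the homography is omitted.

```python
import numpy as np

def estimate_homography(src_pts, dst_pts):
    """Direct linear transform: find 3x3 H with dst ~ H @ src from >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src_pts, dst_pts):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The homography is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=np.float64))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]

def apply_homography(h, pts):
    """Map (N, 2) points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]

def white_balance(channels, patch):
    """Scale each channel of a (C, H, W) stack so all white-patch means match.

    patch is a (row_slice, col_slice) pair covering the white checker (assumed known).
    """
    channels = np.asarray(channels, dtype=np.float64)
    rows, cols = patch
    patch_means = channels[:, rows, cols].mean(axis=(1, 2))
    gains = patch_means.mean() / patch_means
    return channels * gains[:, None, None]
```

In practice, one sub-channel would serve as the reference view, and the other three would be warped onto it before white balancing.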

For each scene, we include: (a) sensor captures comprising four sub-channel images (R, G1, G2, B); (b/e) RGB reconstructions compared to Specim IQ references; (c/f) close-up views of a cropped region across all 31 channels; and (d) spectral validation plots for four sampled points on the captured scene.

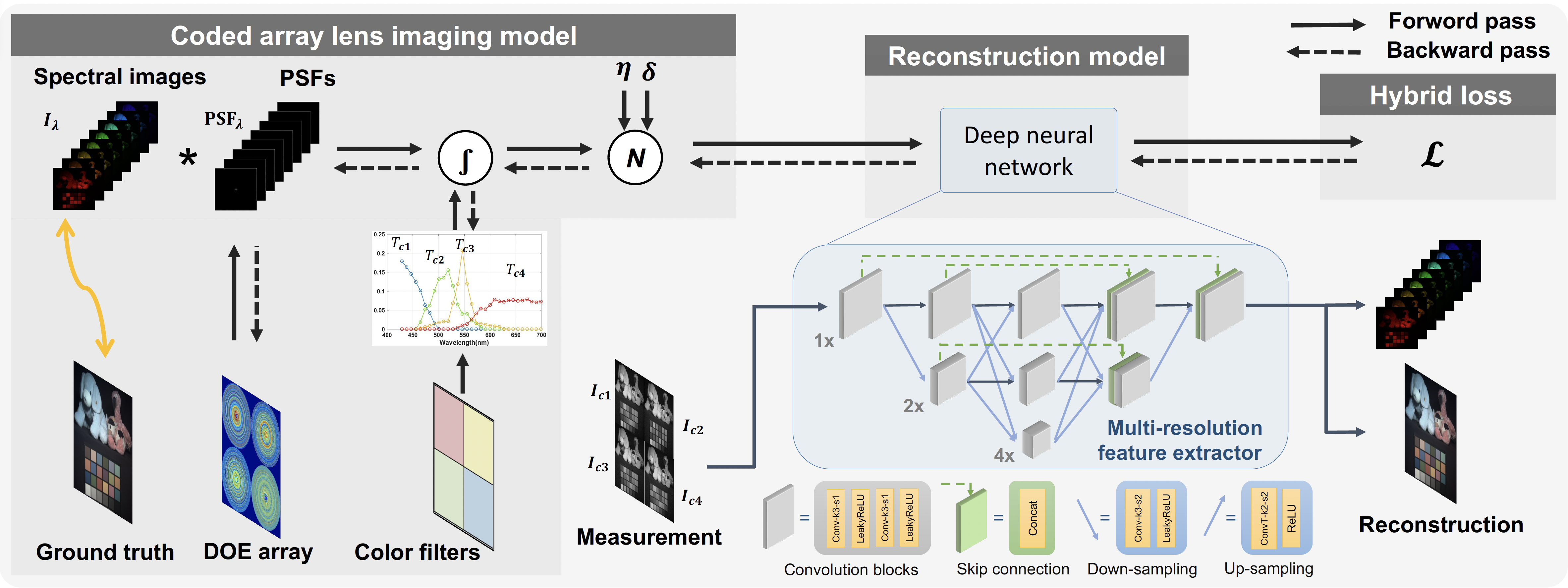

Learning Multi-Aperture Color-Coded Optics for Snapshot Hyperspectral Imaging

We design the proposed multi-aperture DOE array by jointly optimizing the array, the aperture-wise color filters, and the image reconstruction network using a hybrid loss function. During each forward pass, the ground truth spectral images are first convolved with the PSFs of the DOE array and then multiplied by the response curves of the color filters. Noise is added to the simulated sensor image, which is then integrated over the monochrome sensor’s response for each sub-lens channel: B, G1, G2, and R. These images are input into the multi-resolution feature extractor of the image reconstruction network to recover the final hyperspectral (HS) and RGB images.
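The forward pass described above can be sketched in NumPy as follows. This is an illustrative, non-differentiable stand-in for the simulation used in training, not the authors' implementation. The array shapes, the circular FFT convolution, the additive Gaussian noise model, and the choice to add noise after the spectral integration are all assumptions made for the sketch.

```python
import numpy as np

def fft_convolve2d(image, psf):
    """Circular 2D convolution via FFT; psf has the image's shape and is centered."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * otf))

def simulate_captures(spectral, psfs, filters, sensor_response,
                      noise_std=0.01, rng=None):
    """Simulate the sub-images of a multi-aperture, color-coded camera.

    spectral:        (L, H, W) ground-truth spectral image
    psfs:            (C, L, H, W) PSF per aperture and wavelength
    filters:         (C, L) color-filter transmission per aperture
    sensor_response: (L,) monochrome sensor response
    returns:         (C, H, W) simulated sub-images (e.g. B, G1, G2, R)
    """
    rng = np.random.default_rng() if rng is None else rng
    n_channels, n_bands = filters.shape
    captures = np.zeros((n_channels,) + spectral.shape[1:])
    for c in range(n_channels):
        for l in range(n_bands):
            # Spatial encoding: blur each band with this aperture's PSF.
            blurred = fft_convolve2d(spectral[l], psfs[c, l])
            # Spectral encoding: weight by filter and sensor response, then sum.
            captures[c] += filters[c, l] * sensor_response[l] * blurred
    # Assumed noise model: additive Gaussian on the integrated sub-images.
    captures += rng.normal(0.0, noise_std, size=captures.shape)
    return captures
```

For end-to-end optimization, the same steps would be written in an automatic-differentiation framework so that gradients of the loss reach the DOE and color-filter parameters.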

Independent Spatial and Spectral Encoding for Different Color Channels

We employ aperture-wise color filters combined with a multi-aperture diffractive lens element array, rather than the traditional combination of a single DOE and a pre-designed Bayer color filter array (CFA). This configuration not only potentially simplifies the manufacturing process but also significantly improves spectral encoding capability by allowing for independent spatial and spectral encoding across different color channels.

We validate the benefits of the proposed multi-aperture setup by analyzing the performance enhancements from spatial and spectral modulation, as well as the effects of independent versus shared spatial modulation across channels. To this end, we compare the proposed approach to variants using a fixed Bayer RGGB color filter and/or a single shared DOE across all color channels.

Validation of Multi-Aperture Configuration. The reported ablation experiments (see paper for details) confirm the effectiveness of the method with or without the learned array and color filter.

Related Work

[1] Zheng Shi, Yuval Bahat, Seung-Hwan Baek, Qiang Fu, Hadi Amata, Xiao Li, Praneeth Chakravarthula, Wolfgang Heidrich, and Felix Heide. Seeing Through Obstructions with Diffractive Cloaking. SIGGRAPH 2022

[2] Suhyun Shin, Seokjun Choi, Felix Heide, and Seung-Hwan Baek. Dispersed Structured Light for Hyperspectral 3D Imaging. CVPR 2024

[3] Xiong Dun, Hayato Ikoma, Gordon Wetzstein, Zhanshan Wang, Xinbin Cheng, and Yifan Peng. Learned rotationally symmetric diffractive achromat for full-spectrum computational imaging. Optica 2020