Adversarial Imaging Pipelines

- Buu Phan
- Fahim Mannan
- Felix Heide

CVPR 2021

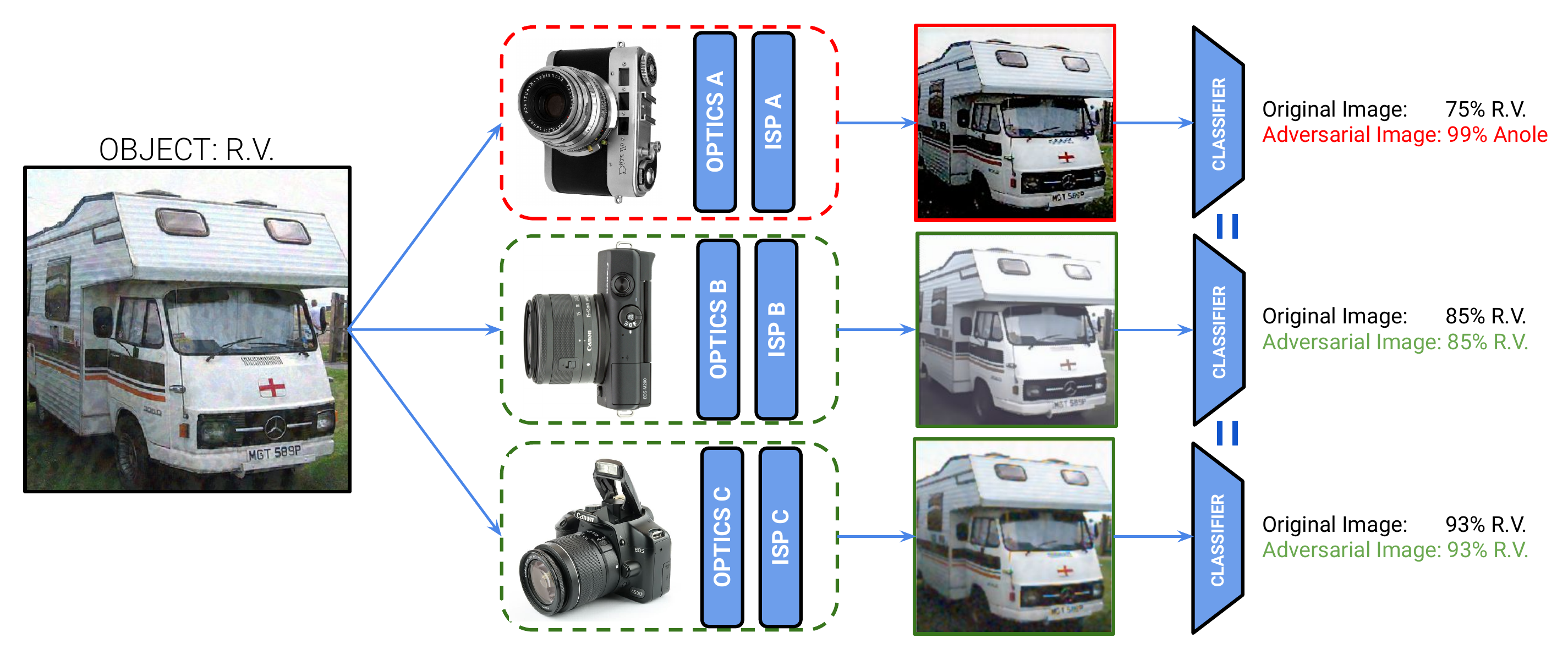

We examine and develop an adversarial attack that deceives a specific camera ISP and optics while leaving others intact, using the same downstream classifier (left-figure). Our method is validated using state-of-the-art automotive hardware ISPs and optics.

Adversarial attacks play a critical role in understanding deep neural network predictions and improving their robustness. Existing attack methods aim to deceive convolutional neural network (CNN)-based classifiers by manipulating RGB images that are fed directly to the classifiers. However, these approaches typically neglect the influence of the camera optics and image processing pipeline (ISP) that produce the network inputs. ISPs transform RAW measurements to RGB images and traditionally are assumed to preserve adversarial patterns. In fact, these low-level pipelines can destroy, introduce or amplify adversarial patterns that can deceive a downstream detector. As a result, optimized patterns can become adversarial for the classifier after being transformed by a certain camera ISP or optical lens system but not for others. In this work, we examine and develop such an attack that deceives a specific camera ISP while leaving others intact, using the same downstream classifier. We frame this camera-specific attack as a multi-task optimization problem, relying on a differentiable approximation for the ISP itself. We validate the proposed method using recent state-of-the-art automotive hardware ISPs, achieving 92% fooling rate when attacking a specific ISP. We demonstrate physical optics attacks with 90% fooling rate for a specific camera lens.

Paper

Buu Phan, Fahim Mannan, Felix Heide

Adversarial Imaging Pipelines

CVPR 2021

Video Summary

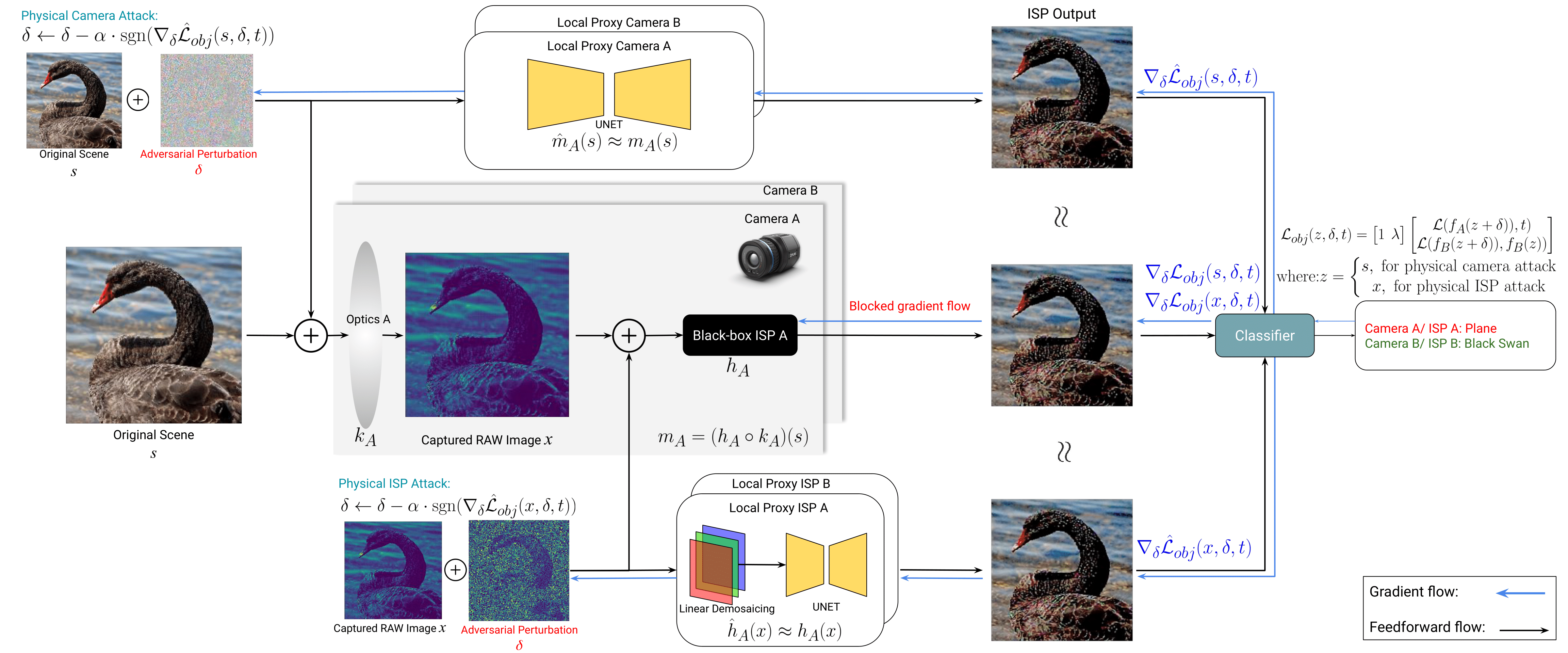

Method Overview

Overview of the proposed targeted camera attack. We perturb either the displayed scene (physical camera attack) or the captured RAW image (physical ISP attack), whose label is "black swan", such that it is misclassified as "plane" by pipeline A but not by pipeline B. To find such an attack, we solve an optimization problem using gradients estimated from proxy approximations of the black-box, non-differentiable imaging modules. The objective function is a weighted sum of two cross-entropy losses: the first term encourages the attack to fool pipeline A, and the second term prevents it from changing the original prediction probability of pipeline B.
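As a rough illustration of the objective described above, the following NumPy sketch evaluates a weighted sum of two cross-entropy terms. The function names, the `weight_b` parameter, and the choice to compare pipeline B against its full original probability vector are assumptions made for this example; the exact formulation is the one given in the paper.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax of a 1-D logit vector."""
    shifted = logits - np.max(logits)
    return shifted - np.log(np.sum(np.exp(shifted)))

def cross_entropy(target_probs, logits):
    """Cross-entropy between a target distribution and softmax(logits)."""
    return -np.sum(target_probs * log_softmax(logits))

def camera_specific_loss(logits_a, logits_b, target_class,
                         original_probs_b, weight_b=1.0):
    """Hypothetical helper: weighted sum of the two terms.

    logits_a: classifier logits for the image produced by pipeline A (attacked)
    logits_b: classifier logits for the image produced by pipeline B (left intact)
    original_probs_b: pipeline B's class probabilities before the attack
    """
    one_hot = np.zeros_like(logits_a, dtype=float)
    one_hot[target_class] = 1.0
    fool_a = cross_entropy(one_hot, logits_a)           # push A toward the target class
    keep_b = cross_entropy(original_probs_b, logits_b)  # keep B near its original prediction
    return fool_a + weight_b * keep_b
```

Minimizing this quantity over the perturbation lowers the first term when pipeline A predicts the target class and lowers the second when pipeline B's output stays close to its unattacked prediction.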

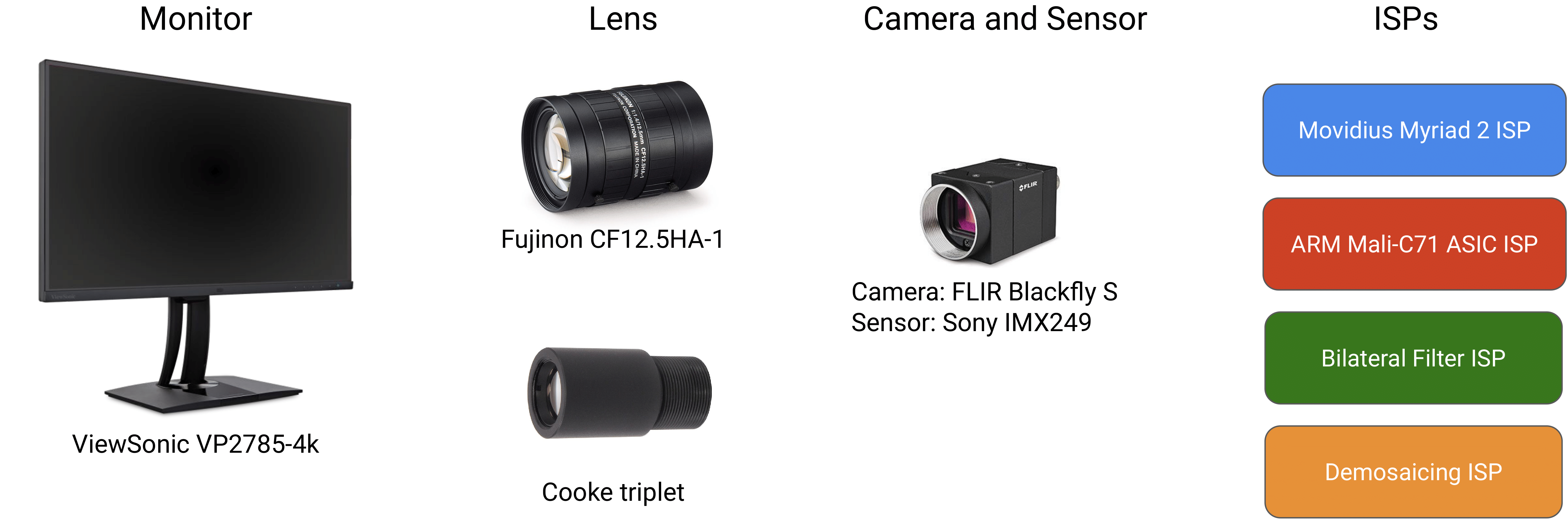

Hardware and ISPs

Image Processing Pipelines.

We evaluate our method on two black-box, non-differentiable hardware ISPs: the ARM Mali C71 and the Movidius Myriad 2. In addition to the two hardware ISPs, we also jointly evaluate two differentiable ISPs. The first one only performs bilinear demosaicing and is referred to as the Demosaicing ISP. The second one performs a bilinear demosaicing operation followed by bilateral filtering and is referred to as the Bilateral Filter ISP. All ISPs are described in detail in the Supplementary Document.
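To make the Demosaicing ISP concrete, here is a minimal NumPy sketch of bilinear demosaicing. It assumes an RGGB Bayer layout and an input of at least 2 × 2 pixels; neither the layout nor the border handling is stated on this page, and the function names are hypothetical.

```python
import numpy as np

def conv3x3(image, kernel):
    """Zero-padded 3x3 correlation built from shifted slices."""
    h, w = image.shape
    padded = np.pad(image, 1)
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def bilinear_demosaic(raw):
    """Bilinear demosaicing of a single-channel Bayer mosaic (RGGB assumed)."""
    raw = np.asarray(raw, dtype=float)
    h, w = raw.shape
    rows, cols = np.mgrid[0:h, 0:w]
    masks = {
        "r": (rows % 2 == 0) & (cols % 2 == 0),
        "g": (rows % 2) != (cols % 2),
        "b": (rows % 2 == 1) & (cols % 2 == 1),
    }
    k_rb = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]], dtype=float)
    k_g = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]], dtype=float)
    channels = []
    for name, kernel in (("r", k_rb), ("g", k_g), ("b", k_rb)):
        mask = masks[name].astype(float)
        # Normalised convolution: average only over neighbours that carry a sample,
        # which also handles the image borders.
        channels.append(conv3x3(raw * mask, kernel) / conv3x3(mask, kernel))
    return np.stack(channels, axis=-1)
```

Every step is a linear filter, so gradients pass through it directly, which is consistent with this ISP being described as differentiable.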

Optics.

We use a Fujinon CF12.5HA-1 lens with a 54° field of view as the default lens for our experiments. Because this compound lens is a proprietary design, we also evaluate the proposed attacks on a Cooke triplet that was optimized for image quality using Zemax Hammer optimization and fabricated from PMMA. Details are described in the Supplementary Document.

Physical Setup.

To validate the proposed method in a physical setup, we display the attacked images on the ViewSonic VP2785-4k monitor. This setup allows us to collect large-scale evaluation statistics in a physical setup, departing from sparse validation examples presented in existing works with RGB printouts. We capture images using a FLIR Blackfly S camera employing a Sony IMX249 sensor. The camera is positioned on a tripod and mounted such that the optical axis aligns with the center of the monitor. The camera and monitor are connected to a computer, which is used to jointly display and capture thousands of validation images. Each lens-assembly is focused at infinity with the screen beyond the hyperfocal distance.

Selected Results

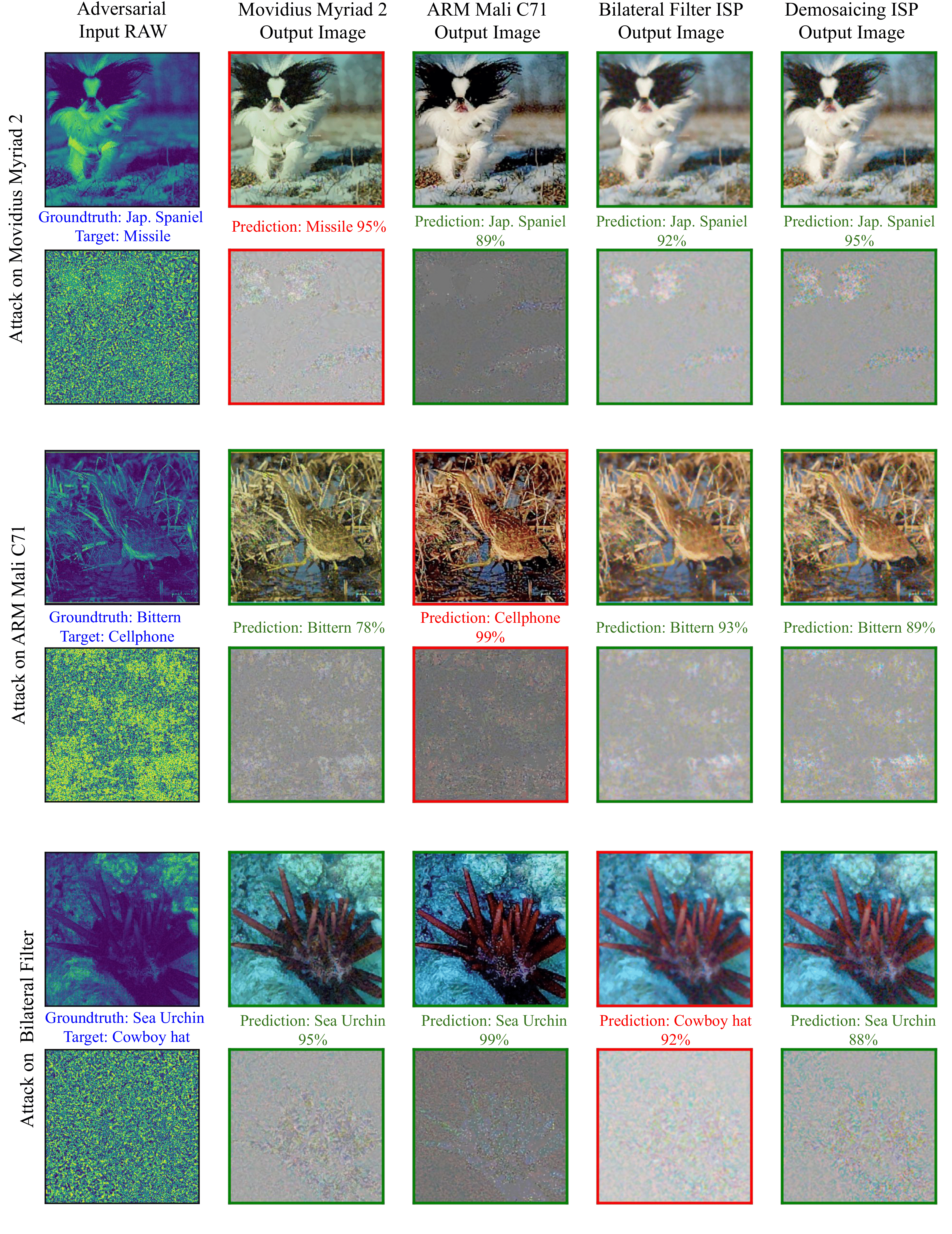

Qualitative Results on Physical ISP Attacks.

In this attack setting, we acquire the RAW images by displaying the images on the screen and capturing them with the FLIR Blackfly S camera. These RAW images are then fed to a hardware ISP, and the adversarial perturbation is added directly to the RAW image. We use ɛ = 2000 to attack the RAW images reliably while keeping the perturbation imperceptible. For each image, we target a random class and use a total of 30 iterations with step size α = 50. Each pair of rows (top to bottom) shows the attack on the Movidius Myriad 2, ARM Mali C71, and Bilateral Filter ISP, respectively. For each targeted ISP attack, the first column shows the adversarial RAW (top) and the perturbation (bottom). The next four columns show the associated RGB images and perturbations from the ISPs. The RGB perturbation is visualized by subtracting the ISP output for the unattacked RAW from the ISP output for the adversarial RAW.
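The settings above (a perturbation bound ɛ, a step size α, and a fixed number of iterations) fit an iterative, bounded update. The sketch below assumes a projected signed-gradient step, which is not named on this page, and uses a hypothetical `grad_fn` callback in place of the gradient estimated through the ISP proxy.

```python
import numpy as np

def projected_sign_attack(raw, grad_fn, epsilon=2000.0, alpha=50.0, iterations=30):
    """Iteratively perturb `raw` while keeping the perturbation within +/- epsilon.

    grad_fn(x) must return the gradient of the attack loss with respect to x;
    here it is a placeholder for gradients obtained through a proxy pipeline.
    """
    raw = np.asarray(raw, dtype=float)
    delta = np.zeros_like(raw)
    for _ in range(iterations):
        grad = grad_fn(raw + delta)
        delta = delta - alpha * np.sign(grad)      # step that decreases the attack loss
        delta = np.clip(delta, -epsilon, epsilon)  # project back into the epsilon bound
    return raw + delta

# Toy usage: a quadratic loss stands in for the classifier and ISP proxy.
target = np.full((4, 4), 10000.0)
adversarial = projected_sign_attack(np.zeros((4, 4)), lambda x: x - target)
```

The same loop would apply to the displayed image in the optics setting by swapping in the corresponding bound and step size; clipping to the valid RAW or display value range is omitted here for brevity.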

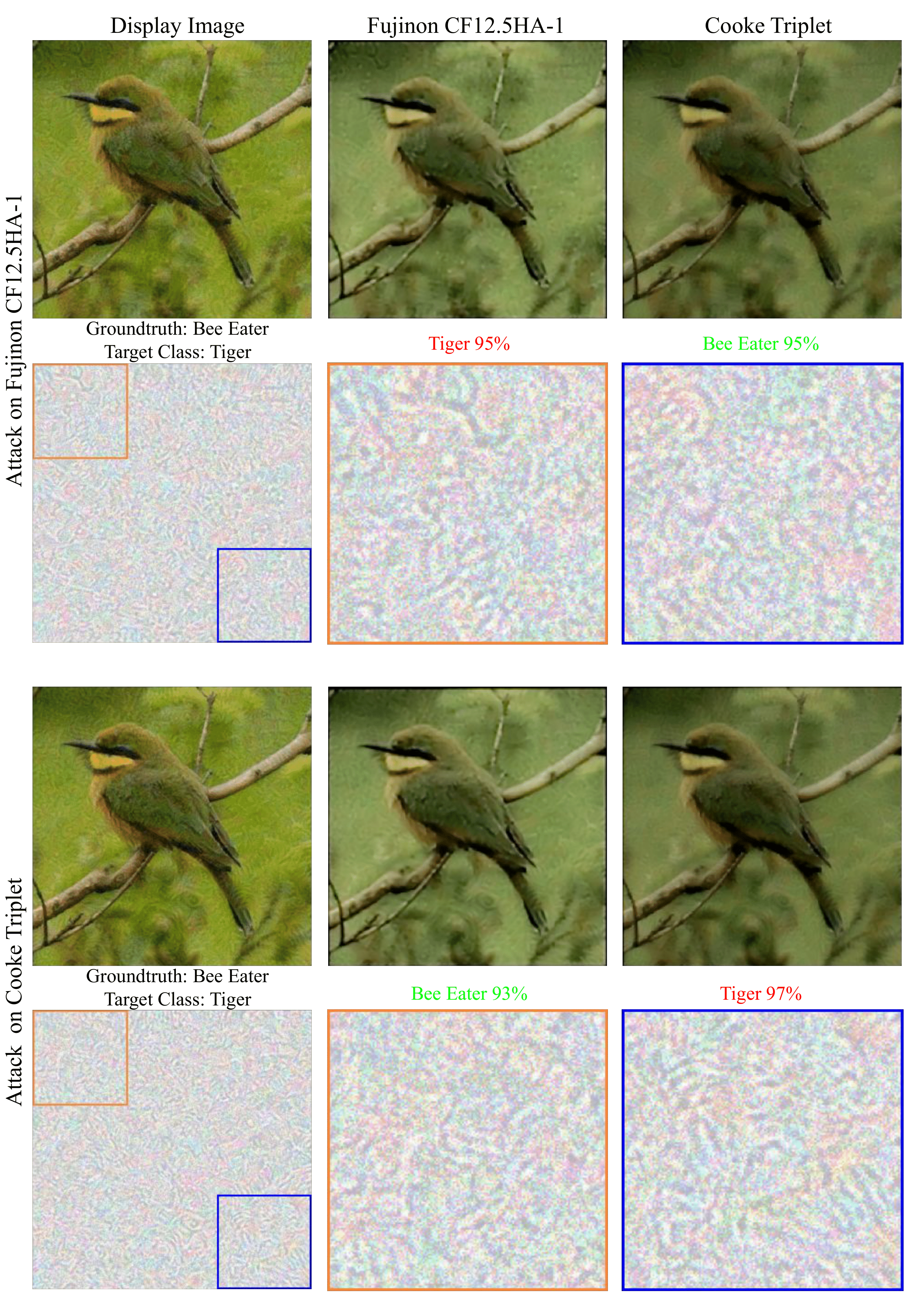

Qualitative Results on Physical Optics Attacks.

We extend the proposed method to target a compound optical module instead of a hardware ISP. The proxy function now models the entire transformation from the displayed image through the optics, sensor, and ISP processing to the final RGB image that is fed to the image classifier. In these experiments, all pipelines use the same ARM Mali C71 ISP, which allows us to assess adversarial patterns that target only one optical system but not another. For each attacked image, we use ɛ = 0.08, target a random class, and use a total of 30 iterations with step size α = 0.005. We visualize the targeted optics attack on the Fujinon CF12.5HA-1 and Cooke triplet optics for 4 different images. For each attack, we show the displayed adversarial and post-processed images (top row). In the bottom row, we visualize (from left to right) the additive perturbation on the displayed image and its zoomed-in 150 × 150 top-left and bottom-right regions.

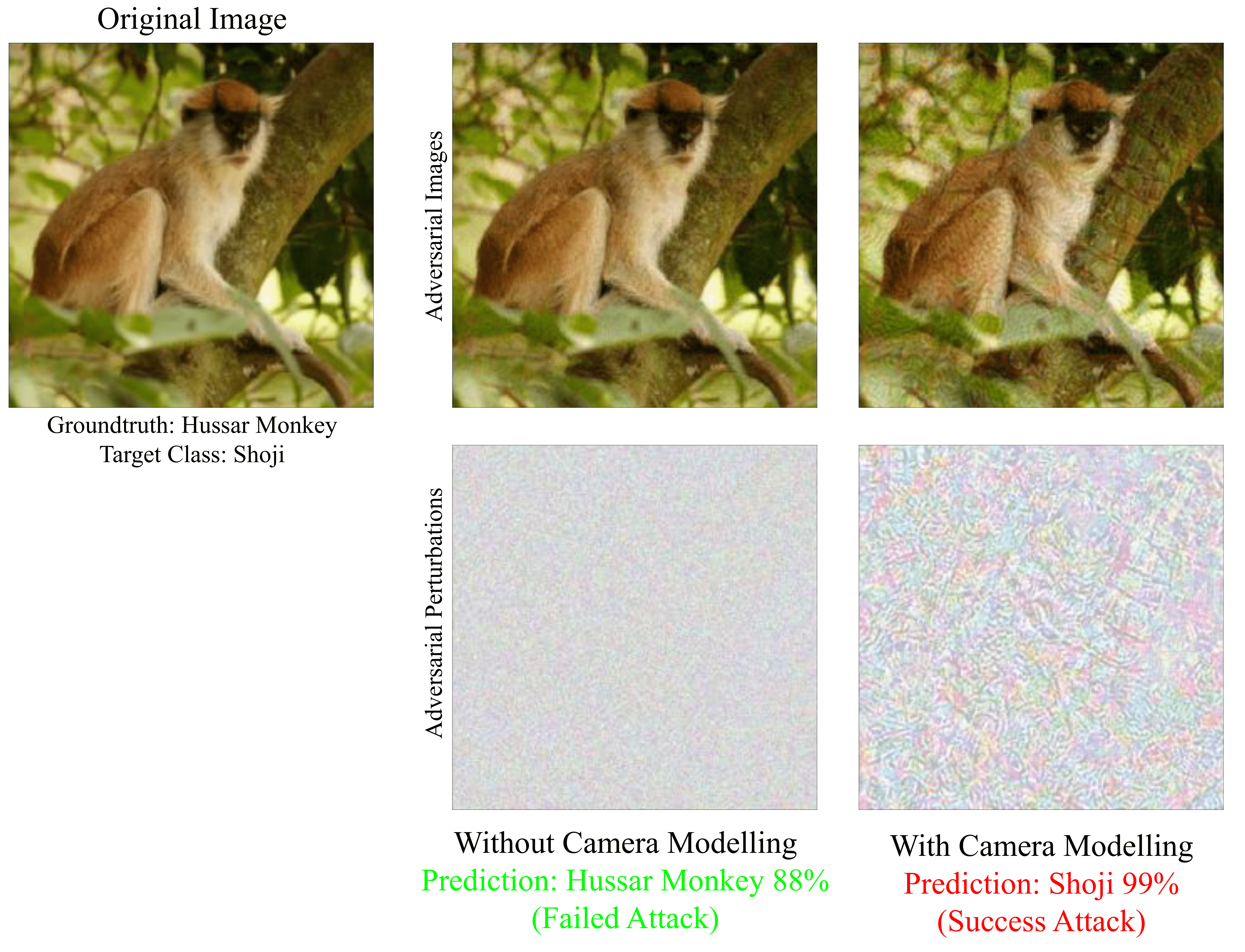

Effectiveness of Camera Modeling in Physical Adversarial Attack.

Current approaches for adversarial attacks on physical objects neglect the transformation that happens in the camera, assuming that it preserves the adversarial pattern. We show here that this assumption is flawed by comparing the attack success rate with and without this transformation in the model, using the Cooke triplet optics and the ARM Mali C71 ISP. When the transformation is assumed to preserve the adversarial pattern, the success rate is 0.1%. In contrast, when the transformation is modeled, the attack achieves a success rate of up to 95%. We visualize and compare the perturbations in Figure 5, where it can be observed that, although both attacks use the same optimization algorithm and a perturbation bound of 0.08, the perturbation obtained when we model the camera transformation is more visible and structured.