Polarization Wavefront Lidar: Learning Large Scene Reconstruction from Polarized Wavefronts

Conventional lidar sensors provide centimeter-accurate distance information by emitting laser pulses into a scene and measuring the time-of-flight (ToF) of the reflection. However, the polarization of the received light, which depends on the surface orientation and material properties, is usually not considered. As such, the polarization modality has the potential to improve scene reconstruction beyond distance measurements. In this work, we introduce a novel long-range polarization wavefront lidar sensor (PolLidar) that modulates the polarization of the emitted and received light. Departing from conventional lidar sensors, PolLidar allows access to the raw time-resolved polarimetric wavefronts. We leverage polarimetric wavefronts to estimate normals, distance, and material properties in outdoor scenarios with a novel learned reconstruction method. We find that the proposed method improves normal and distance reconstruction by 53% mean angular error and 41% mean absolute error compared to existing shape-from-polarization (SfP) and ToF methods.

Paper

Polarization Wavefront Lidar: Learning Large Scene Reconstruction from Polarized Wavefronts

Dominik Scheuble*, Chenyang Lei*, Seung-Hwan Baek, Mario Bijelic, Felix Heide

CVPR 2024

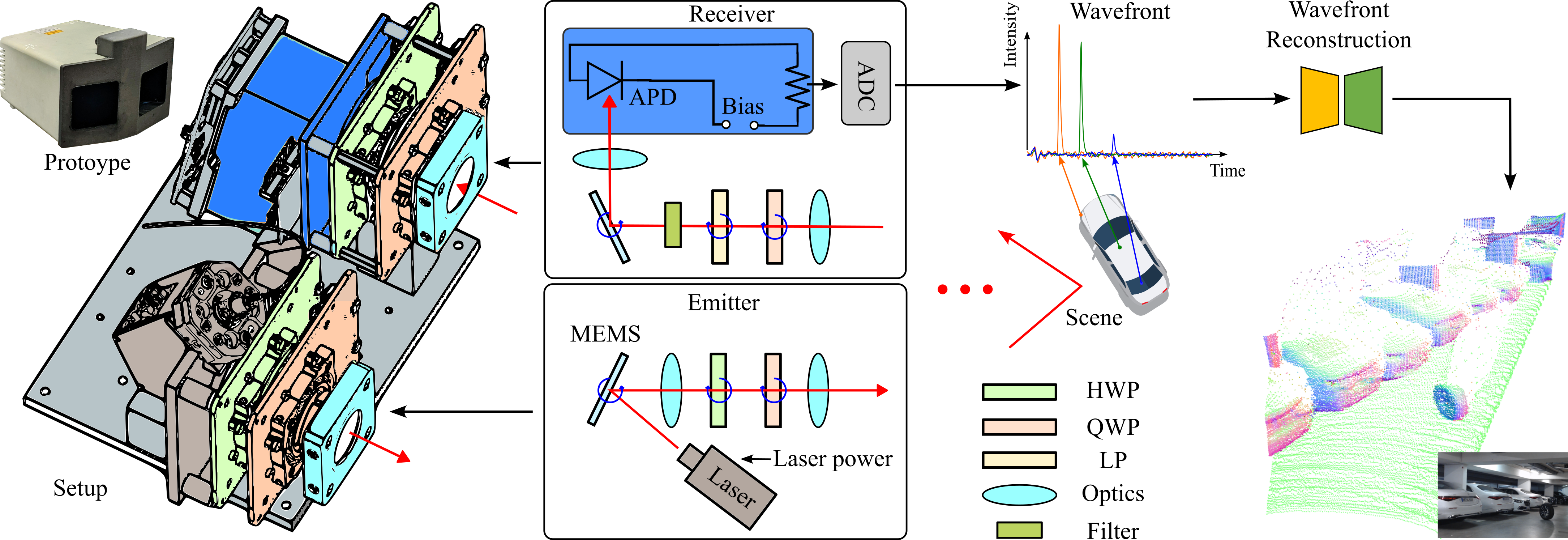

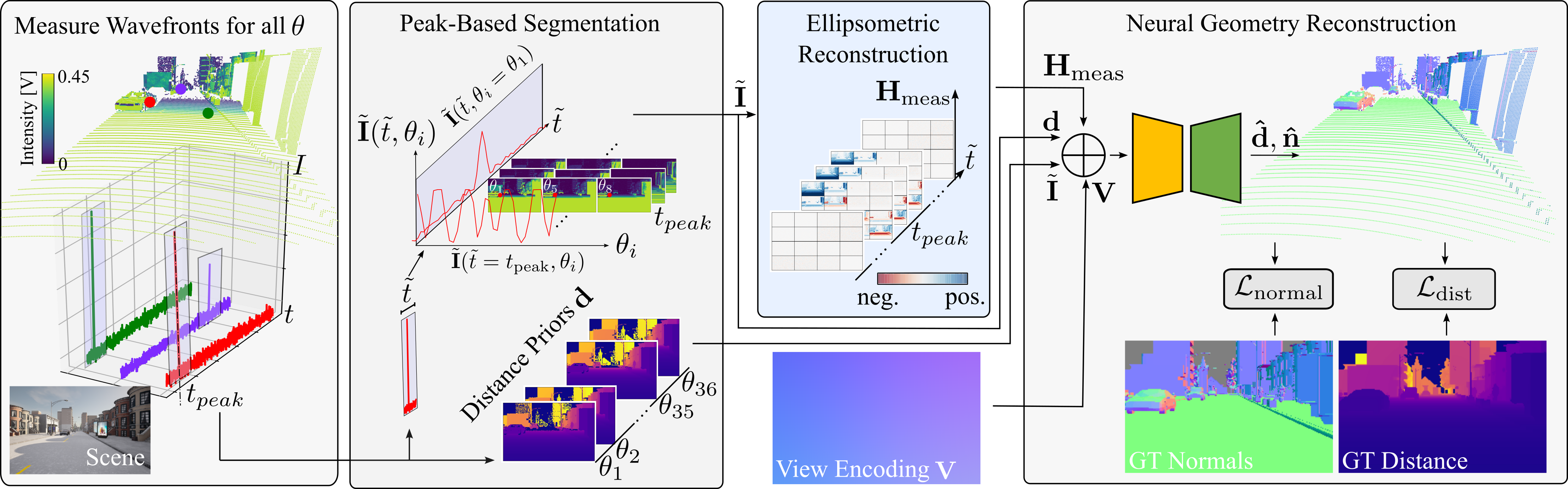

This figure illustrates the functional principle of our PolLidar sensor and its processing pipeline. The hardware prototype (left) can modulate the polarization of light during both emission and reception. For emission, a half-wave plate (HWP) and a quarter-wave plate (QWP) set the polarization state of the outgoing light, while for reception, a QWP and a linear polarizer (LP) analyze the polarization of the received light (middle-left). The returning signal is detected by an avalanche photodiode (APD) and digitized by an analog-to-digital converter (ADC), yielding the raw wavefront measurement (middle-right). Unlike traditional lidar systems, which primarily focus on distance measurements, our sensor also characterizes the polarization and wavefront of light. This configuration enables a more comprehensive reconstruction from lidar data (right), including normals, distances, and material properties, and opens new possibilities for applications such as autonomous driving and environmental monitoring.
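The HWP/QWP emission and QWP/LP reception stages can be sketched with standard Mueller calculus. The snippet below is a minimal illustration, not the authors' calibration or processing code: it assumes ideal optical elements, a horizontally polarized laser, and one common sign convention for the retarder matrix. All function names are hypothetical.

```python
import numpy as np

def retarder(theta, delta):
    """Mueller matrix of an ideal linear retarder (fast axis at theta, retardance delta).
    Sign convention for the circular terms is an assumption; conventions differ."""
    c, s = np.cos(2 * theta), np.sin(2 * theta)
    cd, sd = np.cos(delta), np.sin(delta)
    return np.array([
        [1, 0, 0, 0],
        [0, c * c + s * s * cd, c * s * (1 - cd), s * sd],
        [0, c * s * (1 - cd), s * s + c * c * cd, -c * sd],
        [0, -s * sd, c * sd, cd],
    ])

def linear_polarizer(theta):
    """Mueller matrix of an ideal linear polarizer with transmission axis at theta."""
    c, s = np.cos(2 * theta), np.sin(2 * theta)
    return 0.5 * np.array([
        [1, c, s, 0],
        [c, c * c, c * s, 0],
        [s, c * s, s * s, 0],
        [0, 0, 0, 0],
    ])

def emitted_state(hwp_angle, qwp_angle, laser=(1.0, 1.0, 0.0, 0.0)):
    """Stokes vector after the laser passes the HWP and then the QWP.
    The default laser state (horizontal linear) is an assumption."""
    hwp = retarder(hwp_angle, np.pi)
    qwp = retarder(qwp_angle, np.pi / 2)
    return qwp @ hwp @ np.asarray(laser, dtype=float)

def analyzer_vector(qwp_angle, lp_angle):
    """First row of LP @ QWP: maps an incoming Stokes vector to detected intensity."""
    return (linear_polarizer(lp_angle) @ retarder(qwp_angle, np.pi / 2))[0]

def measured_intensity(scene_mueller, emit, analyzer):
    """Intensity seen by the detector for one emit/analyze configuration."""
    return float(analyzer @ scene_mueller @ emit)
```

For example, under these assumptions an HWP at 22.5° followed by a QWP at 45° turns the horizontal laser state into +45° linear polarization, and a scene that preserves polarization (identity Mueller matrix) returns no intensity through a crossed analyzer.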

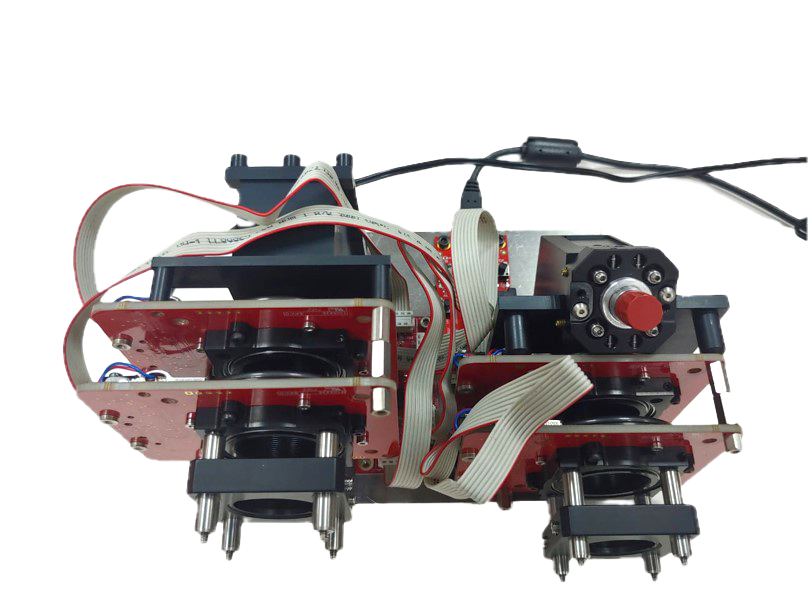

Hardware Prototype

Our hardware prototype combines a long range of up to 223 m with a high spatial resolution of 150 × 236 within a 23.95° vertical and 31.53° horizontal field-of-view. The figure on the right illustrates the internal setup of the hardware. We use separate emission and reception modules, with a MEMS micro-mirror for scanning and a digital micro-mirror device (DMD) to direct returning light, reducing light loss.

The system operates at a wavelength of 1064 nm and is equipped with a narrow bandpass filter to reduce ambient light interference while maintaining Class-1 eye safety standards. The laser power can be adjusted to balance range against signal saturation. The laser light is modulated in polarization through a half-wave and a quarter-wave plate, as explained in the section above. A back-side illuminated avalanche photodiode (APD) with an adjustable bias then reads the raw signal, which is digitized by a PCIe-5764 FlexRIO digitizer ADC sampling at 1 GS/s, providing a resolution of 15 cm per bin. This design supports a highly configurable selection of polarization states with highly resolved waveforms.
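The 15 cm bin size follows directly from the sampling rate: each sample spans 1 ns of round-trip travel, so the one-way range per bin is c / (2 f_s). A minimal sketch of that arithmetic (the function names are illustrative and not part of any PolLidar software):

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def range_bin_size(sample_rate_hz):
    """One-way distance covered by a single ADC sample (round trip halves it)."""
    return SPEED_OF_LIGHT / (2.0 * sample_rate_hz)

def bin_to_distance(bin_index, sample_rate_hz=1e9):
    """Convert a time bin of the raw wavefront to a ToF distance in meters."""
    return bin_index * range_bin_size(sample_rate_hz)

if __name__ == "__main__":
    print(f"bin size at 1 GS/s: {range_bin_size(1e9):.4f} m")
```

At 1 GS/s this gives roughly 0.15 m per bin, so the stated 223 m maximum range corresponds to a waveform of about 1,500 bins.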

Reconstruction Results

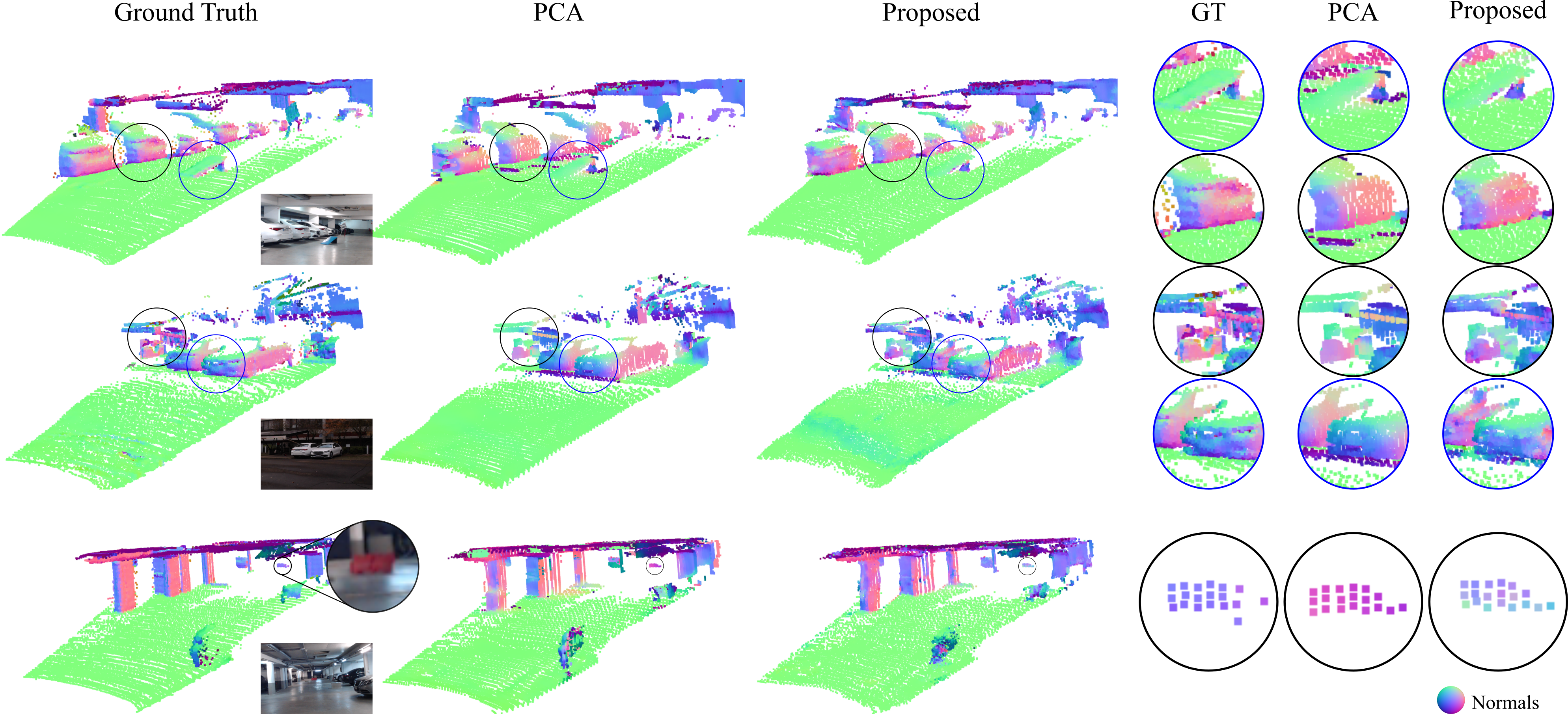

Surface Normal and Distance Reconstruction

Conventional lidar reconstruction methods (PCA) produce erroneous surface normal predictions, most noticeably for the fine structures visible in the zoom-ins of the first two rows. In contrast, the proposed method resolves these fine details by leveraging the cues from polarization. In the last row, we show a lost cargo scenario with an upright object blocking the road at a distance of 50 m. Our method correctly classifies the object as facing toward the vehicle, whereas PCA predicts a flat surface with downward-oriented normals.
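For context, the PCA baseline estimates a normal from a local neighborhood of ToF points as the direction of least variance. The sketch below shows the generic technique under the assumption that the sensor sits at the origin. It is not the exact baseline configuration used in the comparison, and neighborhood selection is left out.

```python
import numpy as np

def pca_normal(points):
    """Estimate a surface normal for a local patch of 3D points.

    points: (N, 3) array of neighboring lidar returns in sensor coordinates.
    Returns a unit normal oriented toward the sensor (assumed at the origin).
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    centered = pts - centroid
    cov = centered.T @ centered / len(pts)
    # eigh returns eigenvalues in ascending order; the first eigenvector
    # spans the direction of least variance, i.e. the plane normal.
    _, eigvecs = np.linalg.eigh(cov)
    normal = eigvecs[:, 0]
    # Flip so the normal points back toward the sensor.
    if np.dot(normal, -centroid) < 0:
        normal = -normal
    return normal
```

Because the estimate depends entirely on the spatial spread of neighboring points, sparse returns on thin or distant objects leave it poorly constrained, which is consistent with the failure cases described above.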

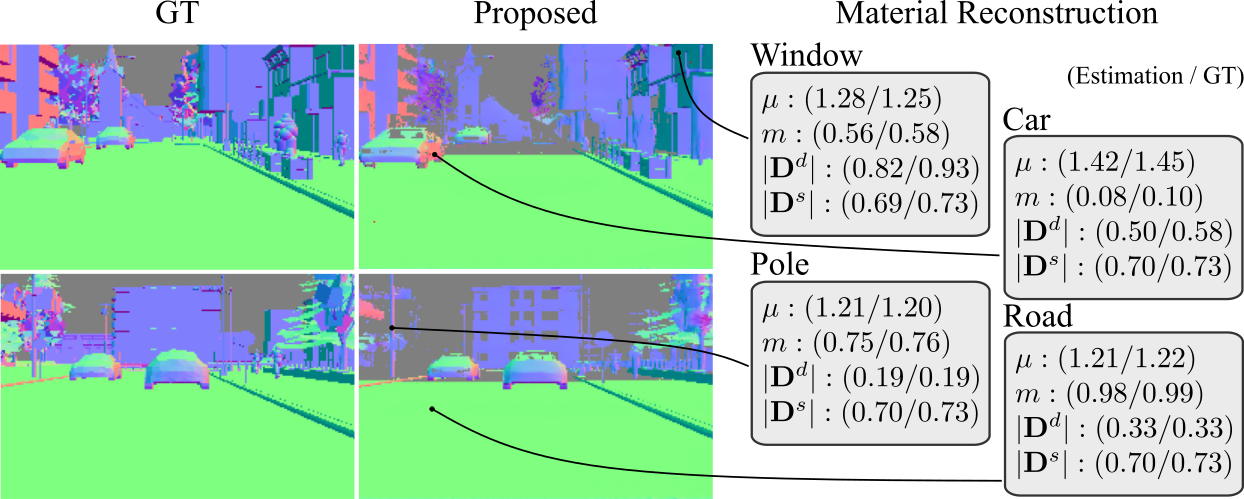

Material Property Reconstruction

We further leverage polarization to reconstruct the material properties of the scene, as the polarization changes depending on the materials the lidar beam is incident on. To this end, we use the reconstructed surface normals and follow the re-render approach of Baek et al. [2] to recover index of refraction, roughness and reflectivity.
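To illustrate why polarization carries material information, the sketch below evaluates the textbook Fresnel reflectances of a smooth dielectric for s- and p-polarized light. This is only a simplified illustration, assuming light arriving from air and a purely specular interface. It is not the re-rendering model of Baek et al. [2], which also accounts for roughness and diffuse reflection.

```python
import numpy as np

def fresnel_reflectance(theta_i, n):
    """Power reflectance (R_s, R_p) at an air-to-dielectric interface.

    theta_i: incidence angle in radians, n: index of refraction of the material.
    """
    cos_i = np.cos(theta_i)
    sin_t = np.sin(theta_i) / n          # Snell's law with n_air = 1
    cos_t = np.sqrt(1.0 - sin_t ** 2)
    r_s = (cos_i - n * cos_t) / (cos_i + n * cos_t)
    r_p = (n * cos_i - cos_t) / (n * cos_i + cos_t)
    return r_s ** 2, r_p ** 2
```

At normal incidence both reflectances coincide, while at oblique angles they diverge by an amount that depends on the index of refraction. This is why surface normals and material parameters have to be reasoned about jointly.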

Neural Geometry Reconstruction

We capture raw polarization wavefronts of the scene. We apply a peak-based segmentation technique to obtain a sliced polarization wavefront and distance priors. Via ellipsometric reconstruction, we estimate a sliced Mueller matrix. Finally, we concatenate all the polarization priors with the viewing direction as the input to a neural network that predicts distance and normals for the scene. We supervise the network with a normal loss and a distance loss.
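The first two steps can be sketched for a single pixel as follows. This is a minimal sketch under stated assumptions: a single dominant return per pixel, a fixed window around the peak, and a plain linear least-squares fit of the 4 × 4 Mueller matrix from known emitted Stokes vectors and analyzer vectors. The function names and the window size are hypothetical, and the actual segmentation and ellipsometric reconstruction may differ.

```python
import numpy as np

def slice_at_peak(wavefronts, half_width=2):
    """Locate the dominant return and integrate each waveform around it.

    wavefronts: (K, T) array, one time-resolved waveform per polarization
    configuration. Returns (peak_bin, intensities), intensities of shape (K,).
    The peak bin times the range bin size serves as a distance prior.
    """
    total = wavefronts.sum(axis=0)
    peak = int(np.argmax(total))
    lo = max(peak - half_width, 0)
    hi = min(peak + half_width + 1, wavefronts.shape[1])
    return peak, wavefronts[:, lo:hi].sum(axis=1)

def fit_mueller(intensities, emit_states, analyzers):
    """Least-squares Mueller matrix from K measurements I_k = a_k^T M s_k.

    emit_states: (K, 4) emitted Stokes vectors s_k.
    analyzers:   (K, 4) analyzer vectors a_k.
    Needs at least 16 measurements whose configurations span all 16 entries.
    """
    k = len(intensities)
    # Row k of the design matrix is the outer product a_k s_k^T, flattened
    # row-major so that it multiplies M.flatten().
    design = np.einsum("ki,kj->kij", analyzers, emit_states).reshape(k, 16)
    m, *_ = np.linalg.lstsq(design, intensities, rcond=None)
    return m.reshape(4, 4)
```

The fitted matrix and the peak-derived distance would then be concatenated with the viewing direction to form the per-pixel network input described above.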

Dataset

In order to use the proposed neural geometry reconstruction approach, a sufficient amount of training data is required. However, the finely controllable polarization elements come at the cost of longer measurement times. Thus, we pre-train in CARLA, where we simulate our PolLidar, and only finetune on the real-world PolLidar dataset.

PolLidar Dataset

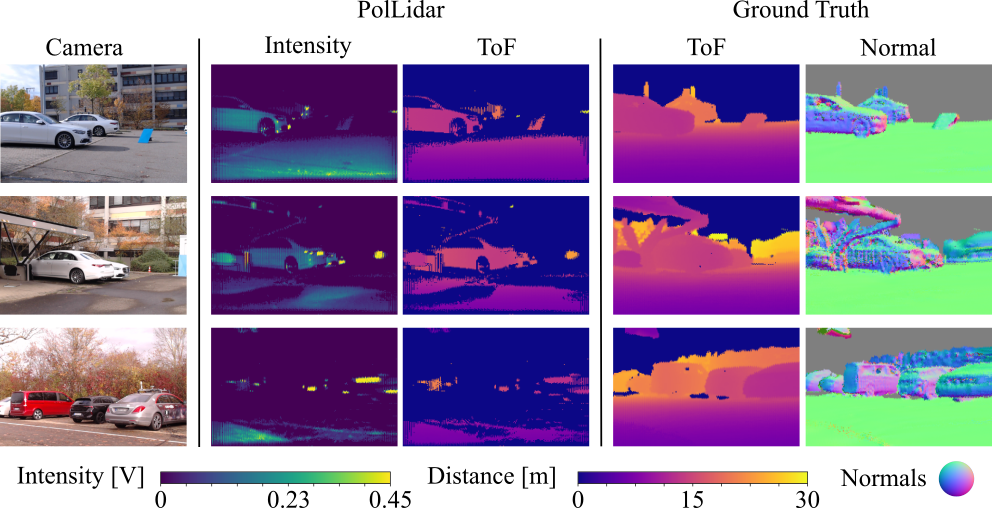

We capture a long-range polarimetric lidar dataset in typical automotive scenes with object distances of up to 100 m. On the left is a camera reference image, followed by the PolLidar intensity for the horizontally polarized state and the sensor-derived ToF distances. On the right, we show ground-truth data from accumulated scans of a Velodyne VLS-128 lidar, which provide ToF distances and surface normals for comparison.

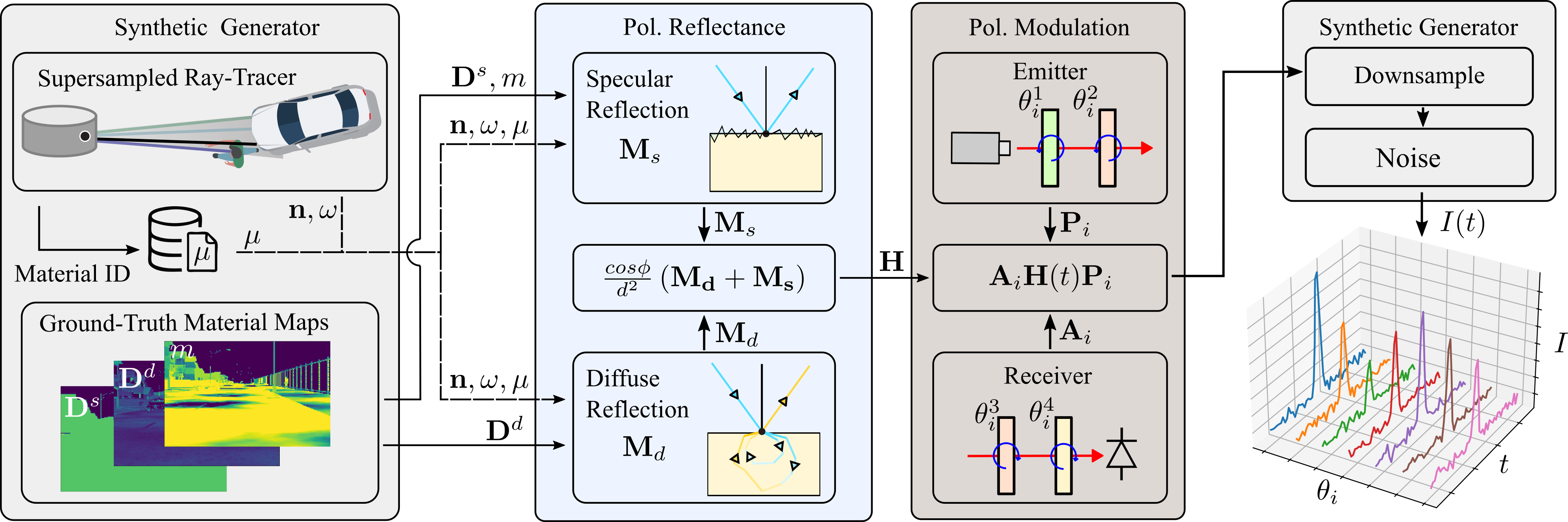

CARLA Simulation Model

We employ a PolLidar forward model in a simulator based on CARLA that generates synthetic polarimetric raw wavefronts. To this end, we extract material properties and normals from CARLA and feed them into the forward model. The resulting temporal wavefronts are subsequently downsampled along the spatial dimensions to model beam divergence, and noise is added to simulate the APD and ADC.
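The last stage of such a simulation can be sketched as spatial average pooling followed by additive noise and quantization. Everything specific here is an assumption made for illustration: the pooling factor, the Gaussian noise model, the 12-bit depth, and the function name are hypothetical and do not reflect the actual simulator settings.

```python
import numpy as np

def simulate_sensor(wavefronts, factor=2, read_noise_std=0.01,
                    adc_bits=12, full_scale=1.0, rng=None):
    """Degrade ideal wavefronts of shape (H, W, T) to mimic the sensor.

    1. Average-pool over factor x factor pixel blocks (beam divergence).
    2. Add zero-mean Gaussian noise (stand-in for APD noise; assumption).
    3. Clip and quantize to adc_bits levels (stand-in for the ADC).
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w, t = wavefronts.shape
    h2, w2 = h // factor, w // factor
    cropped = wavefronts[:h2 * factor, :w2 * factor]
    pooled = cropped.reshape(h2, factor, w2, factor, t).mean(axis=(1, 3))
    noisy = pooled + rng.normal(0.0, read_noise_std, size=pooled.shape)
    levels = 2 ** adc_bits - 1
    clipped = np.clip(noisy, 0.0, full_scale)
    return np.round(clipped / full_scale * levels) / levels * full_scale
```

Pooling is applied only over the two spatial axes, so the temporal resolution of each waveform is preserved.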

Related Publications

[1] Chenyang Lei, Chenyang Qi, Jiaxin Xie, Na Fan, Vladlen Koltun, and Qifeng Chen. Shape from Polarization for Complex Scenes in the Wild. CVPR 2022.

[2] Seung-Hwan Baek and Felix Heide. All-photon Polarimetric Time-of-Flight Imaging. CVPR 2022.

[3] Kei Ikemura, Yiming Huang, Felix Heide, Zhaoxiang Zhang, Qifeng Chen, and Chenyang Lei. Robust Depth Enhancement via Polarization Prompt Fusion Tuning. CVPR 2024.