Differentiable Compound Optics and Processing Pipeline Optimization for End-to-end Camera Design

- Ethan Tseng

- Ali Mosleh

- Fahim Mannan

- Karl St-Arnaud

- Avinash Sharma

- Yifan (Evan) Peng

- Alexander Braun

- Derek Nowrouzezahrai

- Jean-François Lalonde

- Felix Heide

SIGGRAPH 2021

We propose an end-to-end camera design scheme that jointly optimizes compound optics together with hardware and software image post-processors. Our approach allows us to cater lens systems and the hyperparameter settings of the entire imaging pipeline towards domain-specific applications, including but not limited to automotive object detection and natural image capture.

Most modern commodity imaging systems, whether we use them directly for photography or rely on them indirectly for downstream applications, employ optical systems of multiple lenses that must balance deviations from perfect optics, manufacturing constraints, tolerances, cost, and footprint. Although optical designs often have complex interactions with downstream image processing or analysis tasks, today’s compound optics are designed in isolation from these interactions. Existing optical design tools aim to minimize optical aberrations, i.e., deviations from Gauss’ linear model of optics, instead of application-specific losses, precluding joint optimization with hardware image signal processing (ISP) and highly parameterized neural network processing. In this paper, we propose an optimization method for compound optics that lifts these limitations. We optimize entire lens systems jointly with hardware and software image processing pipelines, downstream neural network processing, and application-specific end-to-end losses. To this end, we propose a learned, differentiable forward model for compound optics and an alternating proximal optimization method that handles function compositions with highly varying parameter dimensions for optics, hardware ISP, and neural nets. Our method integrates seamlessly atop existing optical design tools, such as Zemax. We can thus assess our method across many camera system designs and end-to-end applications. We validate our approach in an automotive camera optics setting, together with hardware ISP post-processing and detection, outperforming classical optics designs for automotive object detection and traffic light state detection. For human viewing tasks, we optimize optics and processing pipelines for dynamic outdoor scenarios and dynamic low-light imaging. We outperform existing compartmentalized design or fine-tuning methods qualitatively and quantitatively, across all domain-specific applications tested.

Paper

Ethan Tseng, Ali Mosleh, Fahim Mannan, Karl St-Arnaud, Avinash Sharma, Yifan Peng, Alexander Braun, Derek Nowrouzezahrai, Jean-François Lalonde, Felix Heide

Differentiable Compound Optics and Processing Pipeline Optimization for End-to-end Camera Design

SIGGRAPH 2021

End-to-end Camera Design

Existing differentiable optical design approaches rely on the paraxial approximation, simplifying the optical response to a single point-spread function (PSF). This approximation, however, only holds for small fields of view (FOVs) of about 5 degrees, whereas full ray tracing is required to accurately model optics with larger FOVs. Moreover, these methods design only a single optical element, whereas consumer and industrial optical systems commonly consist of a sequence of elements.
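One way to get a feel for why the paraxial approximation is tied to small angles is to look at its core simplification, the replacement of sin(θ) by θ. The short sketch below only quantifies that small-angle error for a few ray angles; it is an illustration of the general approximation, not a derivation of the 5-degree figure quoted above, and the function name is our own.

```python
import math


def paraxial_relative_error(angle_deg: float) -> float:
    """Relative error made by replacing sin(theta) with theta (small-angle form)."""
    theta = math.radians(angle_deg)
    return (theta - math.sin(theta)) / math.sin(theta)


# The error grows quickly with the ray angle, which is why wide fields of view
# call for full ray tracing rather than a paraxial model.
for angle in (1, 5, 20, 40):
    print(f"{angle:>2} deg: {100 * paraxial_relative_error(angle):.3f} % relative error")
```

The error stays well below one percent at a few degrees and reaches several percent at wide angles.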

Our work develops a framework for end-to-end design of compound optical systems. We do this by simulating spatially varying PSFs that capture the effects produced by complex optical pipelines, including Seidel aberrations and vignetting. Below, we show spatial PSFs corresponding to a nominal design obtained with Zemax and learned designs optimized for capturing images for human viewing, automotive object detection, and traffic light detection. Each learned optic exhibits different spatial PSFs suited to its specific task; see the paper for details.
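To make the idea of a spatially varying PSF concrete, here is a minimal sketch that blurs each pixel with the kernel of the image tile it falls in and then applies a per-tile gain as a crude stand-in for vignetting. The piecewise-constant tiling, the box-shaped kernels, the gain values, and all function names are assumptions made for illustration; the PSFs in the paper come from ray tracing and a learned model, not from this code.

```python
def box_kernel(size):
    """Normalized size x size box kernel, used here as a placeholder PSF."""
    weight = 1.0 / (size * size)
    return [[weight] * size for _ in range(size)]


def render_spatially_varying(image, kernels, gains, tile):
    """Blur every pixel with the PSF of its tile, then scale by that tile's gain."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            ty, tx = y // tile, x // tile          # which tile this pixel belongs to
            kernel = kernels[ty][tx]
            radius = len(kernel) // 2
            acc = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), height - 1)   # clamp at the border
                    xx = min(max(x + dx, 0), width - 1)
                    acc += kernel[dy + radius][dx + radius] * image[yy][xx]
            out[y][x] = gains[ty][tx] * acc
    return out


# 8x8 test image with one point source near the center tile and one near a corner.
image = [[0.0] * 8 for _ in range(8)]
image[1][1] = 1.0
image[6][6] = 1.0

# Hypothetical layout: a sharp, bright tile and three blurrier, darker tiles.
kernels = [[box_kernel(1), box_kernel(3)], [box_kernel(3), box_kernel(3)]]
gains = [[1.0, 0.8], [0.8, 0.5]]

rendered = render_spatially_varying(image, kernels, gains, tile=4)
```

In this toy layout the first point source is reproduced unchanged, while the second is spread over a 3x3 neighborhood and attenuated, which is the kind of field-dependent behavior a single global PSF cannot express.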

Differentiable Compound Optics

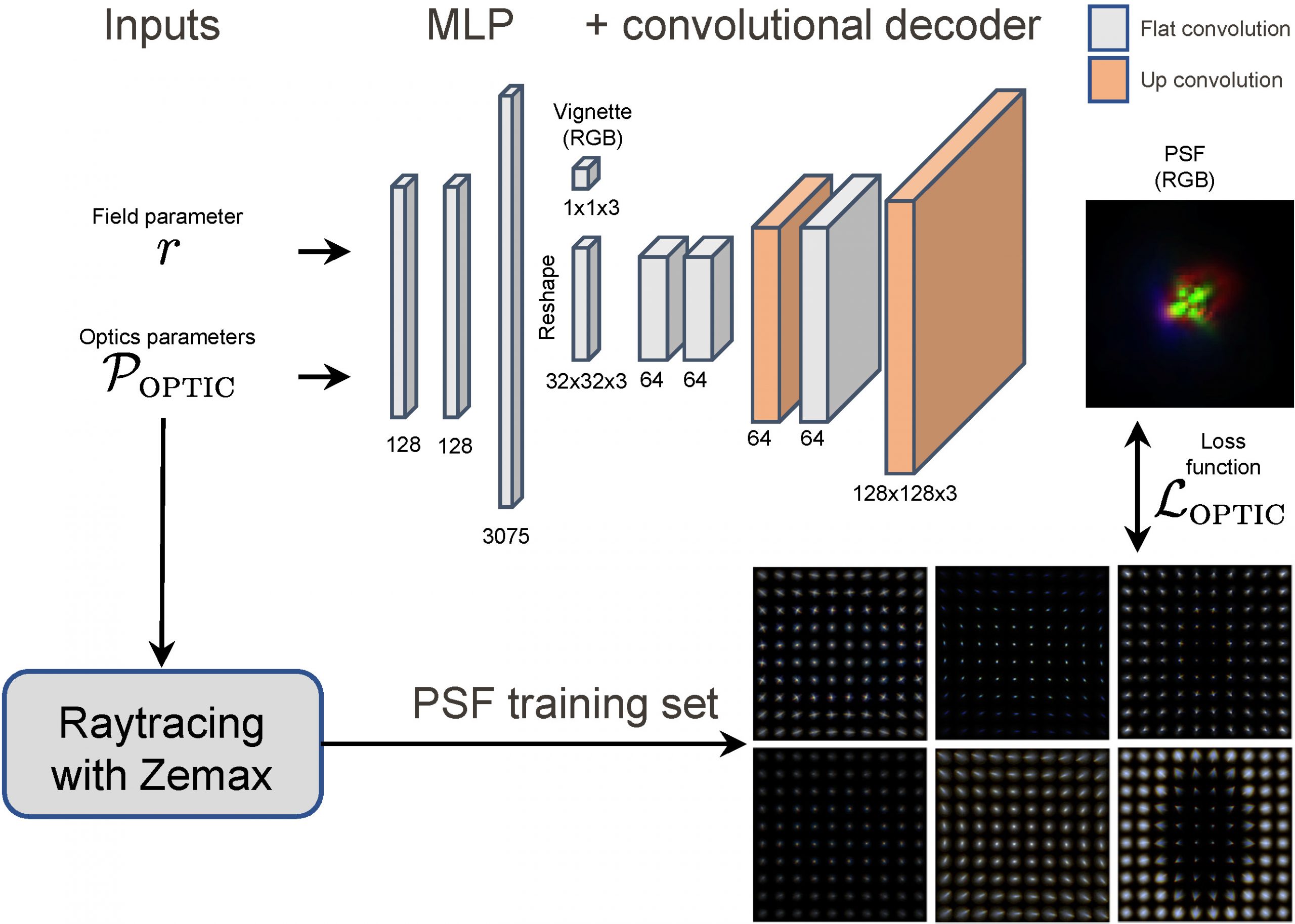

In this work, we train an optics meta-network (shown on the right) to learn spatially varying PSFs as a function of the optical parameters, using training data generated by Zemax’s ray tracer. After training, we freeze this network and use it as a differentiable module within an end-to-end imaging pipeline.
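The train-then-freeze pattern described above can be sketched on a deliberately tiny problem. In the sketch below, a hypothetical black-box function stands in for the ray tracer, a quadratic least-squares fit stands in for the meta-network, and a single scalar "spacing" stands in for the optical parameters; none of these names or choices come from the paper. The point is only the workflow: sample the black box, fit a differentiable proxy, freeze it, and then run gradient descent on the input through the proxy.

```python
def black_box_spot_size(spacing):
    """Hypothetical stand-in for a ray tracer: spot size vs. one lens spacing."""
    return 0.3 + (spacing - 2.0) ** 2


def fit_quadratic(xs, ys):
    """Least-squares fit of y = a + b*x + c*x^2 via the 3x3 normal equations."""
    feats = [[1.0, x, x * x] for x in xs]
    ata = [[sum(f[i] * f[j] for f in feats) for j in range(3)] for i in range(3)]
    aty = [sum(f[i] * y for f, y in zip(feats, ys)) for i in range(3)]
    m = [row + [rhs] for row, rhs in zip(ata, aty)]   # augmented matrix
    for col in range(3):                              # Gauss-Jordan with pivoting
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [p - factor * q for p, q in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]


# 1) Generate training data by querying the black box.
xs = [1.0 + 0.25 * i for i in range(9)]
ys = [black_box_spot_size(x) for x in xs]

# 2) Fit the proxy once; its coefficients are frozen from here on.
a, b, c = fit_quadratic(xs, ys)


def proxy(x):
    return a + b * x + c * x * x


def proxy_grad(x):
    return b + 2.0 * c * x


# 3) Optimize the input parameter by gradient descent through the frozen proxy.
spacing = 1.2
for _ in range(200):
    spacing -= 0.1 * proxy_grad(spacing)

print(f"optimized spacing: {spacing:.4f}, proxy spot size: {proxy(spacing):.4f}")
```

Because the proxy is differentiable, the black box itself never needs to provide gradients; it is only queried to produce training samples.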

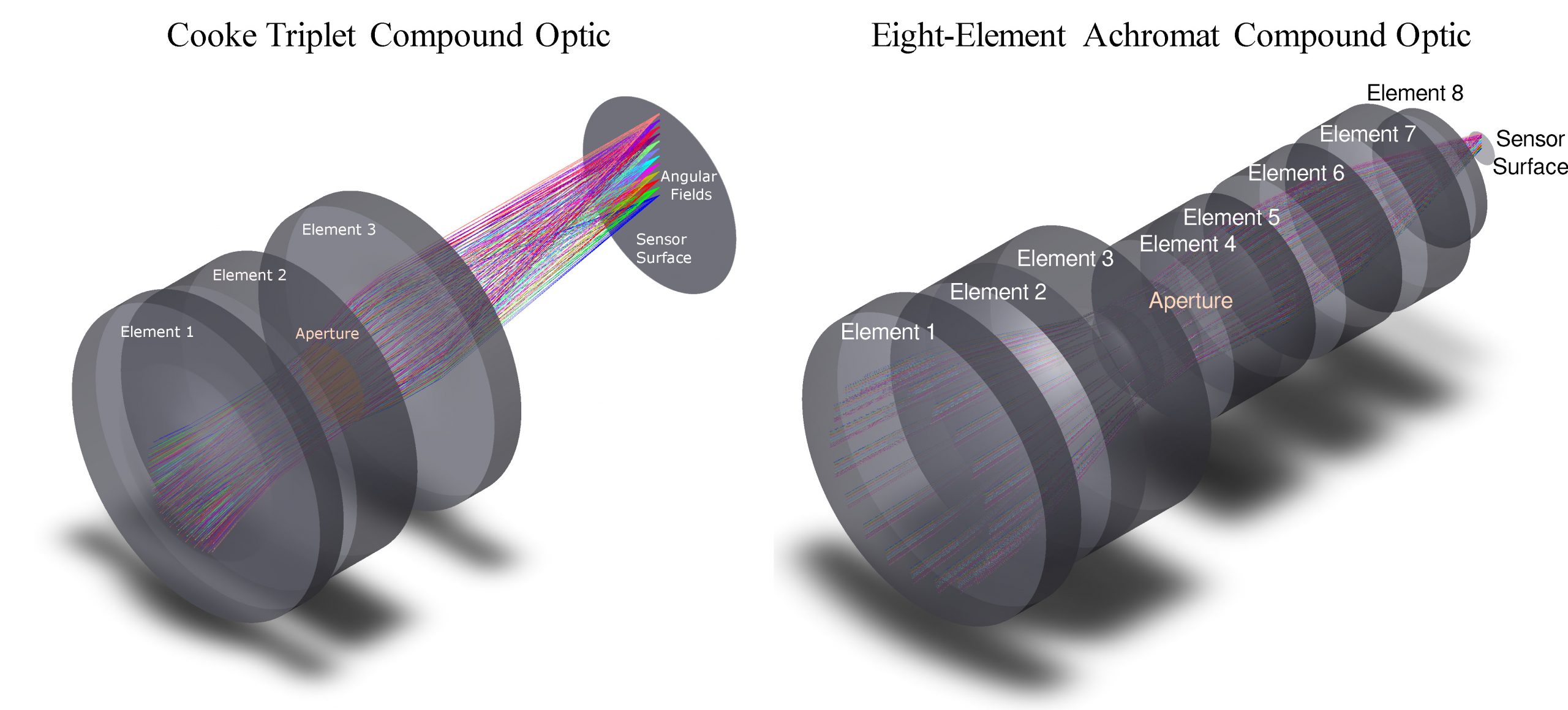

Optimization for Established Lens Designs

We demonstrate our optimization method using established lens designs: a Cooke triplet, which consists of three optical elements that correct Seidel aberrations, and an eight-element achromat compound lens. In our experiments, we compare against a nominal design obtained with the Hammer optimization in Zemax.

Compound Optics for Improved Image Quality

We first perform end-to-end optimization for the common task of capturing images for human viewing. Here, our pipeline consists of a Cooke triplet and an ARM Mali-C71 ISP for image processing. We manufacture both the optimized and nominal lens designs and compare them on real-world captures.

Images produced using the nominal optics (left) are blurrier and have more color artifacts than images produced using our optimized optics (right). The end-to-end optimized optic trades sharp central focus for more compact PSFs across the FOV. This enables the downstream ISP to produce high-quality tone-mapped images, even though the spot size of the center PSF is slightly larger.

Compound Optics for Object Detection

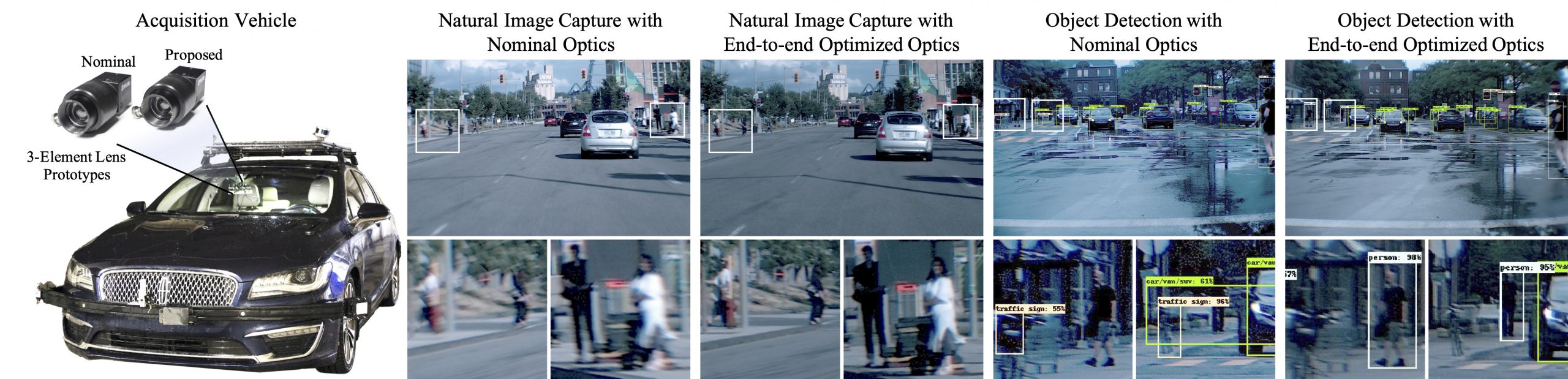

We now jointly optimize a full end-to-end pipeline for automotive object detection, consisting of a Cooke triplet compound lens, an ARM Mali-C71 ISP, and a Faster-RCNN. Our learned optic features uniform aberrations across all fields, which we attribute to the fact that it is detrimental for the convolutional detector to learn spatially varying processing. Again, we manufacture the learned and nominal compound lenses and demonstrate improved performance on real-world scenes; see the qualitative results on the right.
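Joint optimization of blocks with very different sizes, as in the lens, ISP, and detector pipeline above, is often organized as an alternating scheme. The sketch below shows a generic alternating proximal-gradient loop on a toy convex loss: a single constrained scalar `u` plays the role of an optics parameter and a small vector `v` plays the role of processing parameters. The loss, the box constraint, the step sizes, and all names are assumptions chosen for illustration; this page does not spell out the authors' algorithm, and this is not it.

```python
TARGETS = [0.5, -1.0, 2.0]   # hypothetical coupling between the two parameter blocks


def loss(u, v):
    """Toy end-to-end loss coupling the scalar block u and the vector block v."""
    return (u - 1.5) ** 2 + sum((vi - u * t) ** 2 for vi, t in zip(v, TARGETS))


def grad_u(u, v):
    return 2.0 * (u - 1.5) - 2.0 * sum(t * (vi - u * t) for vi, t in zip(v, TARGETS))


def grad_v(u, v):
    return [2.0 * (vi - u * t) for vi, t in zip(v, TARGETS)]


def prox_box(x, lo, hi):
    """Proximal operator of the indicator of [lo, hi], i.e. a projection."""
    return min(max(x, lo), hi)


u, v = 0.2, [0.0, 0.0, 0.0]
for _ in range(200):
    # Block 1: gradient step on u, then the proximal step enforcing 0 <= u <= 1.
    u = prox_box(u - 0.05 * grad_u(u, v), 0.0, 1.0)
    # Block 2: plain gradient step on v with u held fixed.
    v = [vi - 0.25 * gi for vi, gi in zip(v, grad_v(u, v))]

print(f"u = {u:.4f}, v = {[round(vi, 4) for vi in v]}, loss = {loss(u, v):.4f}")
```

Each block gets its own step size and its own constraint handling, which is the practical appeal of alternating schemes when the blocks differ widely in dimension and behavior. Here the unconstrained optimum for `u` lies outside the box, so the proximal step keeps it at the upper bound while `v` adapts to it.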

Compound Optics for Traffic Light Detection

We also learn a specialized compound optic for an end-to-end traffic light detection pipeline, using the same Cooke triplet compound lens, ARM Mali-C71 ISP, and Faster-RCNN. The optic optimized for traffic light detection is slightly sharper in the center and the periphery than the optic optimized for object detection, due to the size of traffic lights. We use the same synchronized dual-camera setup to validate the manufactured learned optic on real-world scenes; see the qualitative results on the left.

Related Publications

[1] Ethan Tseng, Felix Yu, Yuting Yang, Fahim Mannan, Karl St-Arnaud, Derek Nowrouzezahrai, Jean-François Lalonde, and Felix Heide. Hyperparameter optimization in black-box image processing using differentiable proxies. ACM Transactions on Graphics (SIGGRAPH), 38(4):27, 2019

[2] Ali Mosleh, Avinash Sharma, Emmanuel Onzon, Fahim Mannan, Nicolas Robidoux, and Felix Heide. Hardware-in-the-loop End-to-end Optimization of Camera Image Processing Pipelines. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020

[3] Qilin Sun, Ethan Tseng, Qiang Fu, Wolfgang Heidrich, and Felix Heide. Learning Rank-1 Diffractive Optics for Single-shot High Dynamic Range Imaging. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020

[4] Yifan Peng, Qilin Sun, Xiong Dun, Gordon Wetzstein, Wolfgang Heidrich, and Felix Heide. Learned Large Field-of-View Imaging with Thin-Plate Optics. ACM Transactions on Graphics (SIGGRAPH Asia), 38(6):219, 2019

[5] Nicolas Robidoux, Luis Eduardo Garcia Capel, Dong-eun Seo, Avinash Sharma, Federico Ariza, and Felix Heide. End-to-end High Dynamic Range Camera Pipeline Optimization. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021