A Multi-Modal Benchmark for Long-Range Depth Evaluation in Adverse Weather Conditions

- Stefanie Walz*

- Andrea Ramazzina*

- Dominik Scheuble*

- Samuel Brucker

- Alexander Zuber

- Werner Ritter

- Mario Bijelic

- Felix Heide

IROS 2025

Depth estimation is a cornerstone computer vision task that is critical for scene understanding and autonomous driving. In real-world scenarios, reliable depth perception under adverse weather is essential for safety and robustness. However, quantitatively evaluating depth estimation methods in such conditions is challenging because ground truth data is difficult to obtain. Weather chambers offer controlled environments for simulating diverse weather, but existing weather-chamber datasets are limited in range and lack dense ground truth. To address this gap, we introduce a new evaluation benchmark that extends depth assessment up to 200 meters under clear, foggy, and rainy conditions. We employ a multi-modal sensor setup featuring state-of-the-art RGB, RCCB, and gated stereo cameras and a long-range LiDAR. In addition, we capture a millimeter-scale digital twin of the test facility using a high-end geodesic laser scanner. This comprehensive benchmark enables a more precise evaluation of different models and sensing modalities, including at long range.

Adverse Weather Dataset

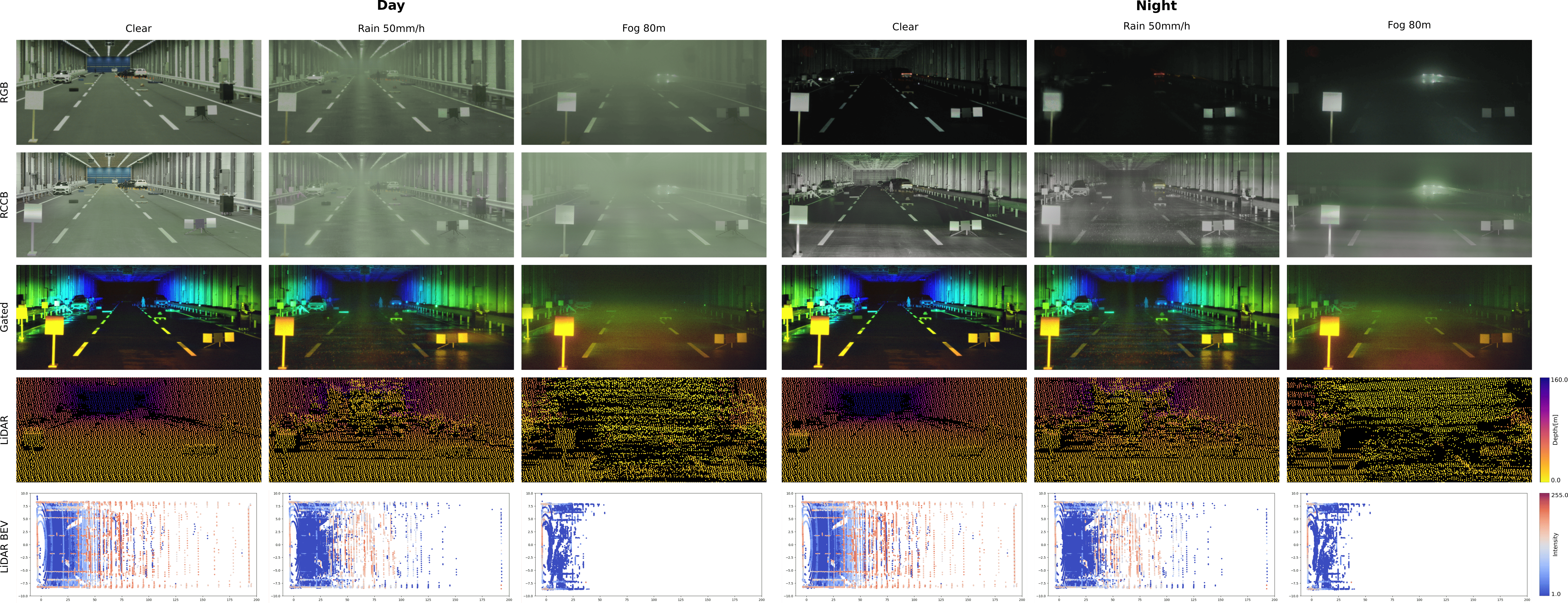

We propose an adverse weather dataset captured in the Japan Automobile Research Institute weather chamber, an advanced facility designed to simulate diverse weather conditions for research and testing. The chamber measures 200 m in length and 15 m in width, with three 3.5 m-wide lanes supporting a variety of experimental setups. Its lighting system provides adjustable horizontal illuminance from 200 to 1,600 lx in 50 m dimming zones at a fixed color temperature of 5,000 K. Rainfall is simulated by two sprinkler systems that produce 640 µm and 1,400 µm droplets at intensities of 30, 50, and 80 mm/h. Fog is generated from 7.5 µm particles, with visibility configurable from 10 to 80 m.
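To relate the quoted fog visibilities to what an active sensor such as a LiDAR or gated camera actually receives, the sketch below converts visibility into an extinction coefficient via Koschmieder's law and evaluates Beer-Lambert round-trip attenuation. It assumes the chamber's visibility follows the common meteorological definition (5% contrast threshold) and ignores backscatter and multiple scattering; the function names are illustrative and not part of any dataset tooling.

```python
import math

# Assumption: "visibility" is the meteorological optical range, i.e. the distance
# at which contrast falls to 5% (Koschmieder's law). Not stated in the source text.
CONTRAST_THRESHOLD = 0.05


def extinction_coefficient(visibility_m: float) -> float:
    """Extinction coefficient beta in 1/m: beta = -ln(0.05) / V (about 3 / V)."""
    return -math.log(CONTRAST_THRESHOLD) / visibility_m


def two_way_transmission(distance_m: float, visibility_m: float) -> float:
    """Beer-Lambert round-trip transmission exp(-2 * beta * d) for an active sensor.

    Ignores backscatter and multiple scattering, which matter in dense fog.
    """
    beta = extinction_coefficient(visibility_m)
    return math.exp(-2.0 * beta * distance_m)


if __name__ == "__main__":
    # Visibility settings within the chamber's quoted 10-80 m range.
    for visibility in (10.0, 20.0, 40.0, 80.0):
        beta = extinction_coefficient(visibility)
        t_50 = two_way_transmission(50.0, visibility)
        print(f"V = {visibility:4.0f} m  beta = {beta:.4f} 1/m  T(50 m) = {t_50:.2e}")
```

Under these assumptions, the round-trip transmission to a target located at exactly the visibility distance is 0.05² = 0.25%, which illustrates how strongly the 10 m fog setting attenuates active sensing compared with the 80 m setting at the same target distance.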

We construct realistic automotive scenarios featuring cars, mannequins representing pedestrians, and lost cargo objects positioned at varying distances. Our dataset focuses on diverse and challenging environments, including highway scenes and construction sites. Data is collected during both day and night under reproducible weather conditions: clear weather, multiple fog levels, and varying rainfall rates. We provide sensor measurements from three state-of-the-art camera systems (an RGB stereo camera, an RCCB stereo camera, and a gated stereo camera) as well as a long-range LiDAR sensor.

Ground Truth Acquisition

We obtain high-precision ground truth depth measurements for static scenarios using a Leica ScanStation P30 laser scanner. This system has a 360° horizontal and 290° vertical field of view, operates at a wavelength of 1550 nm, and captures up to 1 million points per second with an angular accuracy of 8″ and a range accuracy of 1.2 mm + 10 parts per million (ppm). To increase resolution and minimize occlusions, we merge multiple point clouds captured from different, overlapping positions. Each individual scan yields approximately 157M points and takes about 5 minutes, which restricts this high-resolution acquisition to a limited number of static scenes. All sensors in our automotive suite are calibrated relative to this ground truth point cloud. We use generalized iterative closest point (GICP) to estimate the rotation and translation between the Leica laser scan and the Velodyne LiDAR point cloud.
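As a worked example of what the quoted P30 specifications imply for long-range ground truth, the sketch below evaluates the range error bound (1.2 mm + 10 ppm) and the lateral offset caused by the 8″ angular accuracy at several distances. It treats the datasheet figures as per-point error bounds and ignores errors from merging overlapping scans and from sensor calibration, so it should be read as an optimistic bound rather than a characterization of the dataset.

```python
import math

# Leica ScanStation P30 figures as quoted in the text.
RANGE_CONST_MM = 1.2        # constant range-error term (mm)
RANGE_PPM = 10.0            # distance-proportional term (parts per million)
ANGULAR_ARCSEC = 8.0        # angular accuracy (arcseconds)


def range_error_mm(distance_m: float) -> float:
    """Range accuracy bound: 1.2 mm + 10 ppm of the measured distance."""
    return RANGE_CONST_MM + RANGE_PPM * 1e-6 * distance_m * 1000.0


def lateral_error_mm(distance_m: float) -> float:
    """Lateral offset from the angular error (small-angle approximation: s = r * theta)."""
    theta_rad = math.radians(ANGULAR_ARCSEC / 3600.0)
    return distance_m * 1000.0 * theta_rad


if __name__ == "__main__":
    for d in (10.0, 50.0, 100.0, 200.0):
        print(f"{d:5.0f} m: range {range_error_mm(d):.1f} mm, lateral {lateral_error_mm(d):.1f} mm")
```

At the 200 m limit of the benchmark this gives about 3.2 mm in range and about 7.8 mm laterally, so under these assumptions both terms stay below a centimeter.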

Depth Benchmark

While extensive research exists on depth estimation and completion, for conciseness our benchmark evaluates one representative algorithm from each category. In particular, we use DPT [5] as the monocular depth estimation model, CREStereo [3] as the stereo approach, CompletionFormer [4] as the LiDAR depth completion method, Gated Stereo [2] for gated stereo depth estimation, and Gated-RCCB-Stereo [1] for cross-spectral stereo depth estimation. Additionally, DPT, CREStereo, and CompletionFormer are trained and tested with both RGB and RCCB images as input, enabling a comprehensive comparison between camera modalities.
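For the stereo-based methods, depth follows from triangulation on rectified images, z = f·B/d, so a fixed disparity error produces a depth error that grows quadratically with distance (|Δz| ≈ z²·|Δd|/(f·B)). The sketch below illustrates this first-order relation, which suggests why evaluation out to 200 m can separate methods that look similar at short range. The focal length and baseline are hypothetical values, not the calibration of the sensors used here, and the gated methods additionally exploit gating cues that this simple model does not capture.

```python
def disparity_to_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Rectified pinhole stereo: z = f * B / d."""
    if disparity_px <= 0.0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float, disparity_error_px: float, focal_px: float, baseline_m: float) -> float:
    """First-order error propagation: |dz| = z^2 / (f * B) * |dd|."""
    return depth_m ** 2 / (focal_px * baseline_m) * abs(disparity_error_px)


if __name__ == "__main__":
    # Hypothetical camera: 2000 px focal length, 0.3 m baseline (not the dataset's calibration).
    FOCAL_PX, BASELINE_M = 2000.0, 0.3
    for z in (20.0, 50.0, 100.0, 200.0):
        d = FOCAL_PX * BASELINE_M / z
        err = depth_error(z, 0.25, FOCAL_PX, BASELINE_M)
        print(f"z = {z:5.0f} m  disparity = {d:6.2f} px  depth error (0.25 px) = {err:6.2f} m")
```

With these hypothetical parameters, a 0.25 px disparity error corresponds to about 0.17 m of depth error at 20 m but about 17 m at 200 m.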

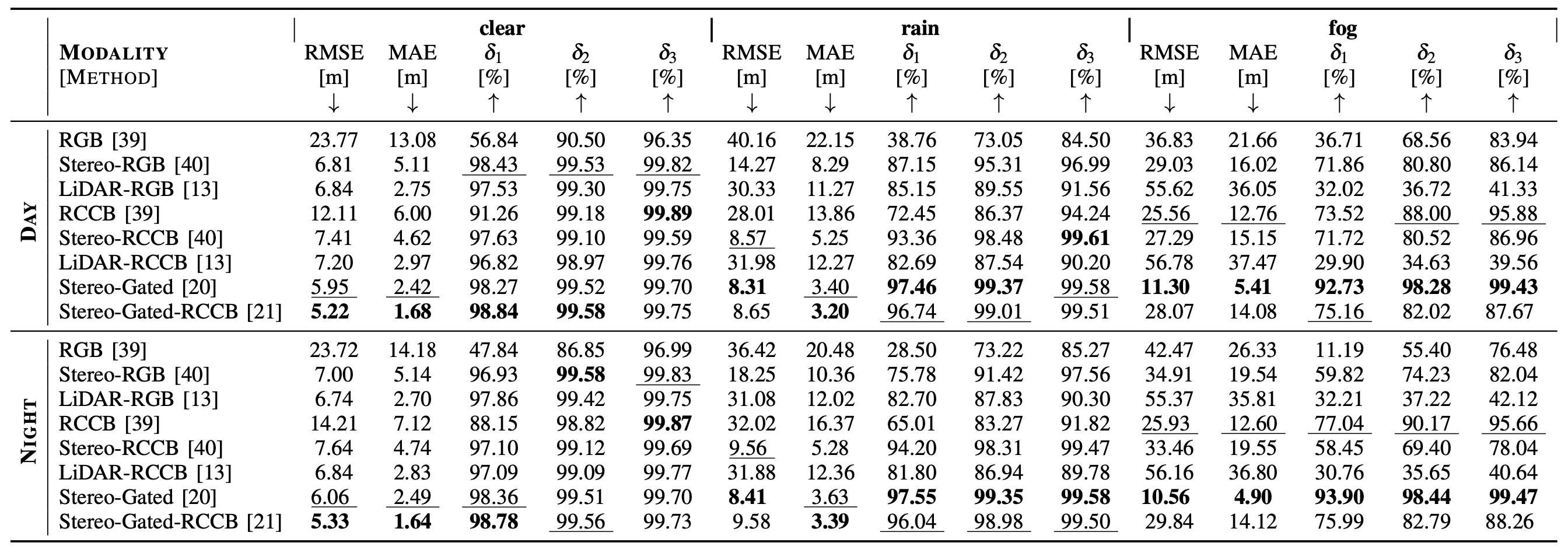

Quantitative Results

Quantitative comparison of all benchmarked algorithms in clear and adverse weather conditions. Best results in each category are in bold and second best are underlined.

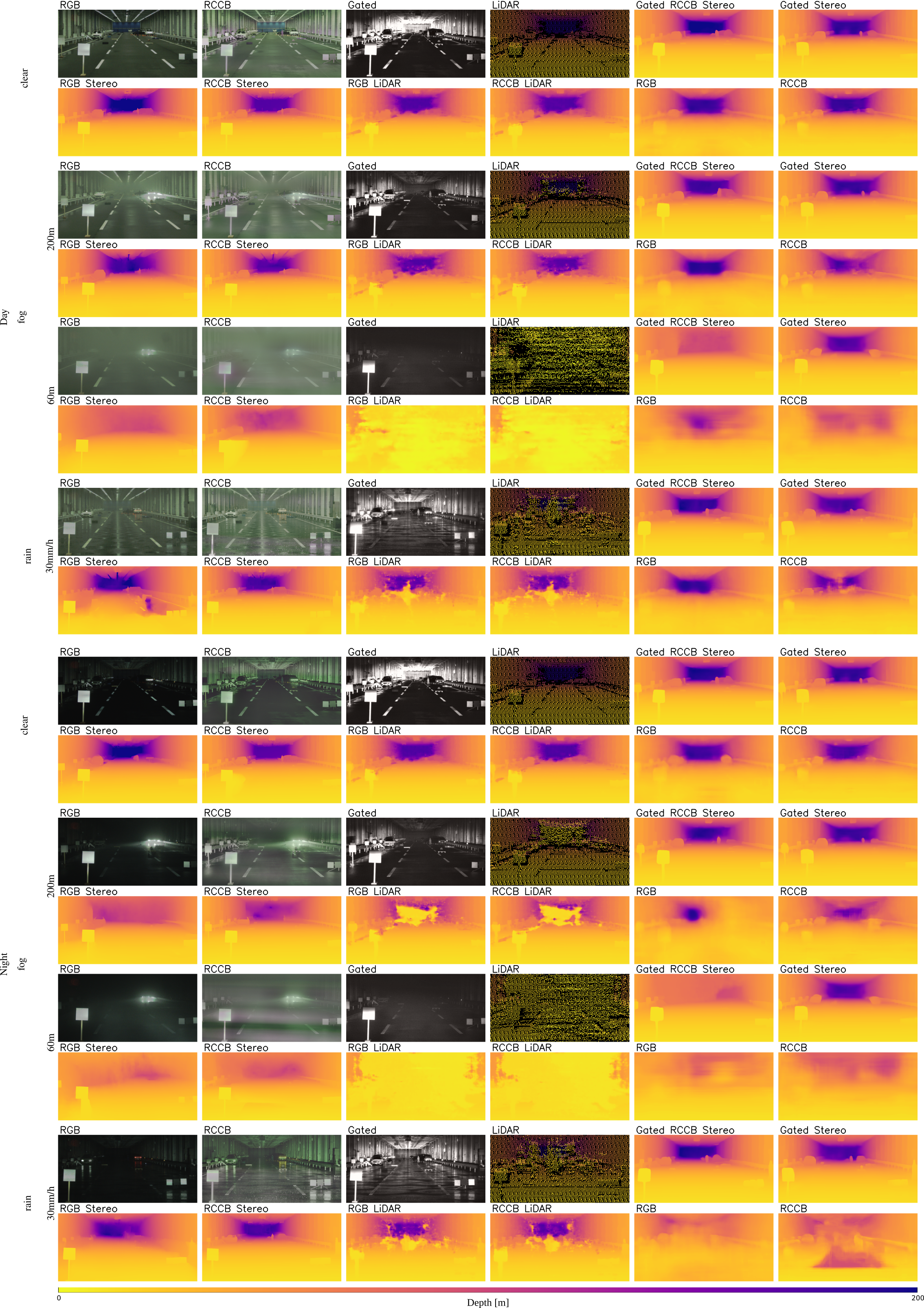
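The text does not state which metrics the table reports. As a hedged sketch of how a depth prediction might be scored against sparse ground truth, the code below computes common depth metrics (MAE, RMSE, absolute relative error, and δ < 1.25 threshold accuracy) over pixels with valid ground truth, optionally split into distance bins up to 200 m. The metric set, the bin edges, and the flat-list representation of depth maps are assumptions chosen for illustration.

```python
import math
from typing import Dict, Sequence, Tuple


def depth_metrics(pred: Sequence[float], gt: Sequence[float], max_depth: float = 200.0) -> Dict[str, float]:
    """Common depth metrics over pixels with valid ground truth (0 < gt <= max_depth).

    Returns an empty dict if no pixel is valid.
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if 0.0 < g <= max_depth]
    if not pairs:
        return {}
    n = len(pairs)
    errors = [abs(p - g) for p, g in pairs]
    return {
        "mae": sum(errors) / n,
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
        "abs_rel": sum(e / g for e, (_, g) in zip(errors, pairs)) / n,
        # Threshold accuracy; non-positive predictions count as failures.
        "delta1": sum(1 for p, g in pairs if p > 0.0 and max(p / g, g / p) < 1.25) / n,
        "count": float(n),
    }


def binned_metrics(
    pred: Sequence[float],
    gt: Sequence[float],
    edges: Sequence[float] = (0.0, 50.0, 100.0, 150.0, 200.0),
) -> Dict[Tuple[float, float], Dict[str, float]]:
    """Metrics per distance bin (lo, hi], binned by ground-truth depth."""
    results = {}
    for lo, hi in zip(edges[:-1], edges[1:]):
        idx = [i for i, g in enumerate(gt) if lo < g <= hi]
        results[(lo, hi)] = depth_metrics([pred[i] for i in idx], [gt[i] for i in idx], max_depth=hi)
    return results
```

For example, `depth_metrics([10.0, 60.0, 190.0], [10.0, 50.0, 200.0])` yields an MAE of 20/3 ≈ 6.67 m and δ1 = 1.0; pixels with a ground truth of 0 are treated as missing and skipped.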

Qualitative Results

Qualitative results for all benchmarked depth estimation methods in clear conditions, fog at different visibility levels, and rain. In addition to the resulting 2.5D depth maps, we show the RGB, RCCB, and gated images as well as the projected LiDAR point cloud for reference.
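Overlaying a projected point cloud, as for the LiDAR reference in the qualitative figure, amounts to transforming points into the camera frame and applying the pinhole model. The sketch below does this for a list of points and keeps the nearest depth per pixel (a simple z-buffer), producing a sparse depth map. It assumes an undistorted pinhole camera with an x-right, y-down, z-forward camera frame; the rotation, translation, and intrinsics are hypothetical inputs (LiDAR frames are often x-forward, so the rotation must also encode that axis change), and the page does not describe its actual projection pipeline.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def transform_points(
    points: Sequence[Point], rotation: Sequence[Sequence[float]], translation: Sequence[float]
) -> List[Point]:
    """Apply the rigid transform p' = R p + t (R given as a 3x3 nested list)."""
    return [
        tuple(sum(rotation[r][c] * p[c] for c in range(3)) + translation[r] for r in range(3))
        for p in points
    ]


def project_to_depth_map(
    points_cam: Sequence[Point], fx: float, fy: float, cx: float, cy: float, width: int, height: int
) -> Dict[Tuple[int, int], float]:
    """Project camera-frame points into a sparse depth map keyed by (row, col).

    When several points hit the same pixel, the nearest one is kept (z-buffer).
    With sparse clouds, background points can still appear between foreground points.
    """
    depth: Dict[Tuple[int, int], float] = {}
    for x, y, z in points_cam:
        if z <= 0.0:
            continue  # point lies behind the camera
        u = int(round(fx * x / z + cx))  # column
        v = int(round(fy * y / z + cy))  # row
        if 0 <= u < width and 0 <= v < height and z < depth.get((v, u), float("inf")):
            depth[(v, u)] = z
    return depth
```

A dictionary keeps the example dependency-free; with an array library the same logic would typically write into a dense image initialized to zero, where zero marks pixels without a measurement.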

Related Publications

[1] Samuel Brucker, Stefanie Walz, Mario Bijelic and Felix Heide. Cross-spectral Gated-RGB Stereo Depth Estimation. The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

[2] Stefanie Walz, Mario Bijelic, Andrea Ramazzina, Amanpreet Walia, Fahim Mannan and Felix Heide. Gated Stereo: Joint Depth Estimation from Gated and Wide-Baseline Active Stereo Cues. The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

[3] Jiankun Li, Peisen Wang, Pengfei Xiong, Tao Cai, Ziwei Yan, Lei Yang, Jiangyu Liu, Haoqiang Fan and Shuaicheng Liu. Practical Stereo Matching via Cascaded Recurrent Network with Adaptive Correlation. The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

[4] Youmin Zhang, Xianda Guo, Matteo Poggi, Zheng Zhu, Guan Huang and Stefano Mattoccia. CompletionFormer: Depth Completion with Convolutions and Vision Transformers. The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

[5] René Ranftl, Alexey Bochkovskiy and Vladlen Koltun. Vision Transformers for Dense Prediction. The IEEE/CVF International Conference on Computer Vision (ICCV), 2021.